Nasty OTAA connect

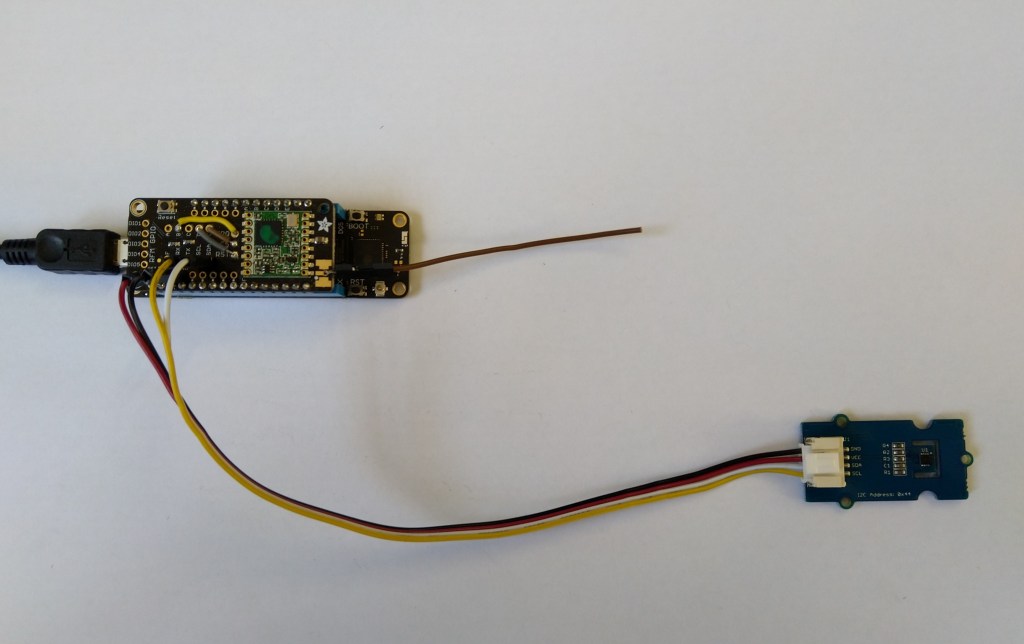

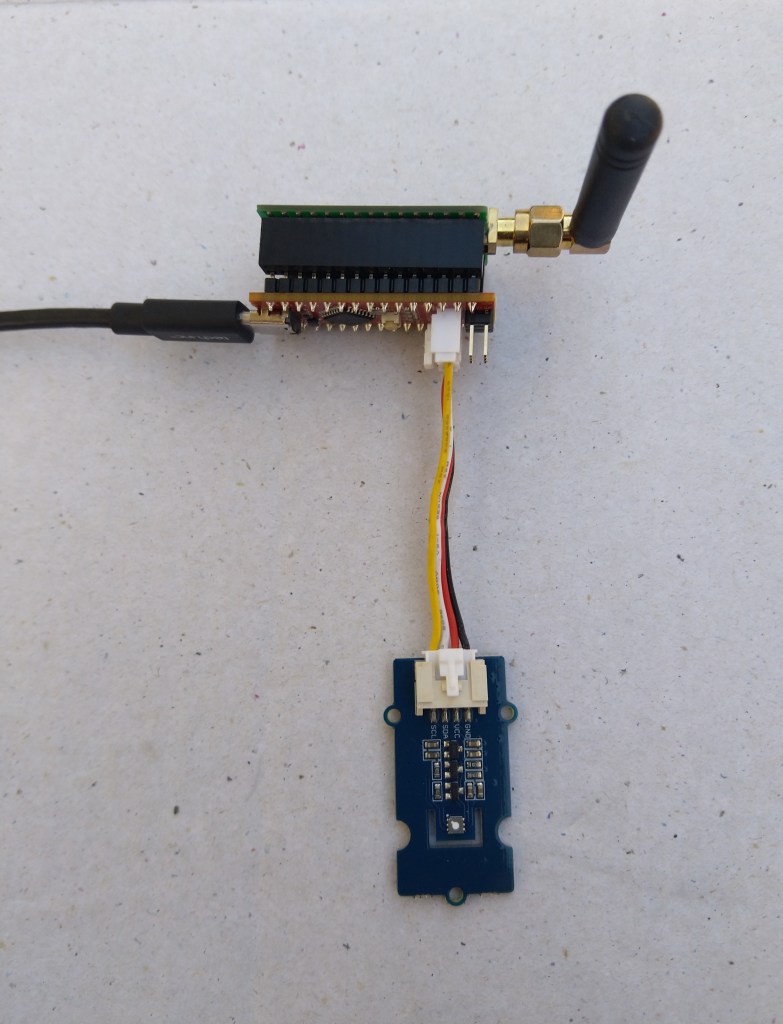

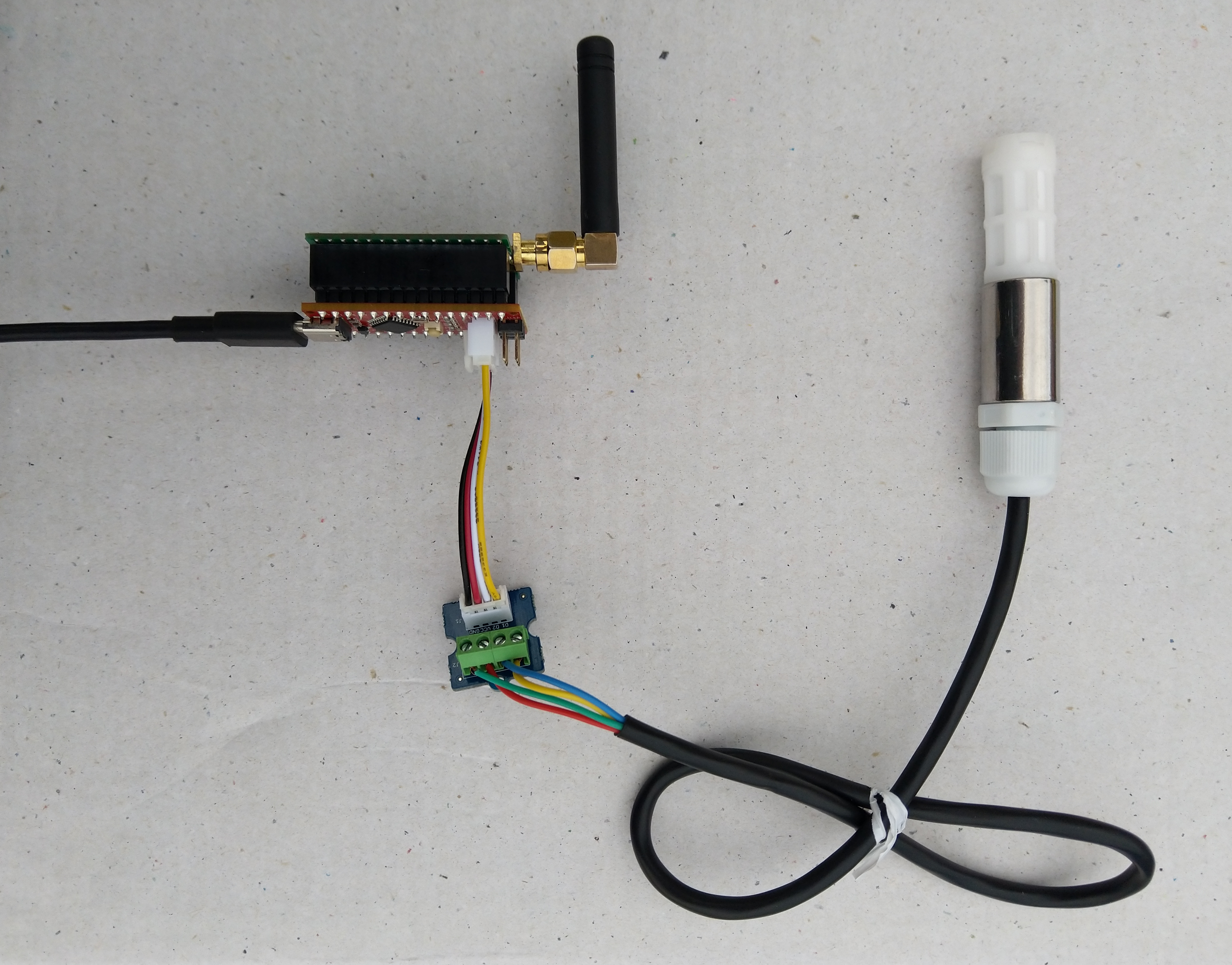

After getting basic connectivity for my Seeedstudio LoRa-E5 Development Kit and Fezduino test rig working, I wanted to see if I could get the device connected to The Things Industries (TTI) via the RAK7258 WisGate Edge Lite on the shelf in my office.

My Over the Air Activation (OTAA) implementation is very “nasty”: it assumes there are no timeouts or failures, and it only sends one hex-encoded message, “48656c6c6f204c6f526157414e”, which is “Hello LoRaWAN”. The code just steps sequentially through the commands needed to join the TTI network, with a fixed delay after each one is sent.

```csharp
public class Program
{
#if TINYCLR_V2_FEZDUINO
    private static string SerialPortId = SC20100.UartPort.Uart5;
#endif
    private const string AppKey = "................................";

    //txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes($"AT+ID=AppEui,{AppEui}\r\n"));
    //private const string AppEui = "................";

    //txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes($"AT+ID=AppEui,\"{AppEui}\"\r\n"));
    private const string AppEui = ".. .. .. .. .. .. .. ..";

    private const byte messagePort = 1;

    //private const string payload = "48656c6c6f204c6f526157414e"; // Hello LoRaWAN
    private const string payload = "01020304"; // AQIDBA==
    //private const string payload = "04030201"; // BAMCAQ==

    public static void Main()
    {
        UartController serialDevice;
        int txByteCount;
        int rxByteCount;

        Debug.WriteLine("devMobile.IoT.SeeedE5.NetworkJoinOTAA starting");

        try
        {
            serialDevice = UartController.FromName(SerialPortId);

            serialDevice.SetActiveSettings(new UartSetting()
            {
                BaudRate = 9600,
                Parity = UartParity.None,
                StopBits = UartStopBitCount.One,
                Handshaking = UartHandshake.None,
                DataBits = 8
            });

            serialDevice.Enable();

            // Set the Region to AS923
            txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes("AT+DR=AS923\r\n"));
            Debug.WriteLine($"TX: DR {txByteCount} bytes");
            Thread.Sleep(500);

            // Read the response
            rxByteCount = serialDevice.BytesToRead;
            if (rxByteCount > 0)
            {
                byte[] rxBuffer = new byte[rxByteCount];
                serialDevice.Read(rxBuffer);
                Debug.WriteLine($"RX :{UTF8Encoding.UTF8.GetString(rxBuffer)}");
            }

            // Set the Join mode
            txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes("AT+MODE=LWOTAA\r\n"));
            Debug.WriteLine($"TX: MODE {txByteCount} bytes");
            Thread.Sleep(500);

            // Read the response
            rxByteCount = serialDevice.BytesToRead;
            if (rxByteCount > 0)
            {
                byte[] rxBuffer = new byte[rxByteCount];
                serialDevice.Read(rxBuffer);
                Debug.WriteLine($"RX :{UTF8Encoding.UTF8.GetString(rxBuffer)}");
            }

            // Set the appEUI
            txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes($"AT+ID=AppEui,\"{AppEui}\"\r\n"));
            Debug.WriteLine($"TX: ID=AppEui {txByteCount} bytes");
            Thread.Sleep(500);

            // Read the response
            rxByteCount = serialDevice.BytesToRead;
            if (rxByteCount > 0)
            {
                byte[] rxBuffer = new byte[rxByteCount];
                serialDevice.Read(rxBuffer);
                Debug.WriteLine($"RX :{UTF8Encoding.UTF8.GetString(rxBuffer)}");
            }

            // Set the appKey
            txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes($"AT+KEY=APPKEY,{AppKey}\r\n"));
            Debug.WriteLine($"TX: KEY=APPKEY {txByteCount} bytes");
            Thread.Sleep(500);

            // Read the response
            rxByteCount = serialDevice.BytesToRead;
            if (rxByteCount > 0)
            {
                byte[] rxBuffer = new byte[rxByteCount];
                serialDevice.Read(rxBuffer);
                Debug.WriteLine($"RX :{UTF8Encoding.UTF8.GetString(rxBuffer)}");
            }

            // Set the PORT
            txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes($"AT+PORT={messagePort}\r\n"));
            Debug.WriteLine($"TX: PORT {txByteCount} bytes");
            Thread.Sleep(500);

            // Read the response
            rxByteCount = serialDevice.BytesToRead;
            if (rxByteCount > 0)
            {
                byte[] rxBuffer = new byte[rxByteCount];
                serialDevice.Read(rxBuffer);
                Debug.WriteLine($"RX :{UTF8Encoding.UTF8.GetString(rxBuffer)}");
            }

            // Join the network
            txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes("AT+JOIN\r\n"));
            Debug.WriteLine($"TX: JOIN {txByteCount} bytes");
            Thread.Sleep(10000);

            // Read the response
            rxByteCount = serialDevice.BytesToRead;
            if (rxByteCount > 0)
            {
                byte[] rxBuffer = new byte[rxByteCount];
                serialDevice.Read(rxBuffer);
                Debug.WriteLine($"RX :{UTF8Encoding.UTF8.GetString(rxBuffer)}");
            }

            while (true)
            {
                // Unconfirmed message
                txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes($"AT+MSGHEX=\"{payload}\"\r\n"));
                Debug.WriteLine($"TX: MSGHEX {txByteCount} bytes");

                // Confirmed message
                //txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes($"AT+CMSGHEX=\"{payload}\"\r\n"));
                //Debug.WriteLine($"TX: CMSGHEX {txByteCount} bytes");

                Thread.Sleep(10000);

                // Read the response
                rxByteCount = serialDevice.BytesToRead;
                if (rxByteCount > 0)
                {
                    byte[] rxBuffer = new byte[rxByteCount];
                    serialDevice.Read(rxBuffer);
                    Debug.WriteLine($"RX :{UTF8Encoding.UTF8.GetString(rxBuffer)}");
                }

                Thread.Sleep(30000);
            }
        }
        catch (Exception ex)
        {
            Debug.WriteLine(ex.Message);
        }
    }
}
```
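The `payload` constants in the listing are hex strings, and the trailing comments look like the Base64 form of the same bytes. As a minimal sketch of how those values relate, the helper below converts text to hex and hex to Base64. The `PayloadEncoding` class and its method names are hypothetical (they are not part of the original program), and the code targets desktop .NET rather than TinyCLR.

```csharp
using System;
using System.Text;

// Hypothetical helper, not part of the original program.
public static class PayloadEncoding
{
    // Encodes text as a lowercase hex string, two characters per UTF-8 byte,
    // which is the shape of the payload passed to AT+MSGHEX in the listing.
    public static string ToHex(string text)
    {
        byte[] bytes = Encoding.UTF8.GetBytes(text);
        var hex = new StringBuilder(bytes.Length * 2);

        foreach (byte b in bytes)
        {
            hex.Append(b.ToString("x2"));
        }

        return hex.ToString();
    }

    // Converts a hex string back to bytes and returns their Base64 form.
    public static string HexToBase64(string hex)
    {
        if (hex.Length % 2 != 0)
        {
            throw new ArgumentException("Hex string must have an even length.", nameof(hex));
        }

        byte[] bytes = new byte[hex.Length / 2];

        for (int i = 0; i < bytes.Length; i++)
        {
            bytes[i] = Convert.ToByte(hex.Substring(i * 2, 2), 16);
        }

        return Convert.ToBase64String(bytes);
    }
}
```

With these helpers, `PayloadEncoding.ToHex("Hello LoRaWAN")` gives `48656c6c6f204c6f526157414e`, and `PayloadEncoding.HexToBase64("01020304")` gives `AQIDBA==`, matching the comment next to the payload constant.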

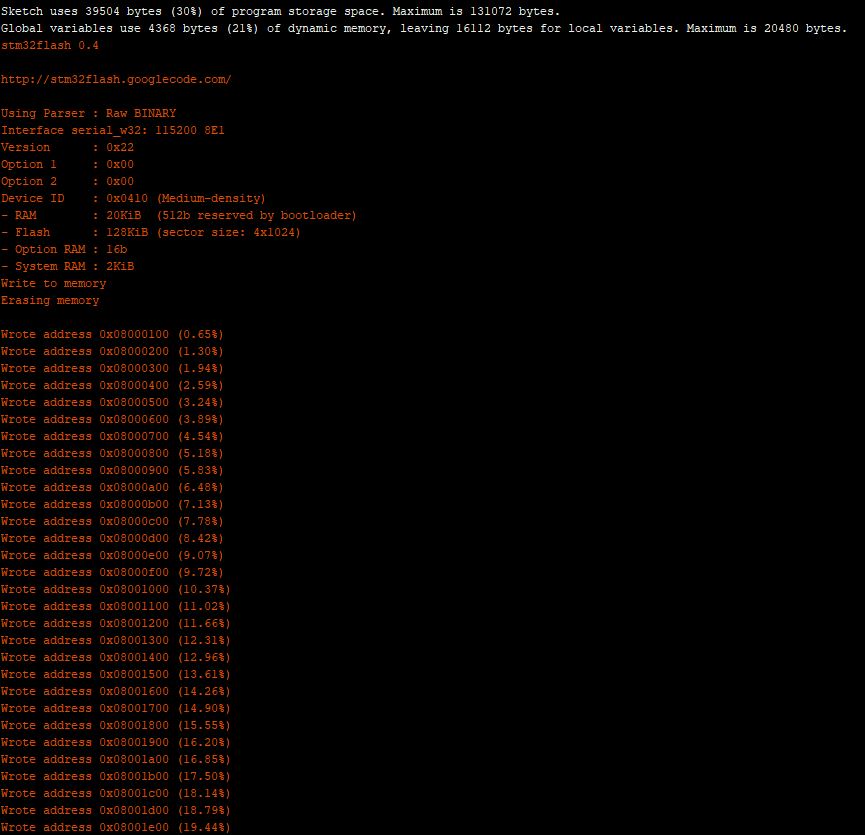

The code is not suitable for production but it confirmed my software and hardware configuration worked.

```
The thread '<No Name>' (0x2) has exited with code 0 (0x0).
devMobile.IoT.SeeedE5.NetworkJoinOTAA starting
TX: DR 13 bytes
RX :+DR: AS923

TX: MODE 16 bytes
RX :+MODE: LWOTAA

TX: ID=AppEui 40 bytes
RX :+ID: AppEui, ..:..:.:.:.:.:.:.

TX: KEY=APPKEY 48 bytes
RX :+KEY: APPKEY ................................

TX: PORT 11 bytes
RX :+PORT: 1

TX: JOIN 9 bytes
RX :+JOIN: Start
+JOIN: NORMAL
+JOIN: Network joined
+JOIN: NetID 000013 DevAddr ..:..:..:..
+JOIN: Done

TX: MSGHEX 22 bytes
RX :+MSGHEX: Start
+MSGHEX: FPENDING
+MSGHEX: RXWIN1, RSSI -41, SNR 9.0
+MSGHEX: Done

TX: MSGHEX 22 bytes
RX :+MSGHEX: Start
+MSGHEX: Done
```
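In the output above, the join and send responses each finish with a `+JOIN: Done` or `+MSGHEX: Done` line. One way to replace the fixed `Thread.Sleep` delays would be to poll until that line arrives or a timeout expires. The sketch below is an assumption about how that could look, not the original code. `AtResponseReader`, `TryReadUntil`, and `JoinSucceeded` are hypothetical names, and the reader is passed in as a delegate so the logic can be exercised without a serial port. It is written against desktop .NET, so on TinyCLR some types may need substituting.

```csharp
using System;
using System.Diagnostics;
using System.Text;
using System.Threading;

// Hypothetical helper, not part of the original program.
public static class AtResponseReader
{
    // Polls readAvailable (which returns whatever text has arrived since the
    // last call, or an empty string) until the accumulated response contains
    // terminator or the timeout elapses. Returns true if terminator was seen.
    public static bool TryReadUntil(Func<string> readAvailable, string terminator,
        TimeSpan timeout, out string response)
    {
        var received = new StringBuilder();
        var stopwatch = Stopwatch.StartNew();

        do
        {
            received.Append(readAvailable());

            if (received.ToString().Contains(terminator))
            {
                response = received.ToString();
                return true;
            }

            Thread.Sleep(50); // Short pause between polls
        }
        while (stopwatch.Elapsed < timeout);

        response = received.ToString();
        return false;
    }

    // Checks a completed AT+JOIN response for the success line seen in the log.
    public static bool JoinSucceeded(string response)
    {
        return response.Contains("+JOIN: Network joined");
    }
}
```

On the device, the delegate would wrap the `BytesToRead` and `Read` calls from the listing, and the caller would treat a `false` return as a timeout instead of carrying on to the next command.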

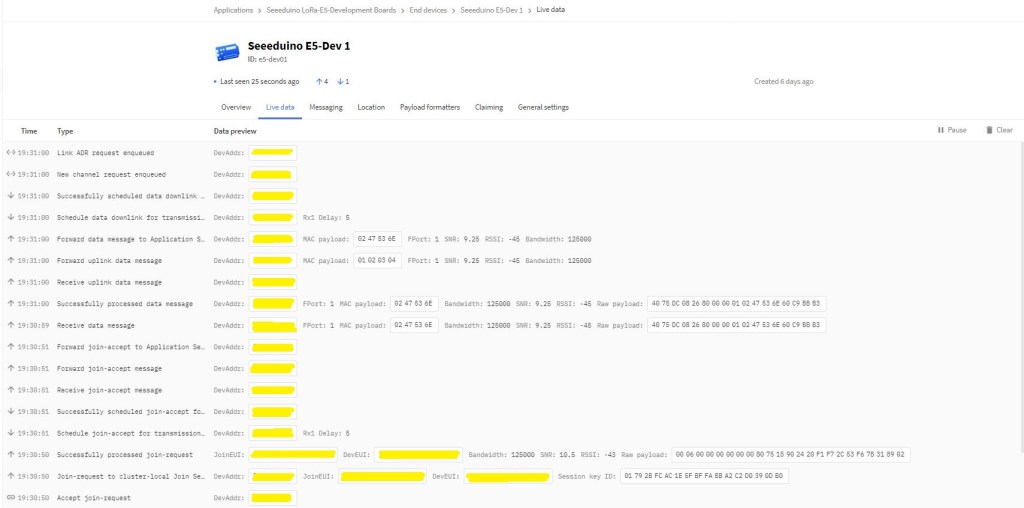

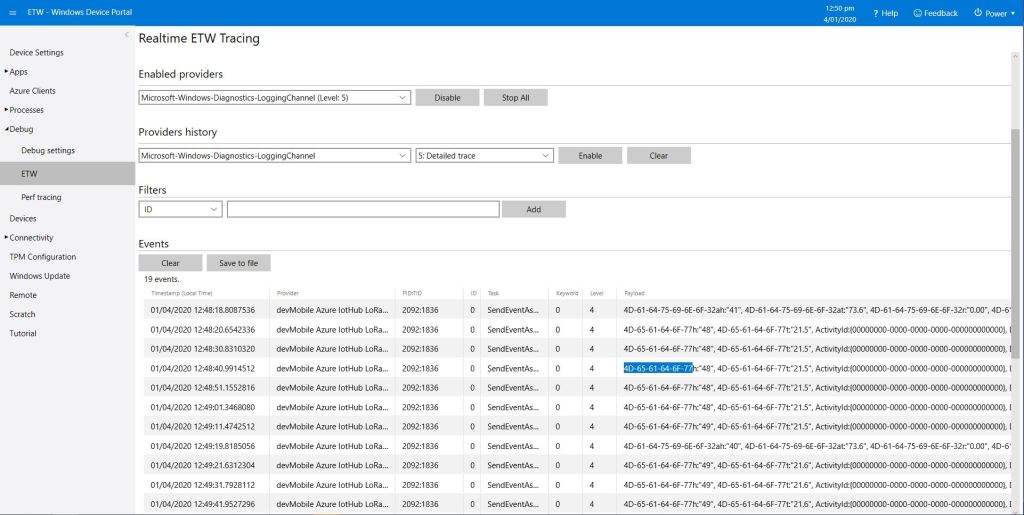

In the Visual Studio 2019 debug output I could see messages being sent and, after a short delay, they were visible in the TTI console.

I had an issue with how the AppEui parameter was handled:

```csharp
private const string AppKey = "................................";

//txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes($"AT+ID=AppEui,{AppEui}\r\n"));
//private const string AppEui = "................";

//txByteCount = serialDevice.Write(UTF8Encoding.UTF8.GetBytes($"AT+ID=AppEui,\"{AppEui}\"\r\n"));
private const string AppEui = ".. .. .. .. .. .. .. ..";
```

It appears that if the AppEui (or any other string parameter) contains spaces, it has to be enclosed in quotation marks.
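A small helper could apply that rule in one place rather than hand-escaping quotes in each command string. This is only a sketch based on the behaviour observed above. `AtCommand`, `FormatParameter`, and `SetId` are hypothetical names, and the EUI values in the usage note are made-up placeholders.

```csharp
using System;

// Hypothetical helper, not part of the original program.
public static class AtCommand
{
    // Wraps the value in double quotes only when it contains a space,
    // following the behaviour observed with the AppEui parameter.
    public static string FormatParameter(string value)
    {
        return value.Contains(" ") ? "\"" + value + "\"" : value;
    }

    // Builds an AT+ID command line, terminated with CR LF as in the listing.
    public static string SetId(string idType, string value)
    {
        return "AT+ID=" + idType + "," + FormatParameter(value) + "\r\n";
    }
}
```

For example, `AtCommand.SetId("AppEui", "00 11 22 33 44 55 66 77")` produces `AT+ID=AppEui,"00 11 22 33 44 55 66 77"` followed by CR LF. That is 40 characters, the same length as the `TX: ID=AppEui 40 bytes` line in the debug output.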