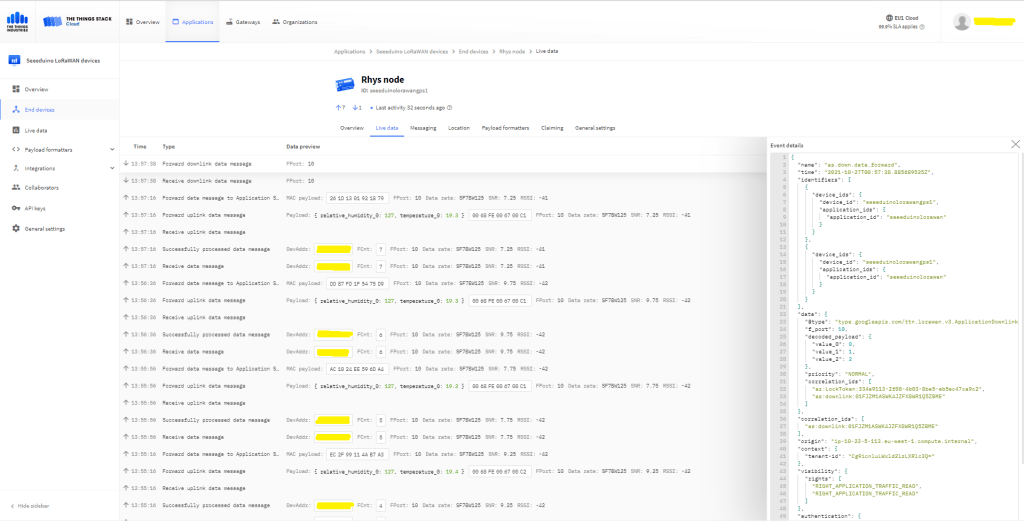

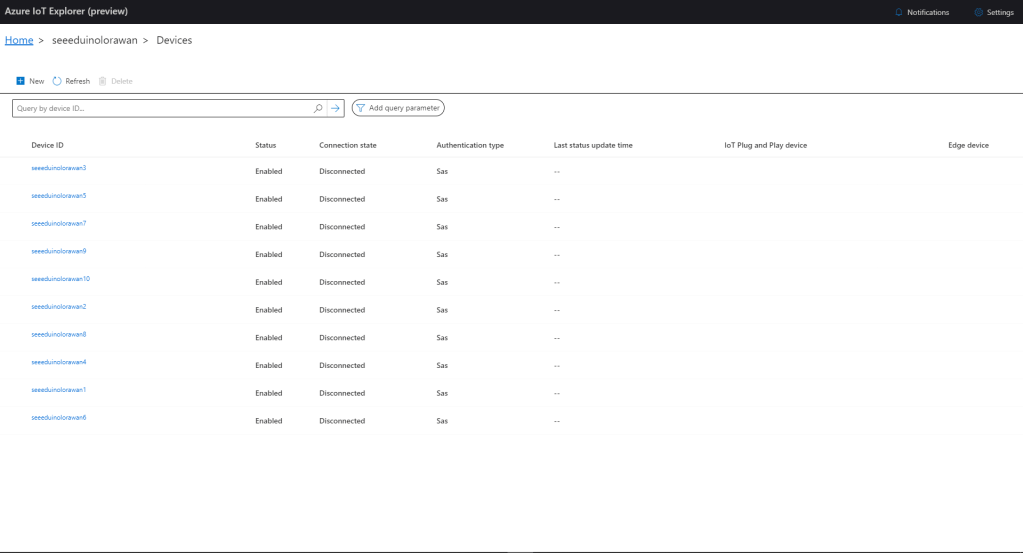

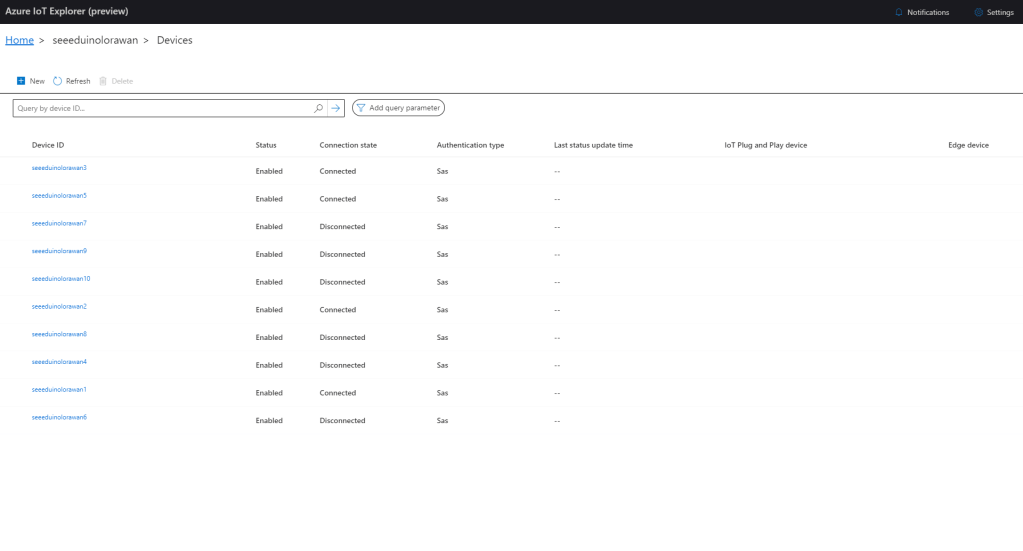

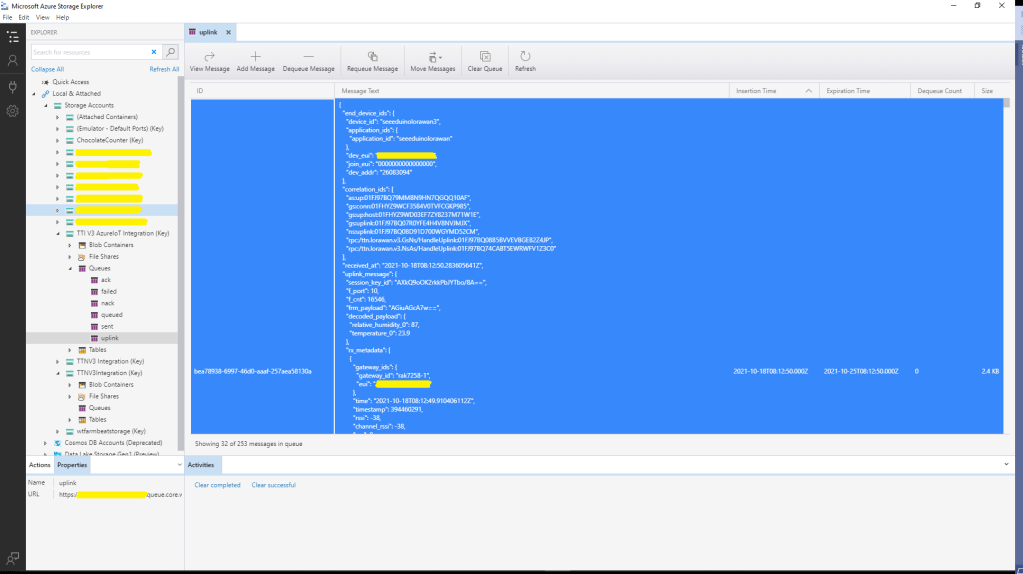

In a previous version of my The Things Industries (TTI) and The Things Network (TTN) connector, I queried The Things Stack (TTS) Application Programming Interface (API) to get a list of Applications and their Devices. With a large number of Applications and/or Devices this process could take tens of seconds. Application and Device creation and deletion then had to be tracked to keep the list of Azure IoT Hub DeviceClient connections current, which added significant complexity.

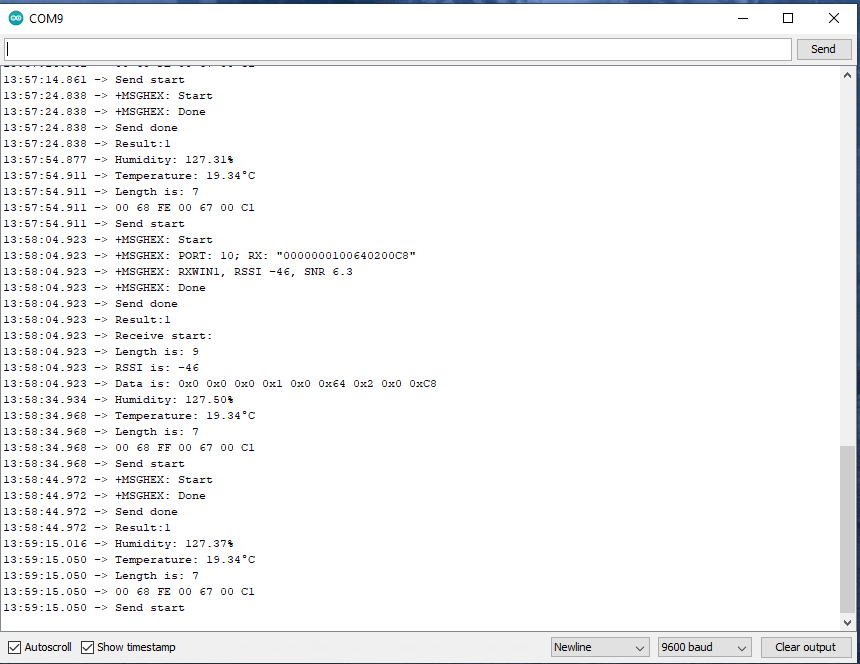

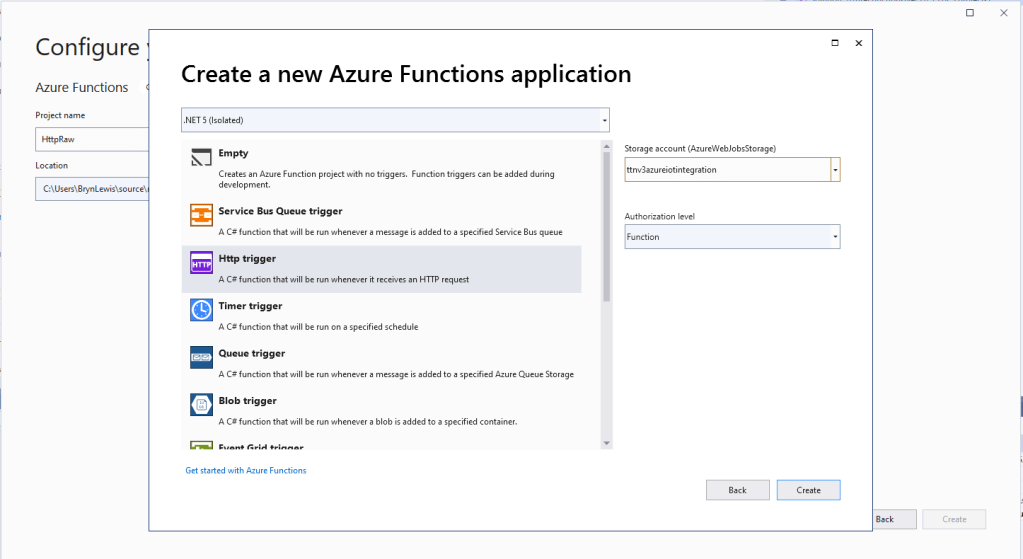

In this version, a downlink message can only be sent to a device after that device has sent an uplink message, because the connector creates the device's Azure IoT Hub connection when its first uplink arrives. To mitigate this, I'm looking at adding an Azure Function which initiates a connection to the configured Azure IoT Hub for a specified device.

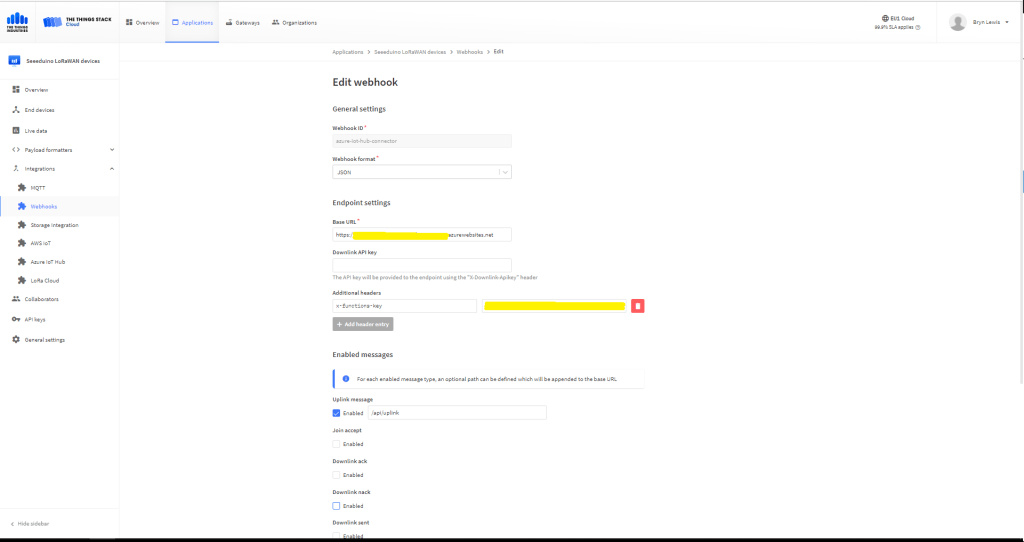

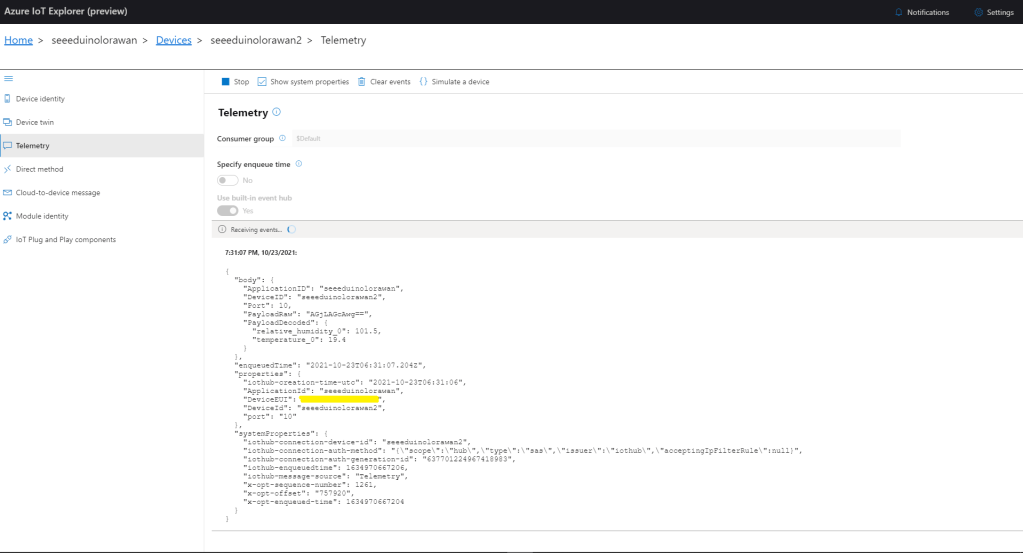

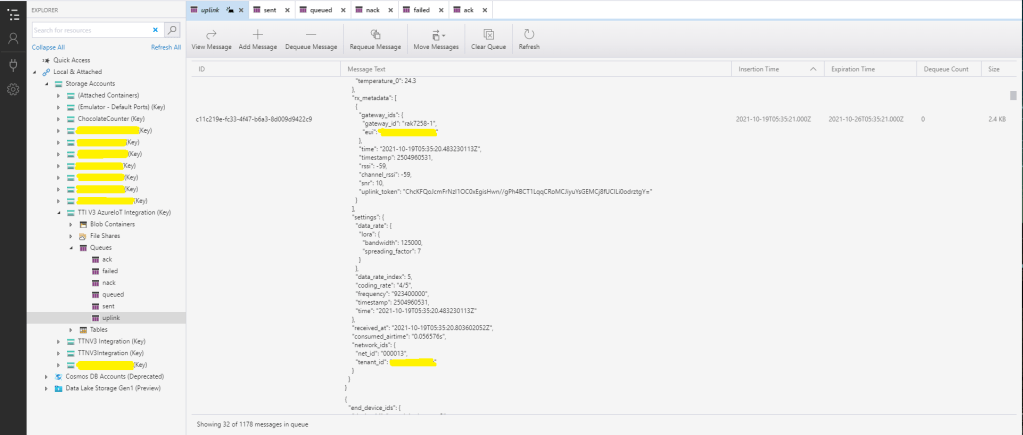

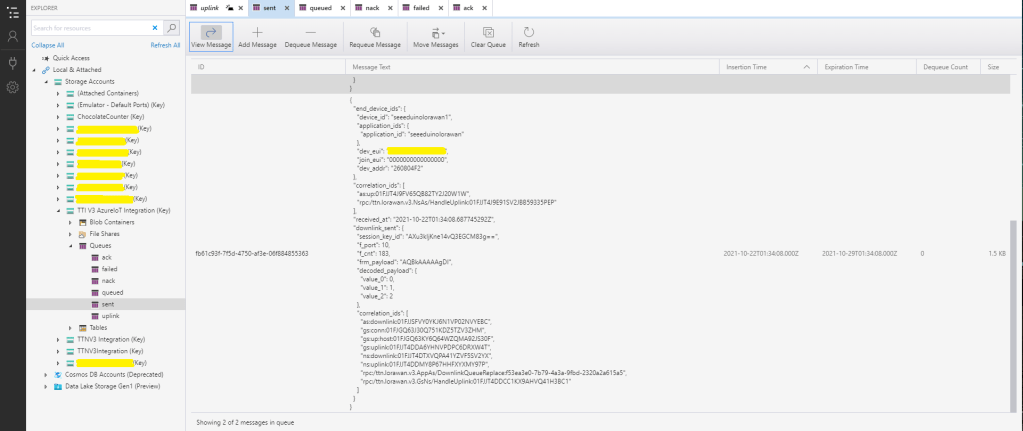

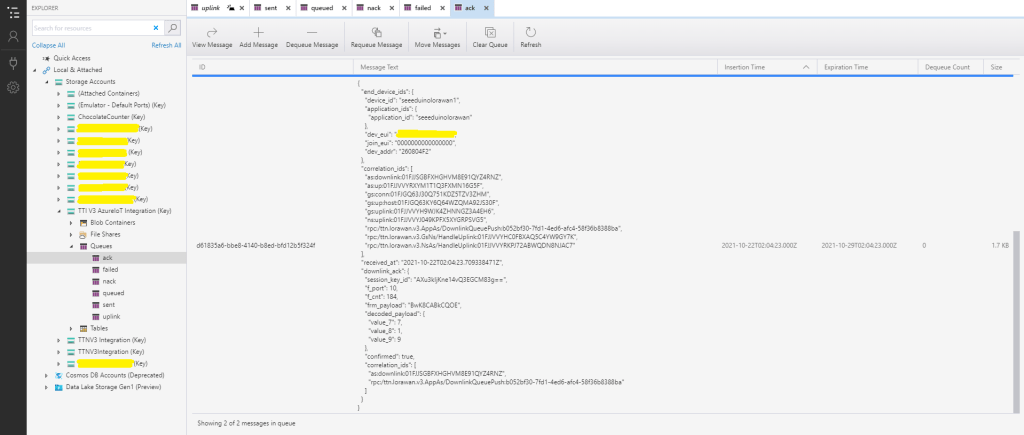

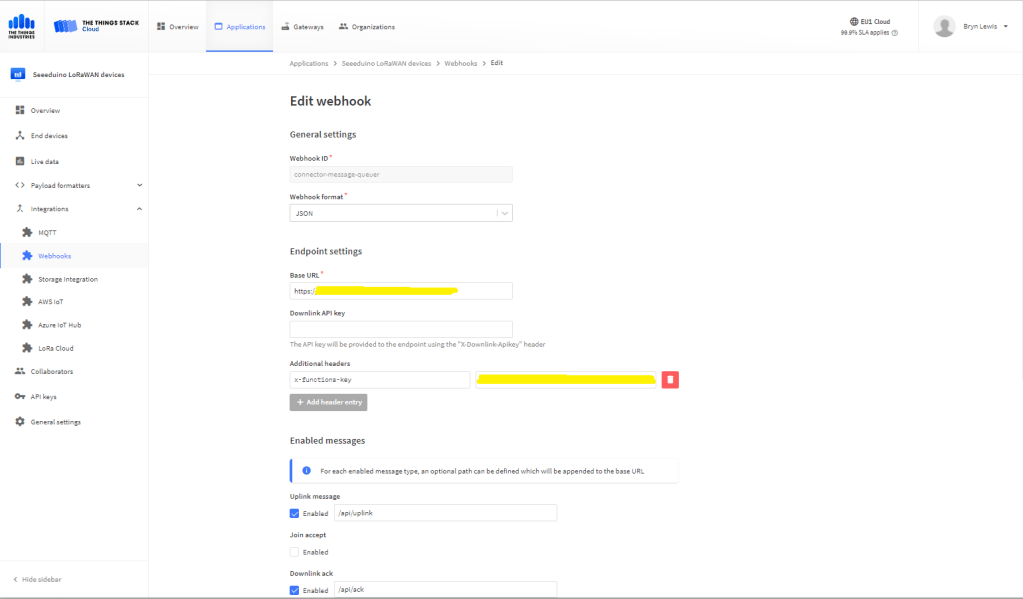

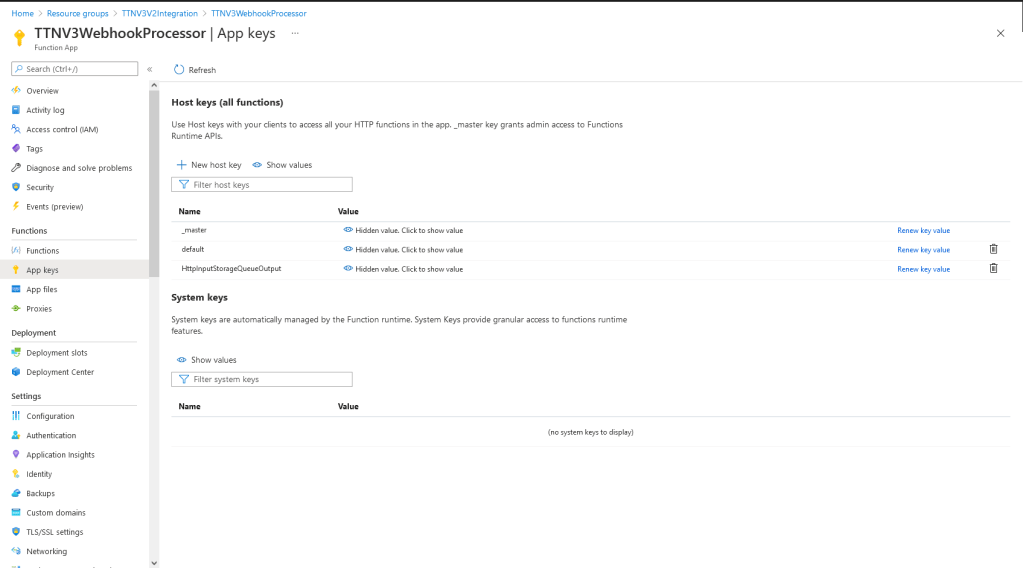

To send a TTN downlink message to a device, the minimum required information is the LoRaWAN port number (specified in a custom property on the Azure IoT Hub cloud-to-device message), the device ID (from the uplink message payload, validated by a successful Azure IoT Hub connection), the application ID (also from the uplink message payload), and the webhook ID, webhook base URL and API key (stored in the connector configuration).
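The post doesn't show `AzureDownlinkMessage.PortTryGet`, which the downlink handler uses to read the port. A minimal sketch of what such a helper might look like follows. It assumes the port arrives as a decimal string in a custom property hypothetically named `Port` (the real property name isn't given), and, as an added assumption, it rejects values outside the LoRaWAN application port range 1 to 223. It takes an `IDictionary<string, string>`, the type of `Message.Properties` in the Azure IoT device SDK, so it can be exercised without an IoT Hub.

```csharp
using System.Collections.Generic;
using System.Globalization;

public static class DownlinkPort
{
    // Hypothetical custom property name; the connector's actual name isn't shown.
    public const string PropertyName = "Port";

    // LoRaWAN uses FPort 0 for MAC commands, 224 for the MAC layer test protocol and
    // reserves 225 to 255, so application downlinks are restricted here to 1 to 223.
    private const byte MinApplicationPort = 1;
    private const byte MaxApplicationPort = 223;

    public static bool TryGet(IDictionary<string, string> properties, out byte port)
    {
        port = 0;

        if (properties == null || !properties.TryGetValue(PropertyName, out var text))
        {
            return false;
        }

        // Integer style allows surrounding whitespace; negative values and values above 255 fail.
        if (!byte.TryParse(text, NumberStyles.Integer, CultureInfo.InvariantCulture, out byte value))
        {
            return false;
        }

        if (value < MinApplicationPort || value > MaxApplicationPort)
        {
            return false;
        }

        port = value;
        return true;
    }
}
```

In the connector this would be called as `DownlinkPort.TryGet(message.Properties, out byte port)`, with a failed lookup leading to `RejectAsync` as in the handler further down.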

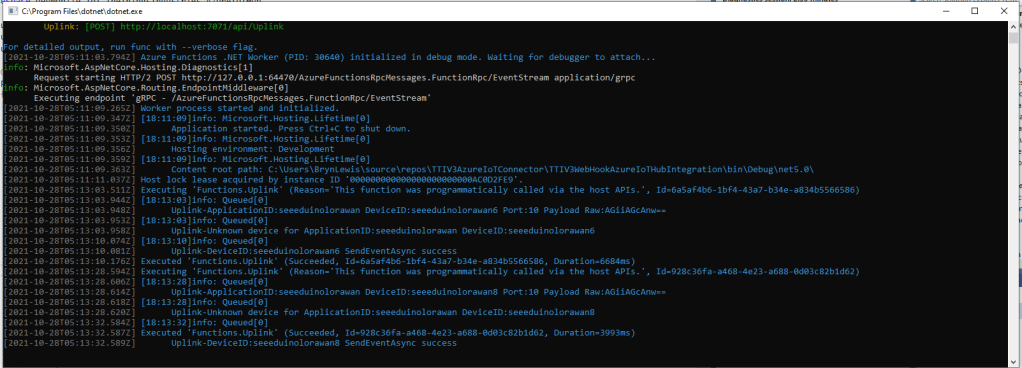

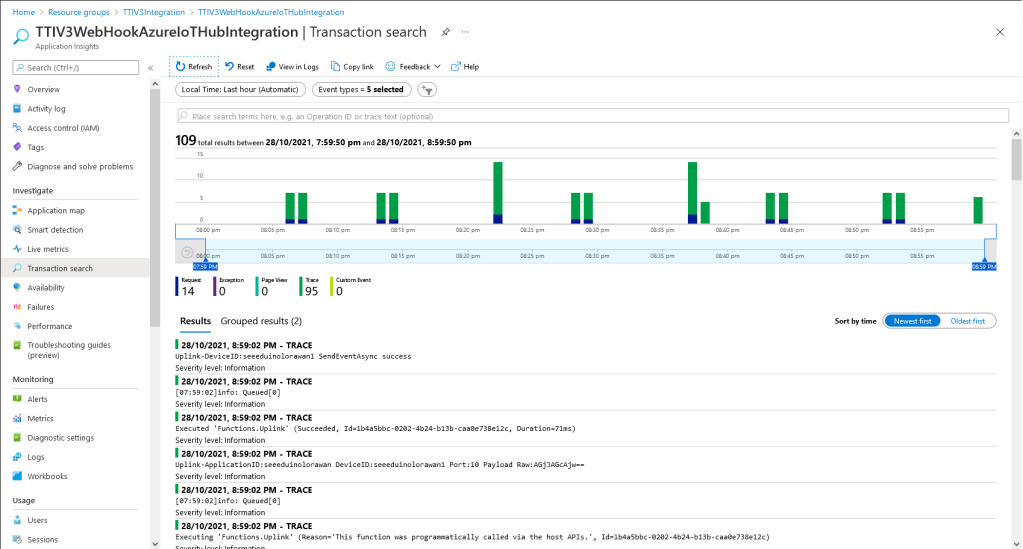

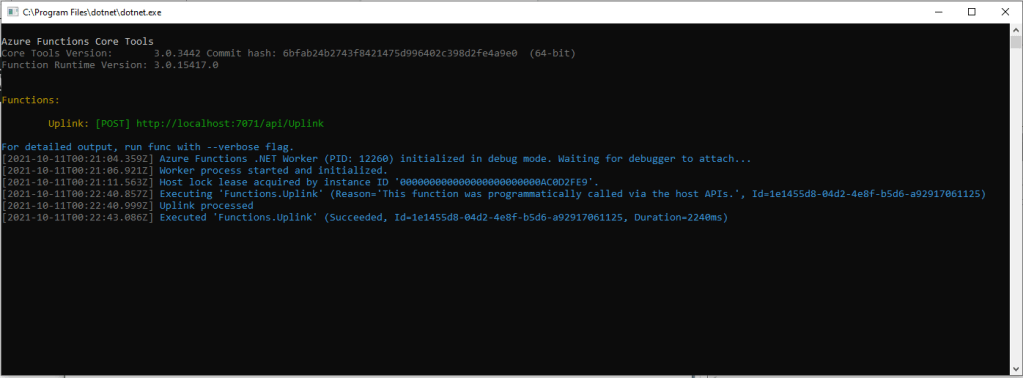

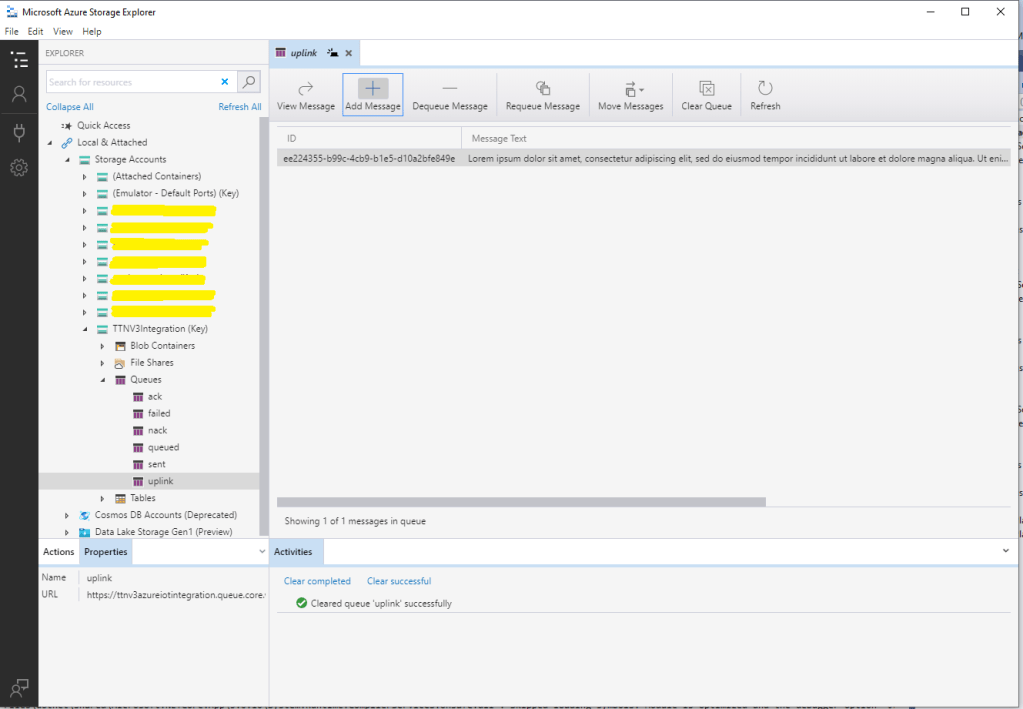

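When a LoRaWAN device sends its first uplink message, an Azure IoT Hub session is established for it using the Advanced Message Queuing Protocol (AMQP) with connection pooling enabled, so that many device sessions can be multiplexed over a small number of connections.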

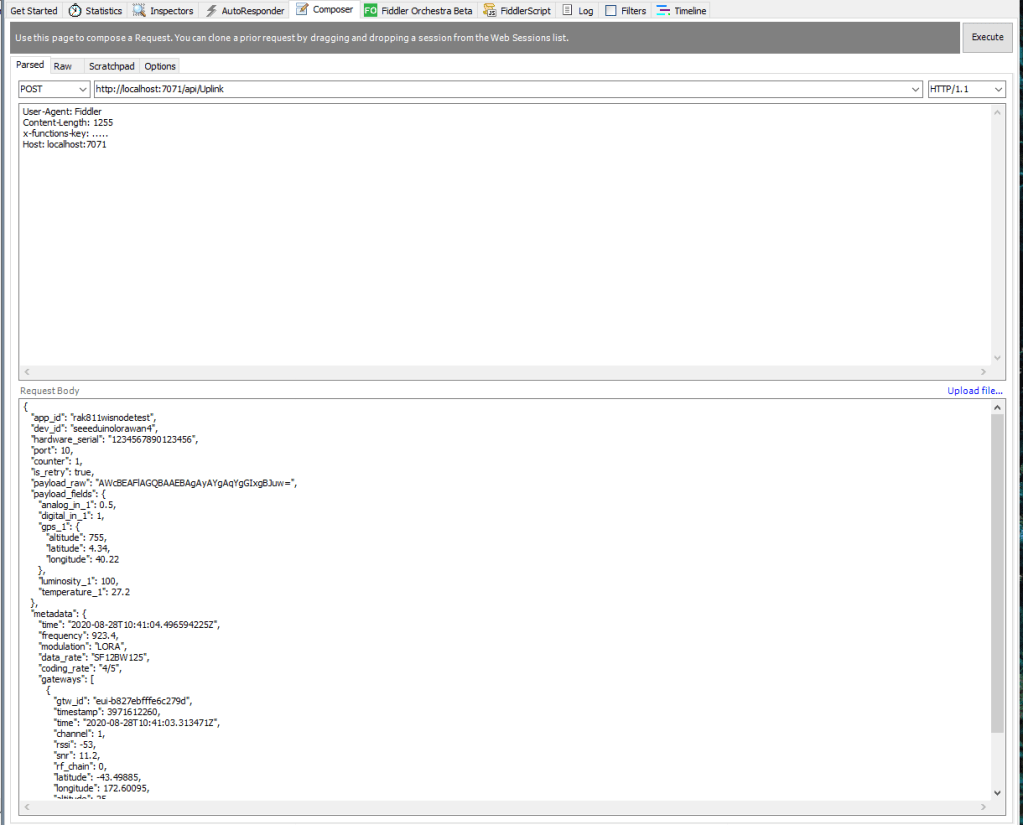

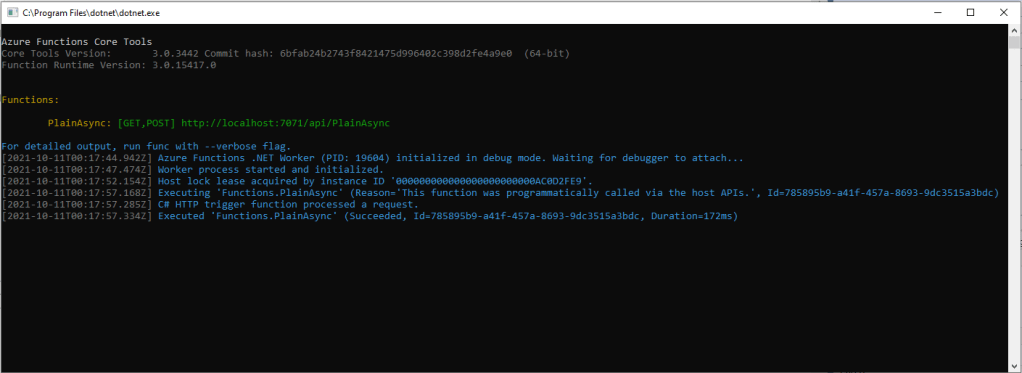

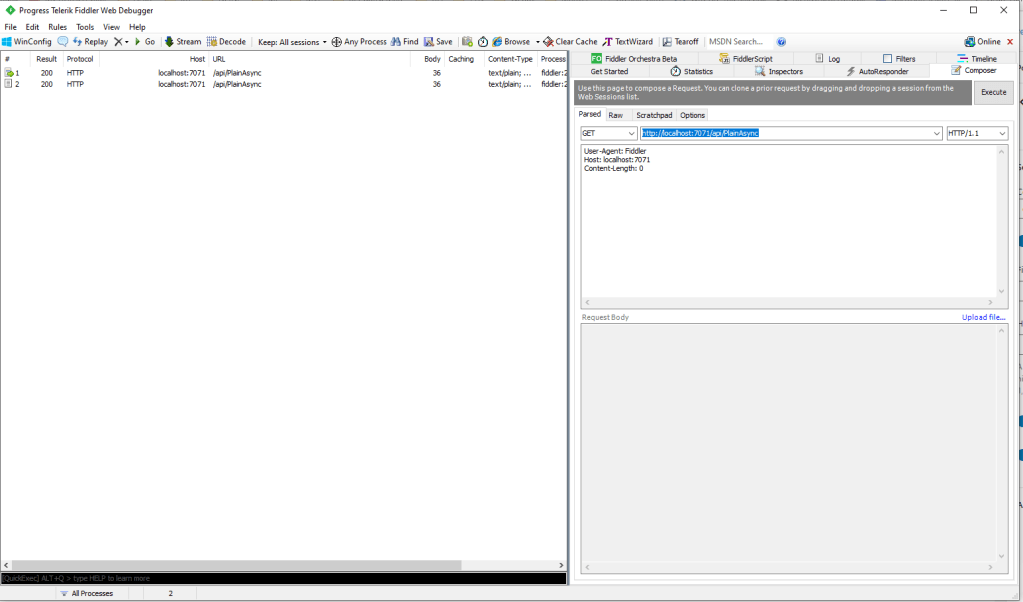

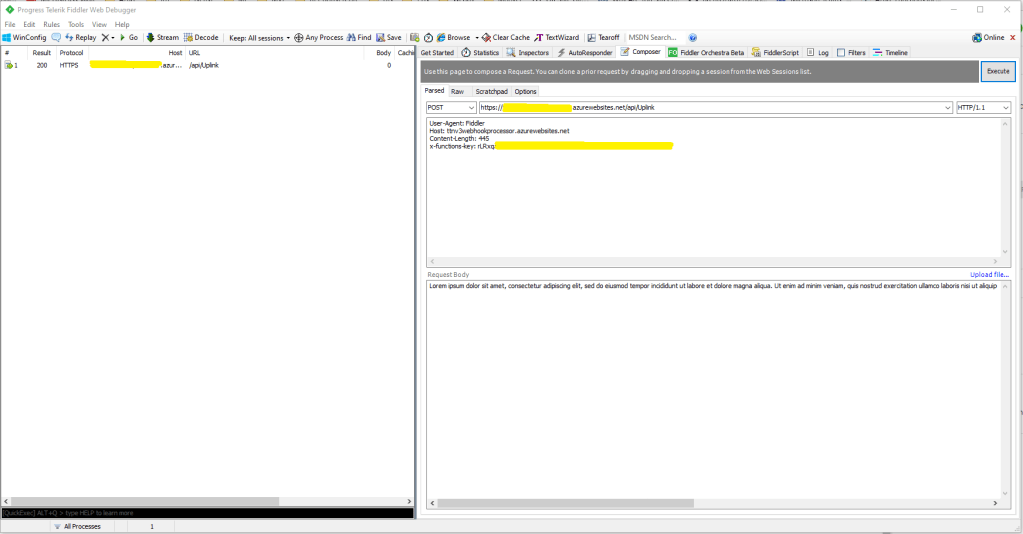

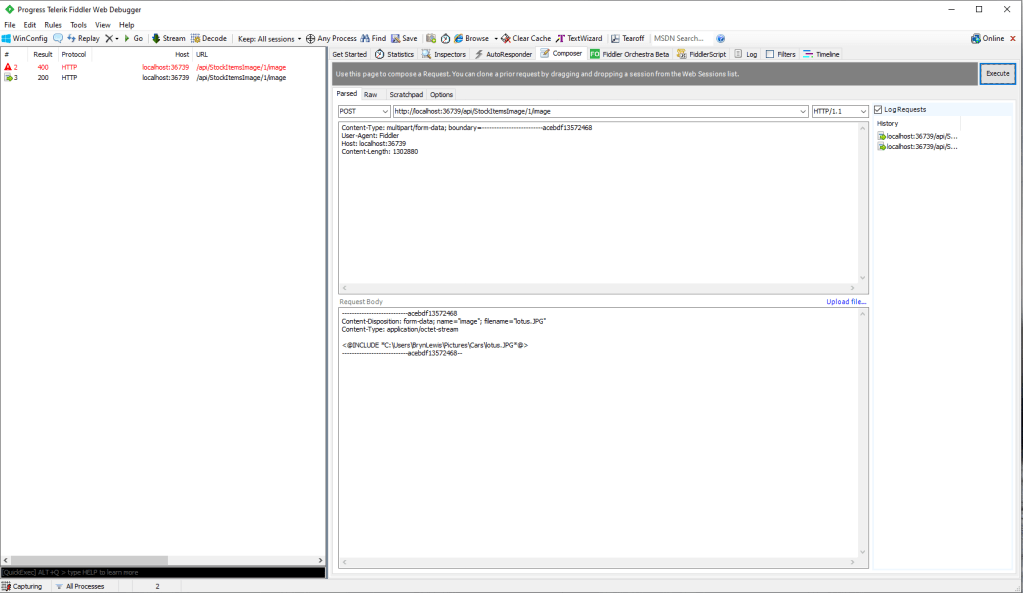

After simulating a TTN uplink message with Telerik Fiddler and a modified TTN sample payload, I used Azure IoT Explorer to send cloud-to-device messages to the Azure IoT Hub, which triggered the Connector to send a downlink message to the device.
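A test uplink can also be posted from code rather than Fiddler. The sketch below builds a heavily trimmed TTS v3 uplink webhook body containing only the device ID, application ID, port and Base64 `frm_payload` (real uplinks carry many more fields, such as `rx_metadata`), and wraps it in a POST request. The endpoint URL is hypothetical and would be wherever the connector's uplink function is hosted; which other fields the connector reads isn't shown in the post.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Text.Json;

public static class SimulatedUplink
{
    // Builds a trimmed-down TTS v3 uplink body with just the identifiers and payload.
    public static string BuildBody(string applicationId, string deviceId, byte fPort, byte[] payload)
    {
        var body = new
        {
            end_device_ids = new
            {
                device_id = deviceId,
                application_ids = new { application_id = applicationId },
            },
            uplink_message = new
            {
                f_port = fPort,
                frm_payload = Convert.ToBase64String(payload),
            },
        };
        return JsonSerializer.Serialize(body);
    }

    // Wraps the body in a JSON POST to a (hypothetical) connector endpoint.
    public static HttpRequestMessage BuildRequest(Uri endpoint, string body) =>
        new HttpRequestMessage(HttpMethod.Post, endpoint)
        {
            Content = new StringContent(body, Encoding.UTF8, "application/json"),
        };
}
```

Sending it is then a single `await httpClient.SendAsync(request)` against a locally running or deployed connector.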

BEWARE – TTN URLs and Azure IoT Hub device identifiers are case sensitive.
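Because of this, a device ID that differs only in letter case is treated as a different, usually unknown, device. A small diagnostic helper, hypothetical and not part of the connector, could flag such near misses when logging an unknown device by comparing the incoming ID against the known IDs (for example the keys of `_DeviceClients`) with both an ordinal and a case-insensitive comparison.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DeviceIdCase
{
    // Returns known IDs that match deviceId only when letter case is ignored.
    // Exact (ordinal) matches are excluded because they are the normal case.
    public static IReadOnlyList<string> CaseOnlyMatches(IEnumerable<string> knownIds, string deviceId) =>
        knownIds
            .Where(id => !string.Equals(id, deviceId, StringComparison.Ordinal)
                      && string.Equals(id, deviceId, StringComparison.OrdinalIgnoreCase))
            .ToList();
}
```

For example, `DeviceIdCase.CaseOnlyMatches(_DeviceClients.Keys, deviceId)` returning a non-empty list would suggest a casing mistake in a device registration or URL rather than a genuinely unknown device.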

...

if (!_DeviceClients.TryGetValue(deviceId, out DeviceClient deviceClient))

{

logger.LogInformation("Uplink-Unknown device for ApplicationID:{0} DeviceID:{1}", applicationId, deviceId);

deviceClient = DeviceClient.CreateFromConnectionString(_configuration.GetConnectionString("AzureIoTHub"), deviceId,

new ITransportSettings[]

{

new AmqpTransportSettings(TransportType.Amqp_Tcp_Only)

{

AmqpConnectionPoolSettings = new AmqpConnectionPoolSettings()

{

Pooling = true,

}

}

});

try

{

await deviceClient.OpenAsync();

}

catch (DeviceNotFoundException)

{

logger.LogWarning("Uplink-Unknown DeviceID:{0}", deviceId);

return req.CreateResponse(HttpStatusCode.NotFound);

}

if (!_DeviceClients.TryAdd(deviceId, deviceClient))

{

logger.LogWarning("Uplink-TryAdd failed for ApplicationID:{0} DeviceID:{1}", applicationId, deviceId);

return req.CreateResponse(HttpStatusCode.Conflict);

}

Models.AzureIoTHubReceiveMessageHandlerContext context = new Models.AzureIoTHubReceiveMessageHandlerContext()

{

DeviceId = deviceId,

ApplicationId = applicationId,

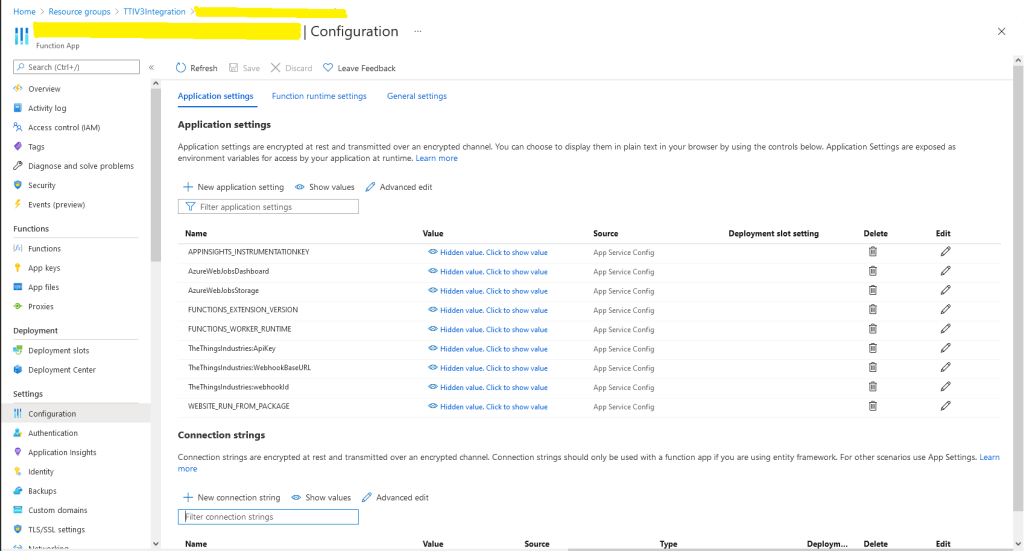

WebhookId = _configuration.GetSection("TheThingsIndustries").GetSection("WebhookId").Value,

WebhookBaseURL = _configuration.GetSection("TheThingsIndustries").GetSection("WebhookBaseURL").Value,

ApiKey = _configuration.GetSection("TheThingsIndustries").GetSection("APiKey").Value,

};

await deviceClient.SetReceiveMessageHandlerAsync(AzureIoTHubClientReceiveMessageHandler, context);

await deviceClient.SetMethodDefaultHandlerAsync(AzureIoTHubClientDefaultMethodHandler, context);

}

...
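In the uplink code above, two uplinks for the same new device arriving at the same time can both miss in `TryGetValue`, both create and open a `DeviceClient`, and the loser of `TryAdd` returns 409 Conflict. One common alternative, not what the connector does, is to cache a `Lazy<Task<T>>` per device ID so concurrent callers share a single creation. The sketch below is generic over the client type so it runs without the Azure SDK; `DeviceClientCache` is a hypothetical name, and in the connector the factory would presumably wrap `CreateFromConnectionString`, `OpenAsync` and the handler registration.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Caches one client per device ID. Concurrent callers for the same ID await a single
// creation task instead of each creating (and opening) their own client.
public sealed class DeviceClientCache<TClient>
{
    private readonly ConcurrentDictionary<string, Lazy<Task<TClient>>> _clients =
        new ConcurrentDictionary<string, Lazy<Task<TClient>>>();
    private readonly Func<string, Task<TClient>> _createAndOpen;

    public DeviceClientCache(Func<string, Task<TClient>> createAndOpen) => _createAndOpen = createAndOpen;

    public async Task<TClient> GetOrCreateAsync(string deviceId)
    {
        // GetOrAdd can build more than one Lazy under contention, but only one is stored,
        // and ExecutionAndPublication guarantees its factory runs at most once.
        Lazy<Task<TClient>> entry = _clients.GetOrAdd(
            deviceId,
            id => new Lazy<Task<TClient>>(() => _createAndOpen(id), LazyThreadSafetyMode.ExecutionAndPublication));

        try
        {
            return await entry.Value.ConfigureAwait(false);
        }
        catch
        {
            // Drop the failed entry (e.g. device not registered) so a later uplink can retry.
            // Removing by key and value avoids evicting a newer entry added by another caller.
            _clients.TryRemove(new KeyValuePair<string, Lazy<Task<TClient>>>(deviceId, entry));
            throw;
        }
    }
}
```

The `TryRemove(KeyValuePair<,>)` overload needs .NET 5 or later. The trade-off is that a failed creation is visible to every caller waiting on it, which suits the "unknown device" case where all of them should get 404 anyway.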

An Azure IoT Hub can invoke direct methods (synchronous) or send messages (asynchronous) to a device for processing. The Azure IoT Hub DeviceClient has two methods, SetMethodDefaultHandlerAsync and SetReceiveMessageHandlerAsync, which register the handlers for direct methods and cloud-to-device messages respectively.

```csharp
private Task<MethodResponse> AzureIoTHubClientDefaultMethodHandler(MethodRequest methodRequest, object userContext)
{
    // Direct methods are only logged; nothing is forwarded to TTN.
    if (methodRequest.DataAsJson != null)
    {
        _logger.LogWarning("AzureIoTHubClientDefaultMethodHandler name:{0} payload:{1}", methodRequest.Name, methodRequest.DataAsJson);
    }
    else
    {
        _logger.LogWarning("AzureIoTHubClientDefaultMethodHandler name:{0} payload:NULL", methodRequest.Name);
    }

    // 404 tells the caller the method isn't supported. No await is needed,
    // so the completed task is returned directly.
    return Task.FromResult(new MethodResponse(404));
}
```

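After some experimentation with previous TTN connectors, I found the synchronous nature of direct methods didn’t work well with LoRaWAN’s “irregular” connectivity: a direct method call waits for the device to respond, but a Class A LoRaWAN device can only receive a downlink in the short receive windows that follow one of its uplinks. So currently direct methods are ignored and answered with a 404 status.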

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Text;
using System.Threading.Tasks;
using Microsoft.Azure.Devices.Client;
using Microsoft.Extensions.Logging;
using Newtonsoft.Json;
using Newtonsoft.Json.Linq;

public partial class Integration
{
    private async Task AzureIoTHubClientReceiveMessageHandler(Message message, object userContext)
    {
        try
        {
            Models.AzureIoTHubReceiveMessageHandlerContext receiveMessageHandlerContext = (Models.AzureIoTHubReceiveMessageHandlerContext)userContext;

            if (!_DeviceClients.TryGetValue(receiveMessageHandlerContext.DeviceId, out DeviceClient deviceClient))
            {
                _logger.LogWarning("Downlink-DeviceID:{0} unknown", receiveMessageHandlerContext.DeviceId);
                return;
            }

            using (message)
            {
                string payloadText = Encoding.UTF8.GetString(message.GetBytes()).Trim();

                // The LoRaWAN port comes from a custom property on the cloud-to-device message.
                if (!AzureDownlinkMessage.PortTryGet(message.Properties, out byte port))
                {
                    _logger.LogWarning("Downlink-Port property is invalid");
                    await deviceClient.RejectAsync(message);
                    return;
                }

                Models.Downlink downlink = new Models.Downlink()
                {
                    Port = port,
                };

                // Split over multiple lines in an attempt to improve readability. In this scenario a valid JSON string should start/end with {/} for an object or [/] for an array
                if ((payloadText.StartsWith("{") && payloadText.EndsWith("}"))
                    ||
                    ((payloadText.StartsWith("[") && payloadText.EndsWith("]"))))
                {
                    try
                    {
                        downlink.PayloadDecoded = JToken.Parse(payloadText);
                    }
                    catch (JsonReaderException)
                    {
                        downlink.PayloadRaw = payloadText;
                    }
                }
                else
                {
                    downlink.PayloadRaw = payloadText;
                }

                _logger.LogInformation("Downlink-IoT Hub DeviceID:{0} MessageID:{1} LockToken:{2} Port:{3}",
                    receiveMessageHandlerContext.DeviceId,
                    message.MessageId,
                    message.LockToken,
                    downlink.Port);

                Models.DownlinkPayload payload = new Models.DownlinkPayload()
                {
                    Downlinks = new List<Models.Downlink>()
                    {
                        downlink
                    }
                };

                string url = $"{receiveMessageHandlerContext.WebhookBaseURL}/{receiveMessageHandlerContext.ApplicationId}/webhooks/{receiveMessageHandlerContext.WebhookId}/devices/{receiveMessageHandlerContext.DeviceId}/down/replace";

                using (var client = new WebClient())
                {
                    client.Headers.Add(HttpRequestHeader.ContentType, "application/json");
                    client.Headers.Add("Authorization", $"Bearer {receiveMessageHandlerContext.ApiKey}");
                    // Asynchronous upload so the handler doesn't block a thread; throws WebException on a non-success status.
                    await client.UploadStringTaskAsync(new Uri(url), JsonConvert.SerializeObject(payload));
                }

                // TTN accepted the downlink, so remove the message from the IoT Hub queue.
                await deviceClient.CompleteAsync(message);

                _logger.LogInformation("Downlink-DeviceID:{0} LockToken:{1} success", receiveMessageHandlerContext.DeviceId, message.LockToken);
            }
        }
        catch (Exception ex)
        {
            _logger.LogError(ex, "Downlink-ReceiveMessage processing failed");
        }
    }
}
```

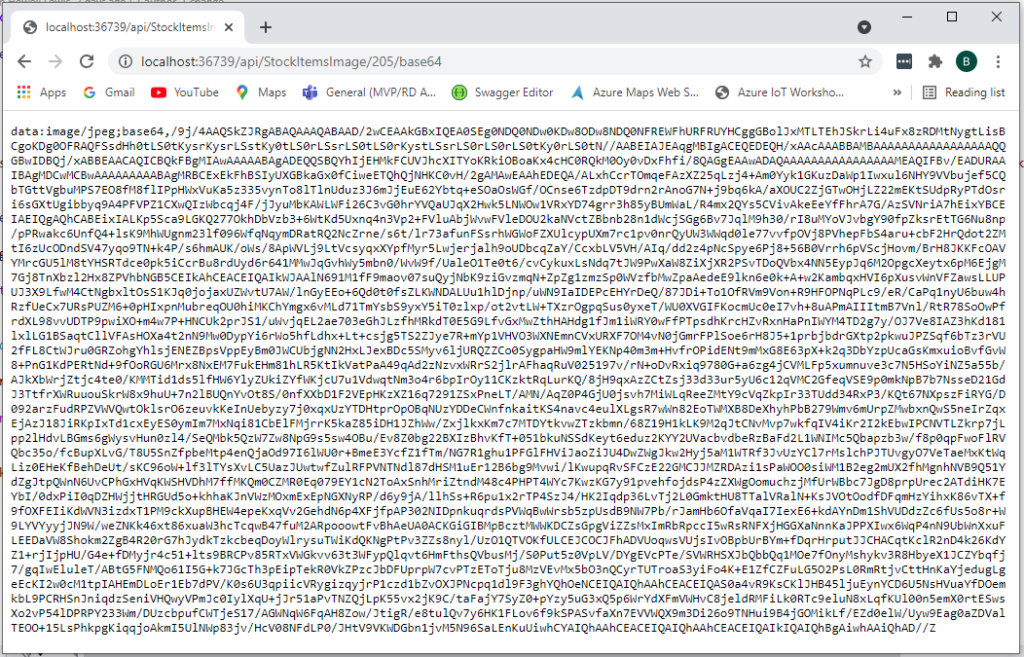

If the message body contains a valid JavaScript Object Notation (JSON) payload, it is “spliced” into the downlink message decoded_payload field; otherwise the message body is copied as-is into the frm_payload field, so it must already be Base64 encoded.
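The same decision can be sketched with `System.Text.Json` in place of the connector's Newtonsoft `JToken.Parse`. As an added assumption beyond the post, this version checks that a non-JSON body is valid Base64 before treating it as `frm_payload`, and returns `null` otherwise, so a malformed body could be rejected rather than forwarded to TTN. `DownlinkBodyParts` and `DownlinkBody.Classify` are hypothetical names.

```csharp
#nullable enable
using System;
using System.Text.Json;

// Hypothetical result type: exactly one of the two members is set.
public sealed record DownlinkBodyParts(JsonElement? DecodedPayload, string? FrmPayload);

public static class DownlinkBody
{
    // Returns null when the body is neither a JSON object/array nor valid Base64.
    public static DownlinkBodyParts? Classify(string body)
    {
        string text = body.Trim();

        // Same shape check as the connector: only {...} or [...] is tried as JSON.
        bool looksLikeJson = (text.StartsWith("{") && text.EndsWith("}"))
                          || (text.StartsWith("[") && text.EndsWith("]"));
        if (looksLikeJson)
        {
            try
            {
                using JsonDocument document = JsonDocument.Parse(text);
                // Clone so the element stays valid after the document is disposed.
                return new DownlinkBodyParts(document.RootElement.Clone(), null);
            }
            catch (JsonException)
            {
                // Not valid JSON after all; fall through to the Base64 check.
            }
        }

        // Added check (not in the connector): frm_payload must be Base64 text.
        byte[] buffer = new byte[text.Length];
        if (text.Length > 0 && Convert.TryFromBase64String(text, buffer, out _))
        {
            return new DownlinkBodyParts(null, text);
        }

        return null;
    }
}
```

A body such as `{"temperature": 21}` ends up in `DecodedPayload`, `AQI=` ends up in `FrmPayload`, and plain text such as `hello` yields `null`.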

The context passed to SetReceiveMessageHandlerAsync is used to construct the TTN downlink message payload and request URL (with the default queuing, message priority and confirmation options).
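For comparison, the same request can be built as an `HttpRequestMessage` for `HttpClient` instead of the obsolete `WebClient`. This is a sketch, not the connector's code: it assumes the configured webhook base URL ends at `.../api/v3/as/applications`, as the connector's URL format implies, and it sends only `f_port` and `frm_payload`, leaving priority and confirmation at the API's defaults. The `replace` flag reflects that the TTS webhook downlink API offers both `down/replace` (replace the device's downlink queue) and `down/push` (append to it). `TtsDownlinkRequest` is a hypothetical name.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json;

public static class TtsDownlinkRequest
{
    // Assumes webhookBaseUrl ends at ".../api/v3/as/applications", matching the connector's URL format.
    // "replace" swaps out anything already queued for the device; "push" appends to the queue.
    public static Uri BuildUri(string webhookBaseUrl, string applicationId, string webhookId, string deviceId, bool replace = true)
    {
        string operation = replace ? "replace" : "push";
        return new Uri(
            $"{webhookBaseUrl.TrimEnd('/')}/{Uri.EscapeDataString(applicationId)}" +
            $"/webhooks/{Uri.EscapeDataString(webhookId)}" +
            $"/devices/{Uri.EscapeDataString(deviceId)}/down/{operation}");
    }

    // One downlink with a Base64 frm_payload; priority and confirmed are omitted,
    // so the API's defaults apply.
    public static HttpRequestMessage Build(Uri uri, string apiKey, byte fPort, string frmPayloadBase64)
    {
        var body = new { downlinks = new[] { new { f_port = fPort, frm_payload = frmPayloadBase64 } } };

        var request = new HttpRequestMessage(HttpMethod.Post, uri)
        {
            Content = new StringContent(JsonSerializer.Serialize(body), Encoding.UTF8, "application/json"),
        };
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);
        return request;
    }
}
```

Sending it would then be `using HttpResponseMessage response = await httpClient.SendAsync(request);` followed by `response.EnsureSuccessStatusCode();`, ideally with one `HttpClient` shared across downlinks rather than one per message.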