As part of some security updates to one of the ASP.NET Core applications I work on, there were changes to how users are authorised and authenticated (A&A). We were looking at replacing our custom A&A (login names and passwords, plus an existing database) with ASP.NET Core Identity and a Custom Storage Provider. I downloaded the sample code from the GitHub AspNetCore.Docs repository and opened the project in Visual Studio 2022.

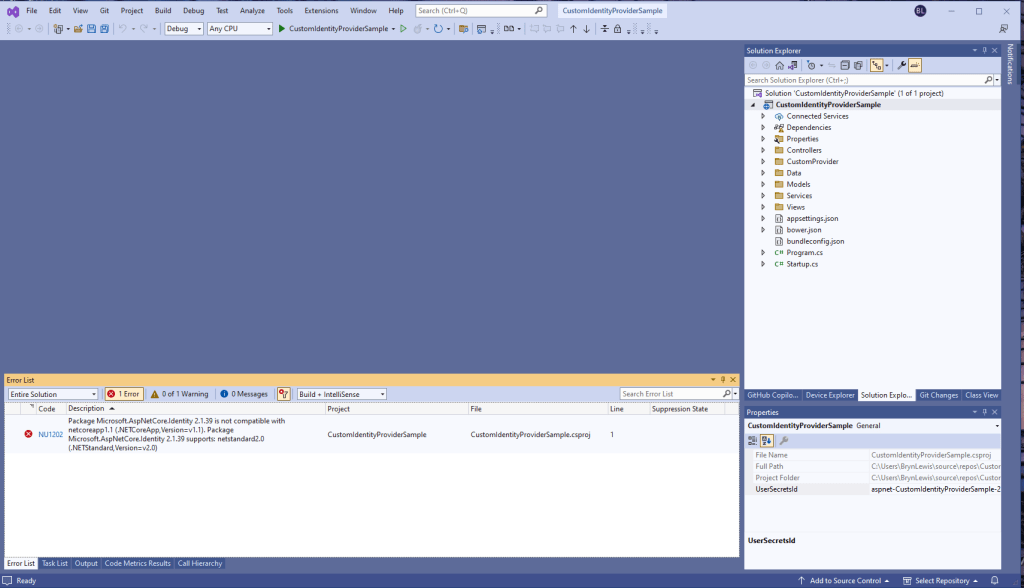

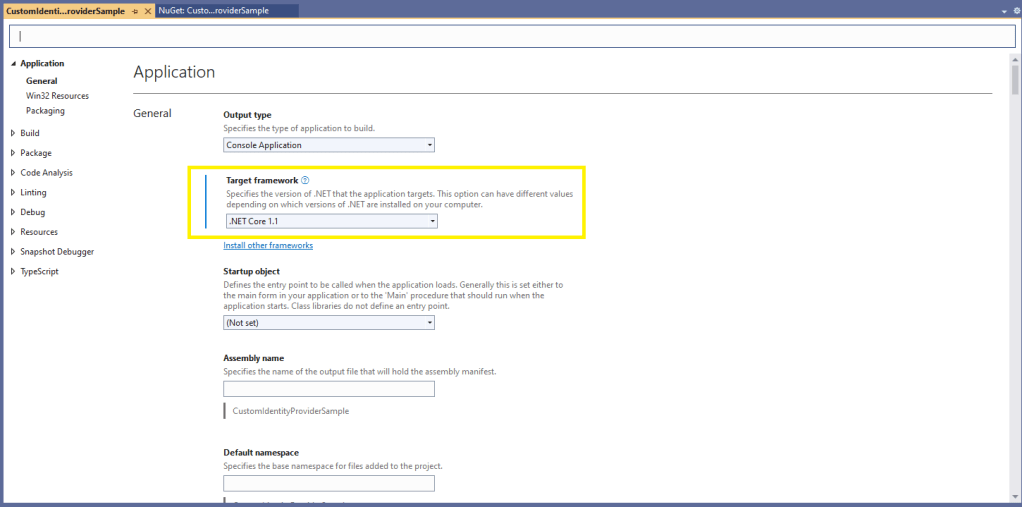

The Custom Storage Provider sample hadn’t been updated for years and targeted .NET Core 1.1, which was released in Q4 2016.

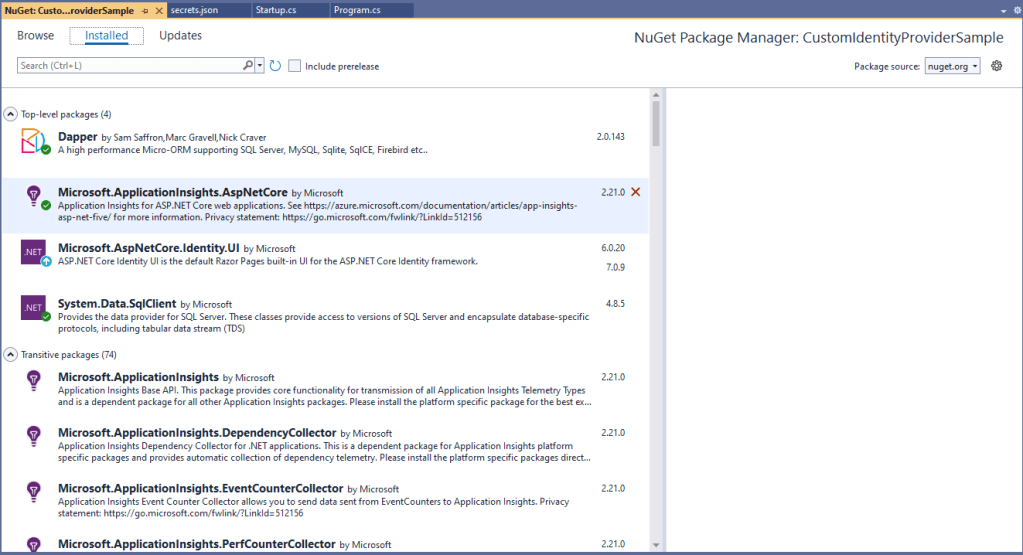

When I opened the project, there were errors and many of the NuGet packages were deprecated.

The sample code uses Dapper (a micro ORM) to access tables in a custom Microsoft SQL Server database, but there were also NuGet packages and code that referenced Entity Framework Core.

After several unsuccessful attempts at updating the NuGet packages, I started again from scratch.

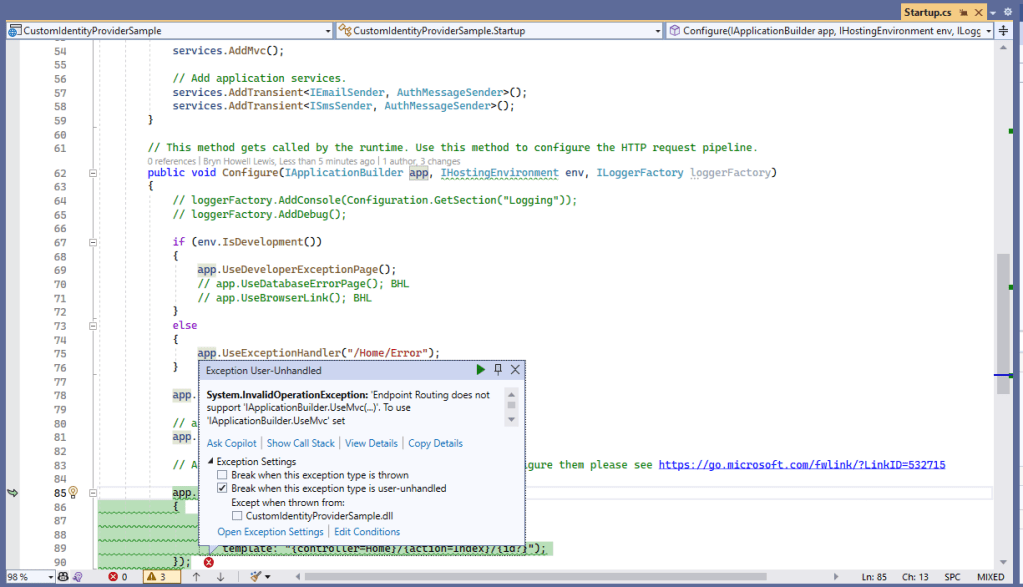

The code wouldn’t compile, so I started fixing issues (the first couple of attempts were very “hacky”). The UseDatabaseErrorPage method comes from EF Core, so I commented it out. The UseBrowserLink method is part of Browser Link support, which I decided not to use, so it was commented out as well, along with several other changes.

```csharp
...

namespace CustomIdentityProviderSample
{
    public class Startup
    {
        public Startup(IHostingEnvironment env)
        {
            var builder = new ConfigurationBuilder()
                .SetBasePath(env.ContentRootPath)
                .AddJsonFile("appsettings.json", optional: false, reloadOnChange: true)
                .AddJsonFile($"appsettings.{env.EnvironmentName}.json", optional: true);

            if (env.IsDevelopment())
            {
                // For more details on using the user secret store see https://go.microsoft.com/fwlink/?LinkID=532709
                builder.AddUserSecrets<Startup>();
            }

            builder.AddEnvironmentVariables();
            Configuration = builder.Build();
        }

        public IConfigurationRoot Configuration { get; }

        // This method gets called by the runtime. Use this method to add services to the container.
        public void ConfigureServices(IServiceCollection services)
        {
            // Add identity types
            services.AddIdentity<ApplicationUser, ApplicationRole>()
                .AddDefaultTokenProviders();

            // Identity Services
            services.AddTransient<IUserStore<ApplicationUser>, CustomUserStore>();
            services.AddTransient<IRoleStore<ApplicationRole>, CustomRoleStore>();

            string connectionString = Configuration.GetConnectionString("DefaultConnection");
            services.AddTransient<SqlConnection>(e => new SqlConnection(connectionString));
            services.AddTransient<DapperUsersTable>();

            services.AddMvc();

            // Add application services.
            services.AddTransient<IEmailSender, AuthMessageSender>();
            services.AddTransient<ISmsSender, AuthMessageSender>();
        }

        // This method gets called by the runtime. Use this method to configure the HTTP request pipeline.
        public void Configure(IApplicationBuilder app, IHostingEnvironment env, ILoggerFactory loggerFactory)
        {
            // loggerFactory.AddConsole(Configuration.GetSection("Logging"));
            // loggerFactory.AddDebug();

            if (env.IsDevelopment())
            {
                app.UseDeveloperExceptionPage();
                // app.UseDatabaseErrorPage(); BHL
                // app.UseBrowserLink(); BHL
            }
            else
            {
                app.UseExceptionHandler("/Home/Error");
            }

            app.UseStaticFiles();

            app.UseRouting(); // BHL

            // app.UseIdentity(); BHL
            app.UseAuthentication();
            app.UseAuthorization();

            // Add external authentication middleware below. To configure them please see https://go.microsoft.com/fwlink/?LinkID=532715

            app.UseMvc(routes =>
            {
                routes.MapRoute(
                    name: "default",
                    template: "{controller=Home}/{action=Index}/{id?}");
            });
        }
    }
}
```

This process was repeated many times until the Custom Storage Provider sample compiled, so I could run it in the Visual Studio 2022 debugger.

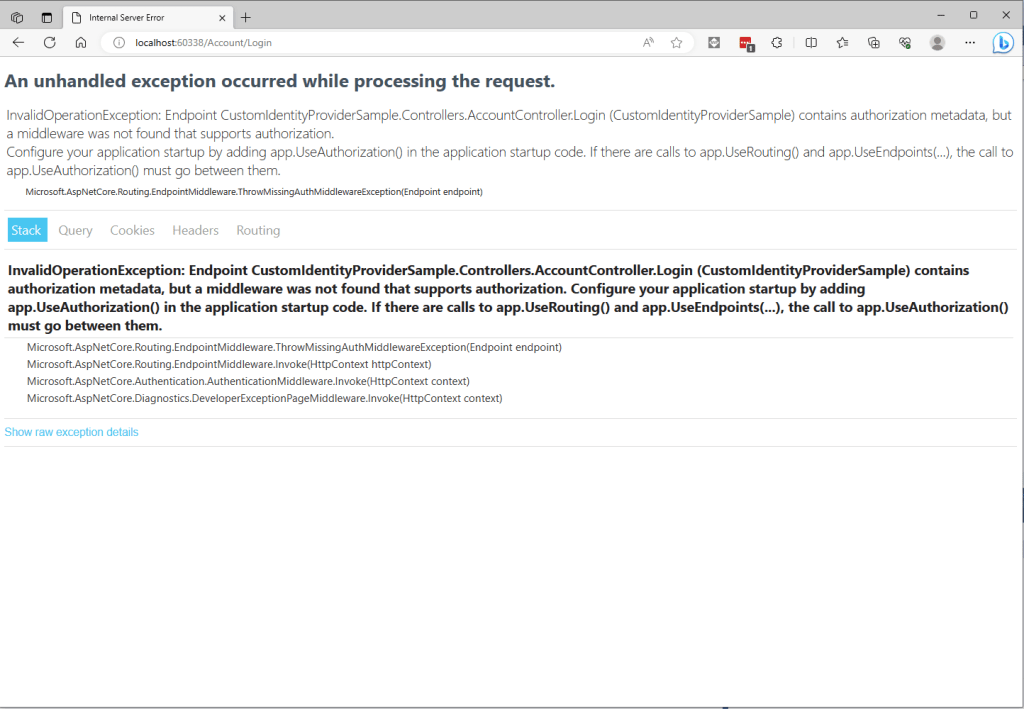

The Custom Storage Provider sample then failed because of changes to routing, among other things.

The Custom Storage Provider sample then failed because of changes to the A&A middleware.
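For reference, endpoint routing became the default in ASP.NET Core 3.0, and with it enabled a call to `UseMvc` throws at startup; `UseIdentity` had already been replaced by `UseAuthentication` in ASP.NET Core 2.0. The following is a rough sketch of how the Startup class above could be expressed as a .NET 6 (or later) `Program.cs` using the minimal hosting model, with `AddControllersWithViews`, `MapControllerRoute`, and authentication/authorization placed between `UseRouting` and the endpoints. It is not the sample's code. It assumes the sample's `ApplicationUser`, `ApplicationRole`, `CustomUserStore`, `CustomRoleStore` and `DapperUsersTable` types live in the `CustomIdentityProviderSample` or `CustomIdentityProviderSample.CustomProvider` namespaces, and that the project (including `DapperUsersTable`) has been switched to the `Microsoft.Data.SqlClient` package for `SqlConnection`.

```csharp
// Program.cs sketch for .NET 6+ (minimal hosting). Assumption: the sample's
// Identity types and DapperUsersTable are in the project, and SqlConnection
// comes from Microsoft.Data.SqlClient throughout.
using System;
using CustomIdentityProviderSample;
using CustomIdentityProviderSample.CustomProvider;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Identity;
using Microsoft.Data.SqlClient;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;

var builder = WebApplication.CreateBuilder(args);

// Identity with the custom stores (same registrations as ConfigureServices).
builder.Services.AddIdentity<ApplicationUser, ApplicationRole>()
    .AddDefaultTokenProviders();
builder.Services.AddTransient<IUserStore<ApplicationUser>, CustomUserStore>();
builder.Services.AddTransient<IRoleStore<ApplicationRole>, CustomRoleStore>();

string connectionString = builder.Configuration.GetConnectionString("DefaultConnection")
    ?? throw new InvalidOperationException("Missing DefaultConnection connection string.");
builder.Services.AddTransient(_ => new SqlConnection(connectionString));
builder.Services.AddTransient<DapperUsersTable>();

// Controllers + Razor views; replaces services.AddMvc() for this kind of app.
builder.Services.AddControllersWithViews();
// The sample's IEmailSender/ISmsSender registrations would carry over unchanged.

var app = builder.Build();

// WebApplication enables the developer exception page in Development by itself.
if (!app.Environment.IsDevelopment())
{
    app.UseExceptionHandler("/Home/Error");
}

app.UseStaticFiles();

// Order matters: routing, then authentication, then authorization, then endpoints.
app.UseRouting();
app.UseAuthentication();
app.UseAuthorization();

// Endpoint routing replaces app.UseMvc(routes => routes.MapRoute(...)).
app.MapControllerRoute(
    name: "default",
    pattern: "{controller=Home}/{action=Index}/{id?}");

app.Run();
```

The email and SMS sender registrations are left out only to avoid guessing which namespace the sample puts them in.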

The Custom Storage Provider sample then failed because the database creation script and the code didn’t match.

```sql
CREATE TABLE [dbo].[CustomUser](
    [Id] [uniqueidentifier] NOT NULL,
    [Email] [nvarchar](256) NULL,
    [EmailConfirmed] [bit] NOT NULL,
    [PasswordHash] [nvarchar](max) NULL,
    [UserName] [nvarchar](256) NOT NULL,
    CONSTRAINT [PK_dbo.CustomUsers] PRIMARY KEY CLUSTERED
    (
        [Id] ASC
    ) WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]
) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]
```

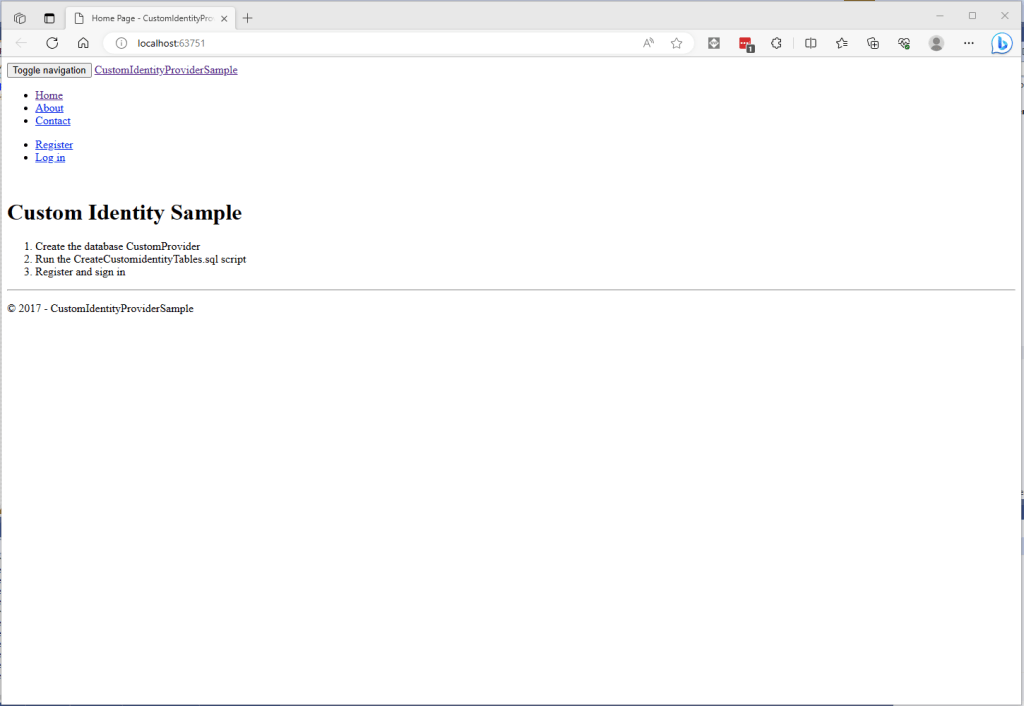

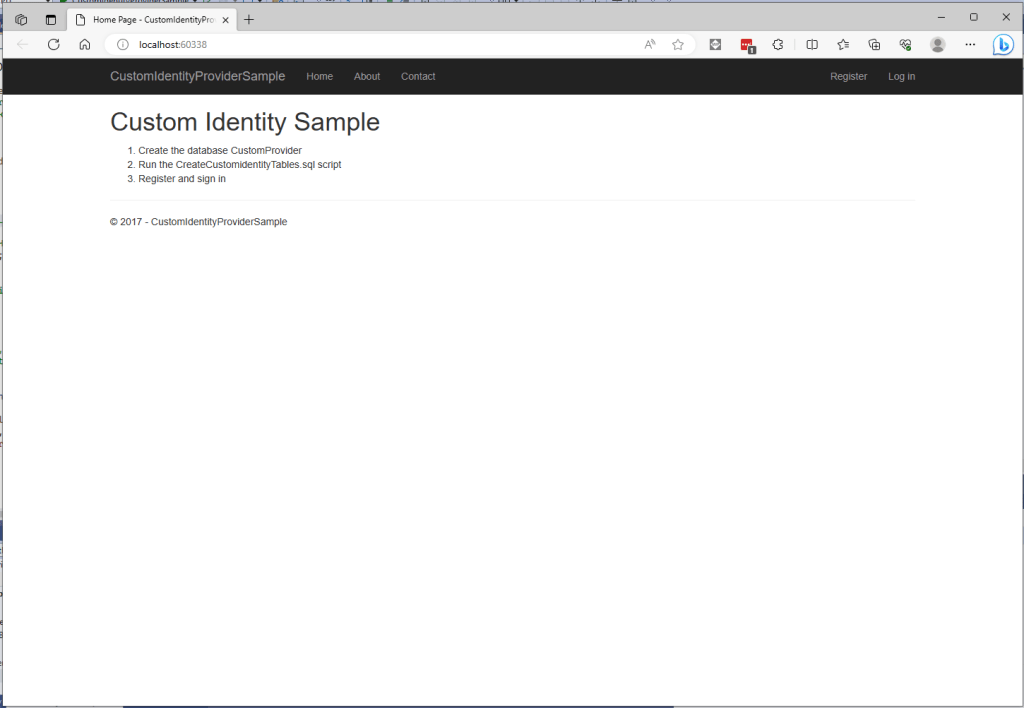

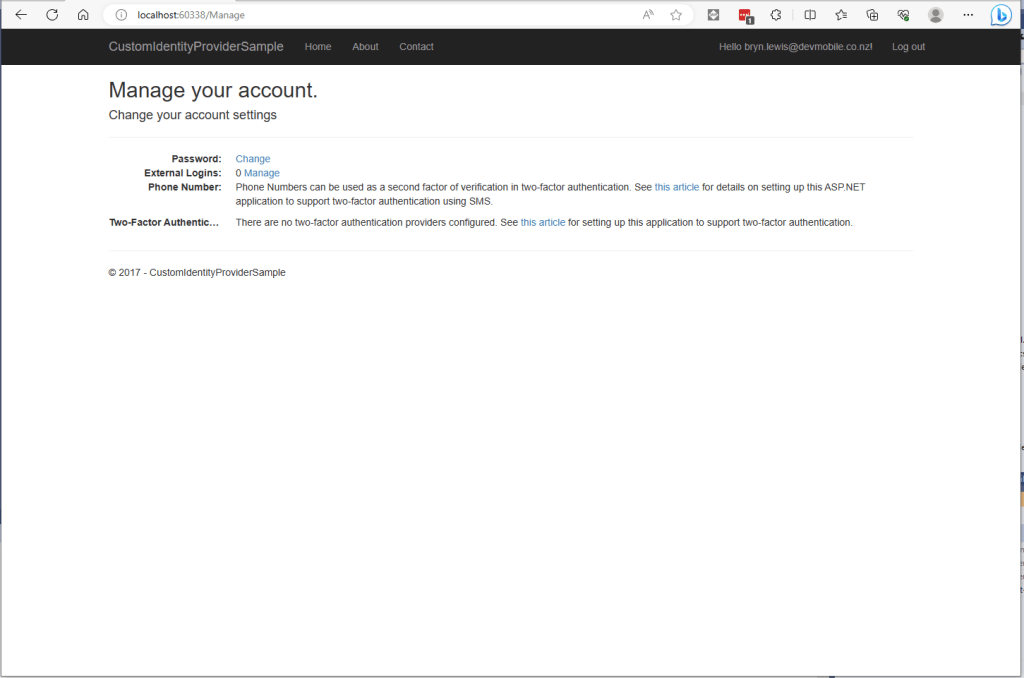

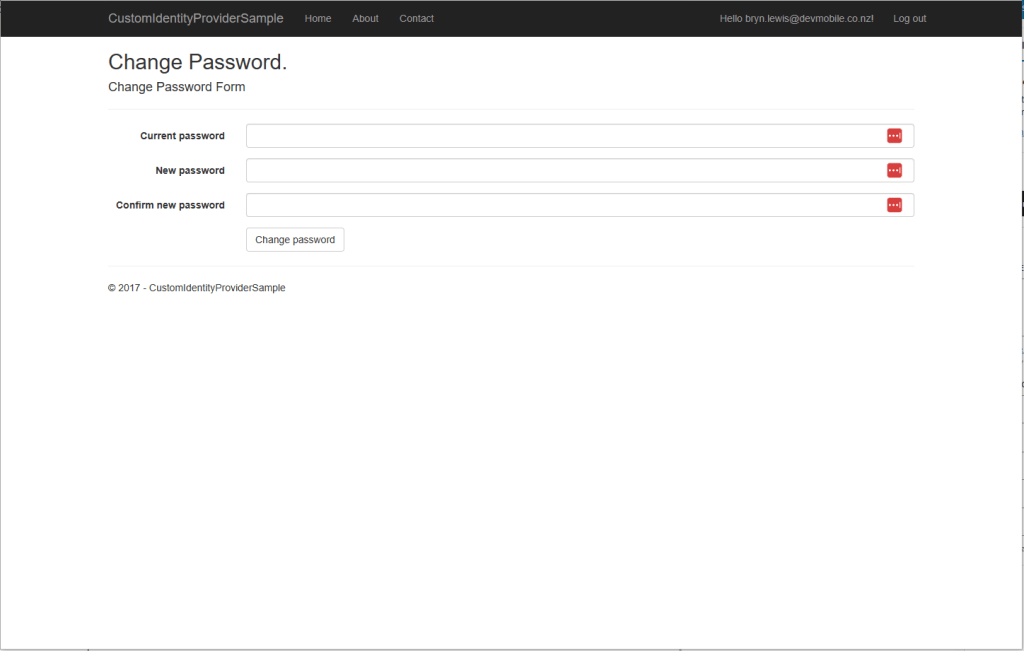

Finally, the Custom Storage Provider sample worked, but the page layout was broken.

I then worked through the Razor views, adding stylesheets where necessary.

```cshtml
@inject Microsoft.ApplicationInsights.AspNetCore.JavaScriptSnippet JavaScriptSnippet
<!DOCTYPE html>
<html>
<head>
    <meta charset="utf-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <title>@ViewData["Title"] - CustomIdentityProviderSample</title>
    @* BHL *@
    <link rel="stylesheet" href="https://ajax.aspnetcdn.com/ajax/bootstrap/3.3.7/css/bootstrap.css"
          asp-fallback-href="~/lib/bootstrap/dist/css/bootstrap.css"
          asp-fallback-test-class="sr-only" asp-fallback-test-property="position" asp-fallback-test-value="absolute" />
    <environment names="Development">
        <link rel="stylesheet" href="~/lib/bootstrap/dist/css/bootstrap.css" />
    </environment>
    <environment names="Staging,Production">
        <link rel="stylesheet" href="https://ajax.aspnetcdn.com/ajax/bootstrap/3.3.7/css/bootstrap.css"
              asp-fallback-href="~/lib/bootstrap/dist/css/bootstrap.css"
              asp-fallback-test-class="sr-only" asp-fallback-test-property="position" asp-fallback-test-value="absolute" />
    </environment>
    @Html.Raw(JavaScriptSnippet.FullScript)
</head>
...
```

The subset of pages in the Custom Storage Provider sample then rendered better.

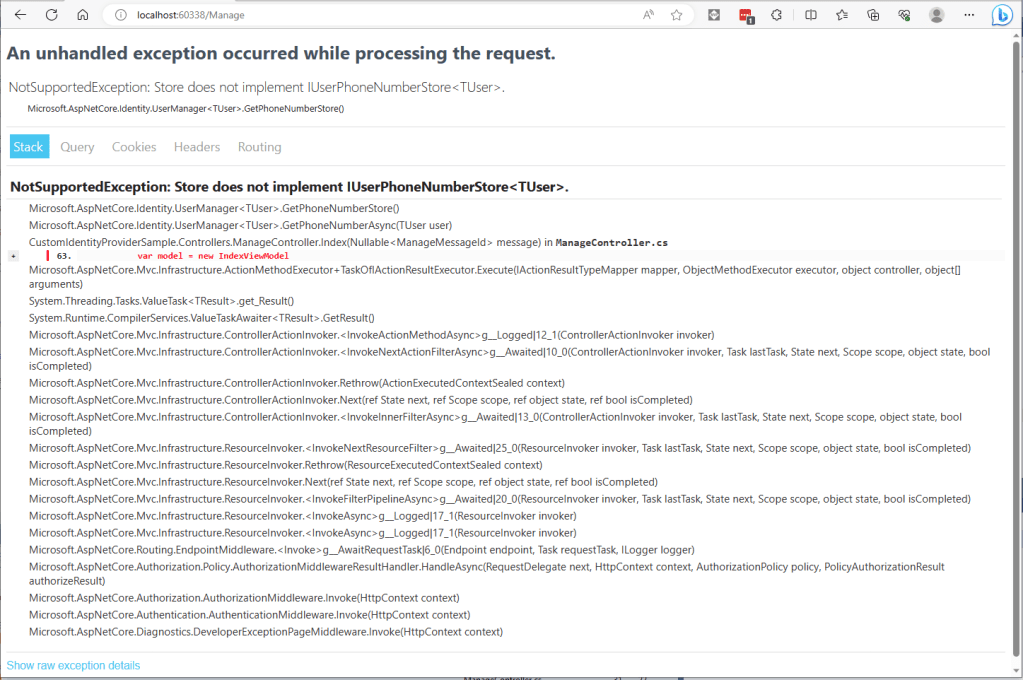

The Custom Storage Provider sample then failed when features such as the user’s phone number were used.

This required adding implementations of IUserPhoneNumberStore, IUserTwoFactorStore, IUserLoginStore and other interfaces (many of the methods just throw NotImplementedException).

```csharp
using Microsoft.AspNetCore.Identity;
using System;
using System.Threading.Tasks;
using System.Threading;
using System.Collections.Generic;

namespace CustomIdentityProviderSample.CustomProvider
{
    /// <summary>
    /// This store is only partially implemented. It supports user creation and find methods.
    /// </summary>
    public class CustomUserStore : IUserStore<ApplicationUser>,
        IUserPasswordStore<ApplicationUser>,
        IUserPhoneNumberStore<ApplicationUser>,
        IUserTwoFactorStore<ApplicationUser>,
        IUserLoginStore<ApplicationUser>
    {
        private readonly DapperUsersTable _usersTable;

        public CustomUserStore(DapperUsersTable usersTable)
        {
            _usersTable = usersTable;
        }

        public Task AddLoginAsync(ApplicationUser user, UserLoginInfo login, CancellationToken cancellationToken)
        {
            throw new NotImplementedException();
        }

        public async Task<IdentityResult> CreateAsync(ApplicationUser user,
            CancellationToken cancellationToken = default(CancellationToken))
        {
            cancellationToken.ThrowIfCancellationRequested();
            if (user == null) throw new ArgumentNullException(nameof(user));

            return await _usersTable.CreateAsync(user);
        }

        public async Task<IdentityResult> DeleteAsync(ApplicationUser user,
            CancellationToken cancellationToken = default(CancellationToken))
        {
            cancellationToken.ThrowIfCancellationRequested();
            if (user == null) throw new ArgumentNullException(nameof(user));

            return await _usersTable.DeleteAsync(user);
        }

        public async Task<ApplicationUser> FindByIdAsync(string userId,
            CancellationToken cancellationToken = default(CancellationToken))
        {
            cancellationToken.ThrowIfCancellationRequested();
            if (userId == null) throw new ArgumentNullException(nameof(userId));

            Guid idGuid;
            if (!Guid.TryParse(userId, out idGuid))
            {
                throw new ArgumentException("Not a valid Guid id", nameof(userId));
            }

            return await _usersTable.FindByIdAsync(idGuid);
        }

        public Task<ApplicationUser> FindByLoginAsync(string loginProvider, string providerKey, CancellationToken cancellationToken)
        {
            throw new NotImplementedException();
        }

        public async Task<ApplicationUser> FindByNameAsync(string userName,
            CancellationToken cancellationToken = default(CancellationToken))
        {
            cancellationToken.ThrowIfCancellationRequested();
            if (userName == null) throw new ArgumentNullException(nameof(userName));

            return await _usersTable.FindByNameAsync(userName);
        }

        public async Task<IList<UserLoginInfo>> GetLoginsAsync(ApplicationUser user, CancellationToken cancellationToken)
        {
            cancellationToken.ThrowIfCancellationRequested();
            if (user == null) throw new ArgumentNullException(nameof(user));

            return await _usersTable.GetLoginsAsync(user.Id);
        }

        ...
}
```

This also required extensions to DapperUsersTable.cs.

```csharp
public async Task<IdentityResult> UpdateAsync(ApplicationUser user)
{
    string sql = "UPDATE dbo.AspNetUsers " + // BHL
        "SET [Id] = @Id, [Email]= @Email, [EmailConfirmed] = @EmailConfirmed, [PasswordHash] = @PasswordHash, [UserName] = @UserName " +
        "WHERE Id = @Id;";

    int rows = await _connection.ExecuteAsync(sql, new { user.Id, user.Email, user.EmailConfirmed, user.PasswordHash, user.UserName });

    if (rows == 1)
    {
        return IdentityResult.Success;
    }

    return IdentityResult.Failed(new IdentityError { Description = $"Could not update user {user.Email}." });
}
```
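If a store does not implement an interface such as `IUserPhoneNumberStore<TUser>`, `UserManager` throws a `NotSupportedException` as soon as that feature is used, and a stub that throws `NotImplementedException` only moves the failure into the store. The phone number and two-factor members are mostly property accessors on the user object, with `UserManager` calling `UpdateAsync` afterwards to persist the change. A minimal sketch of that pattern follows. To keep it runnable without a database it uses a hypothetical `InMemoryUserStore` and a hypothetical `SketchUser` class in place of the sample’s `CustomUserStore`, `DapperUsersTable` and `ApplicationUser`.

```csharp
#nullable enable
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Identity;

// Hypothetical user type for this sketch; stands in for the sample's ApplicationUser.
public class SketchUser
{
    public Guid Id { get; set; } = Guid.NewGuid();
    public string? UserName { get; set; }
    public string? NormalizedUserName { get; set; }
    public string? PhoneNumber { get; set; }
    public bool PhoneNumberConfirmed { get; set; }
    public bool TwoFactorEnabled { get; set; }
}

// Hypothetical in-memory store standing in for CustomUserStore + DapperUsersTable.
public sealed class InMemoryUserStore :
    IUserPhoneNumberStore<SketchUser>, IUserTwoFactorStore<SketchUser>
{
    private readonly ConcurrentDictionary<Guid, SketchUser> _users = new();

    // IUserStore<TUser> members (both interfaces above inherit IUserStore<TUser>).
    public Task<string> GetUserIdAsync(SketchUser user, CancellationToken ct) =>
        Task.FromResult(user.Id.ToString());
    public Task<string?> GetUserNameAsync(SketchUser user, CancellationToken ct) =>
        Task.FromResult(user.UserName);
    public Task SetUserNameAsync(SketchUser user, string? userName, CancellationToken ct)
    {
        user.UserName = userName;
        return Task.CompletedTask;
    }
    public Task<string?> GetNormalizedUserNameAsync(SketchUser user, CancellationToken ct) =>
        Task.FromResult(user.NormalizedUserName);
    public Task SetNormalizedUserNameAsync(SketchUser user, string? normalizedName, CancellationToken ct)
    {
        user.NormalizedUserName = normalizedName;
        return Task.CompletedTask;
    }
    public Task<IdentityResult> CreateAsync(SketchUser user, CancellationToken ct) =>
        Task.FromResult(_users.TryAdd(user.Id, user)
            ? IdentityResult.Success
            : IdentityResult.Failed(new IdentityError { Description = $"User {user.Id} already exists." }));
    public Task<IdentityResult> UpdateAsync(SketchUser user, CancellationToken ct)
    {
        // A database-backed store would write the phone and two-factor columns here.
        _users[user.Id] = user;
        return Task.FromResult(IdentityResult.Success);
    }
    public Task<IdentityResult> DeleteAsync(SketchUser user, CancellationToken ct) =>
        Task.FromResult(_users.TryRemove(user.Id, out _) ? IdentityResult.Success : IdentityResult.Failed());
    public Task<SketchUser?> FindByIdAsync(string userId, CancellationToken ct) =>
        Task.FromResult<SketchUser?>(
            Guid.TryParse(userId, out var id) && _users.TryGetValue(id, out var found) ? found : null);
    // UserManager passes the normalized user name here, not the name as typed.
    public Task<SketchUser?> FindByNameAsync(string normalizedUserName, CancellationToken ct) =>
        Task.FromResult<SketchUser?>(
            _users.Values.FirstOrDefault(u => u.NormalizedUserName == normalizedUserName));

    // IUserPhoneNumberStore<TUser> members: property access only, persisted by UpdateAsync.
    public Task SetPhoneNumberAsync(SketchUser user, string? phoneNumber, CancellationToken ct)
    {
        user.PhoneNumber = phoneNumber;
        return Task.CompletedTask;
    }
    public Task<string?> GetPhoneNumberAsync(SketchUser user, CancellationToken ct) =>
        Task.FromResult(user.PhoneNumber);
    public Task<bool> GetPhoneNumberConfirmedAsync(SketchUser user, CancellationToken ct) =>
        Task.FromResult(user.PhoneNumberConfirmed);
    public Task SetPhoneNumberConfirmedAsync(SketchUser user, bool confirmed, CancellationToken ct)
    {
        user.PhoneNumberConfirmed = confirmed;
        return Task.CompletedTask;
    }

    // IUserTwoFactorStore<TUser> members.
    public Task SetTwoFactorEnabledAsync(SketchUser user, bool enabled, CancellationToken ct)
    {
        user.TwoFactorEnabled = enabled;
        return Task.CompletedTask;
    }
    public Task<bool> GetTwoFactorEnabledAsync(SketchUser user, CancellationToken ct) =>
        Task.FromResult(user.TwoFactorEnabled);

    public void Dispose() { }
}
```

In the database-backed version, the equivalent `DapperUsersTable.UpdateAsync` would also need to write phone number and two-factor columns, which neither the `CustomUser` table script nor the `UpdateAsync` SQL shown here currently include.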

After many failed attempts, my very nasty Custom Storage Provider refresh works (with many warnings and messages). I now understand how custom storage providers work well enough that I am going to start again from scratch.