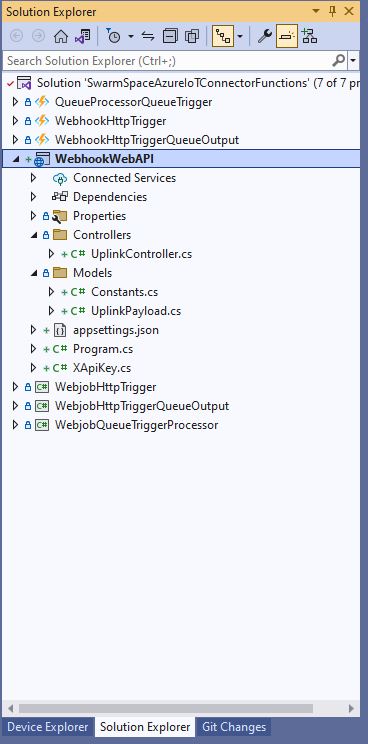

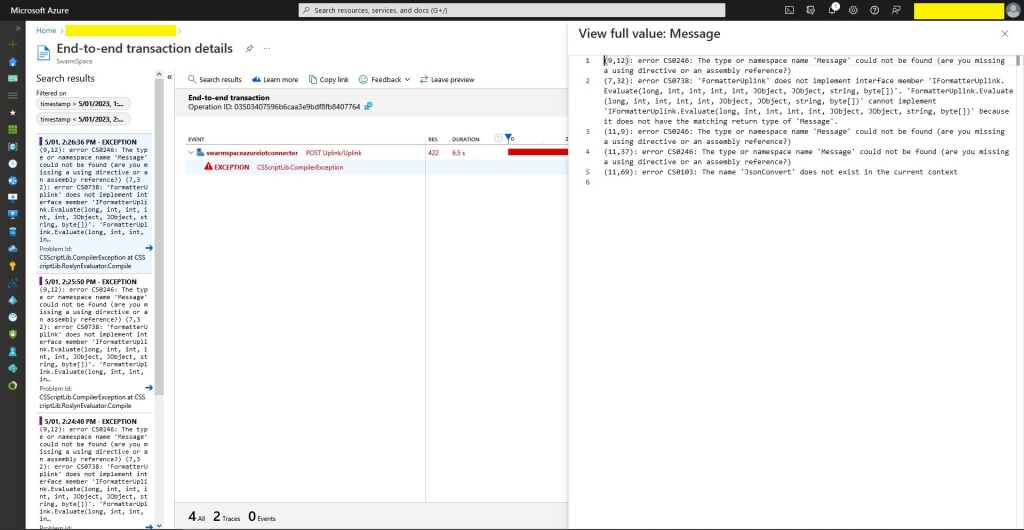

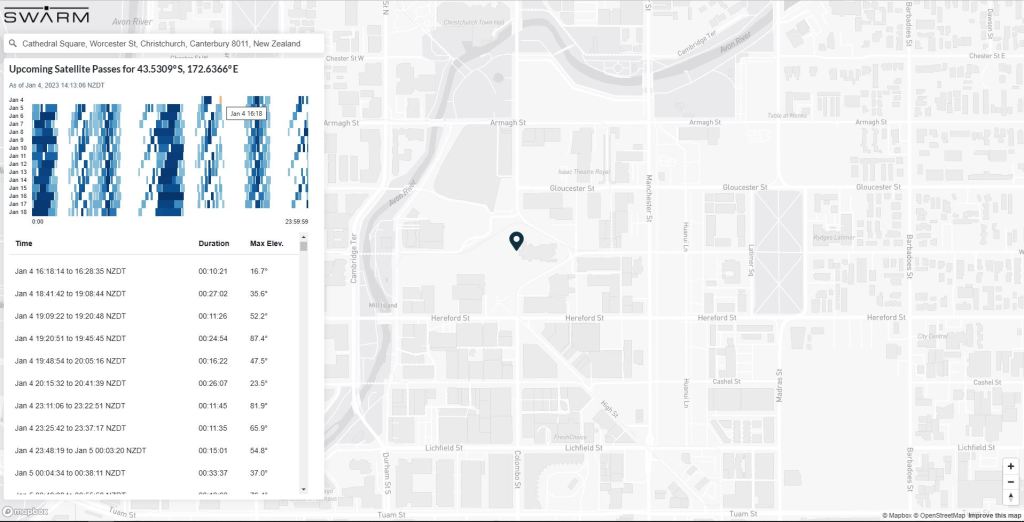

This post could have been much longer with more screen grabs and code snippets, so this is the “highlights package”. It took a lot longer than I expected, as building, testing locally, then deploying the different implementations was time-consuming.

I built the projects to investigate the different options, taking into account reliability, robustness, amount of code, and performance (I think slow startup could be a problem). The code is very “plain”: I used the default options, no copyright notices and default formatting, and I relied on the context-sensitive error messages to add any required “using” statements, libraries etc.

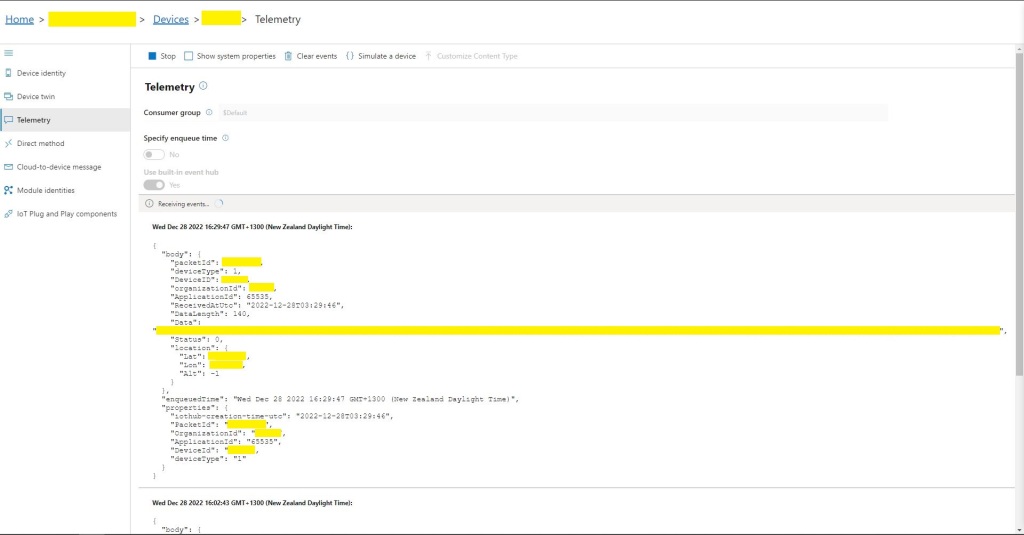

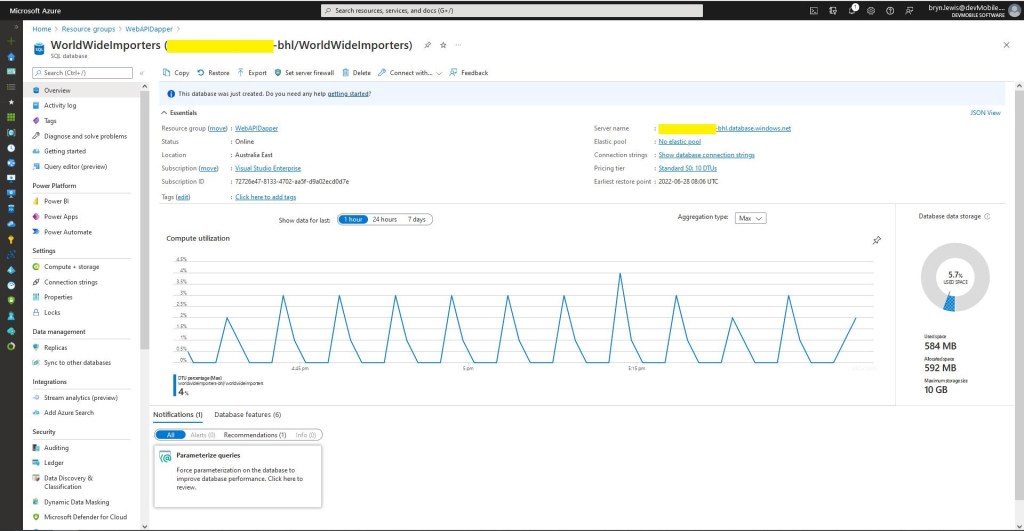

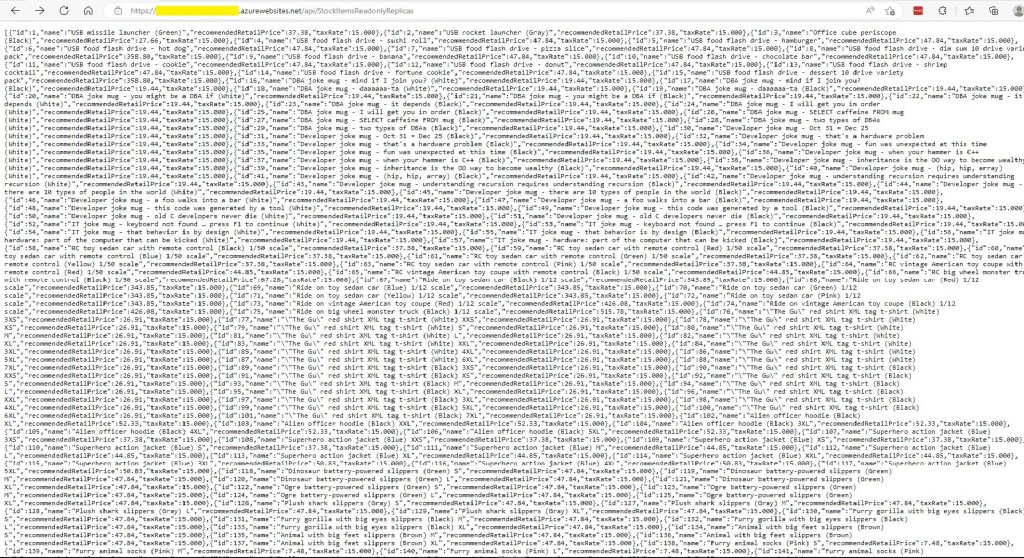

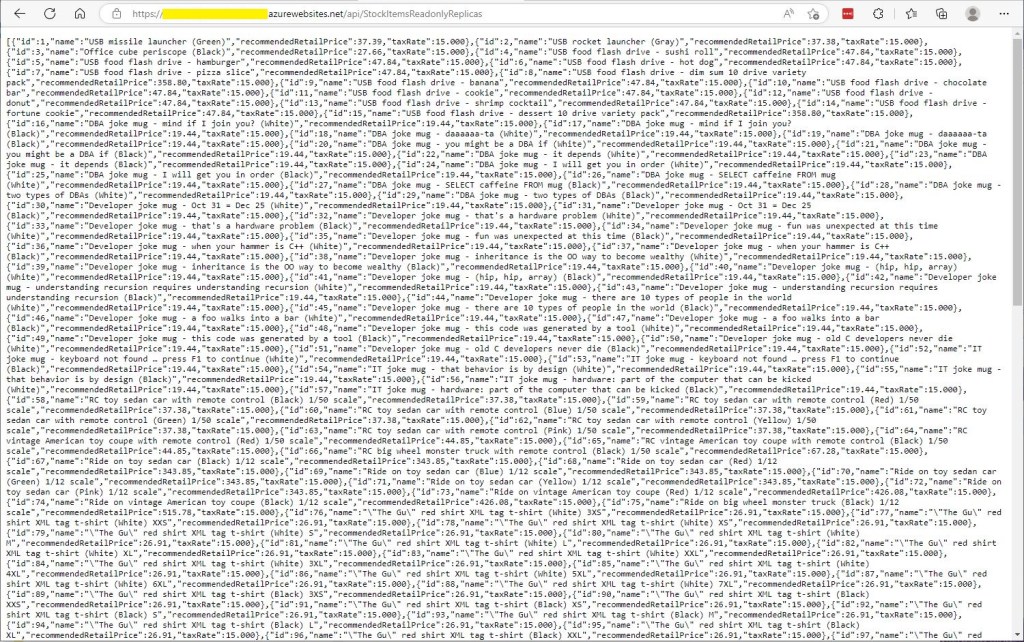

I also deployed the Azure Functions and the ASP.NET Core Web API application to check there was no difference (beyond performance) in the way they worked. I included a “default” function (generated by the new project wizard) for reference while I was building the others.

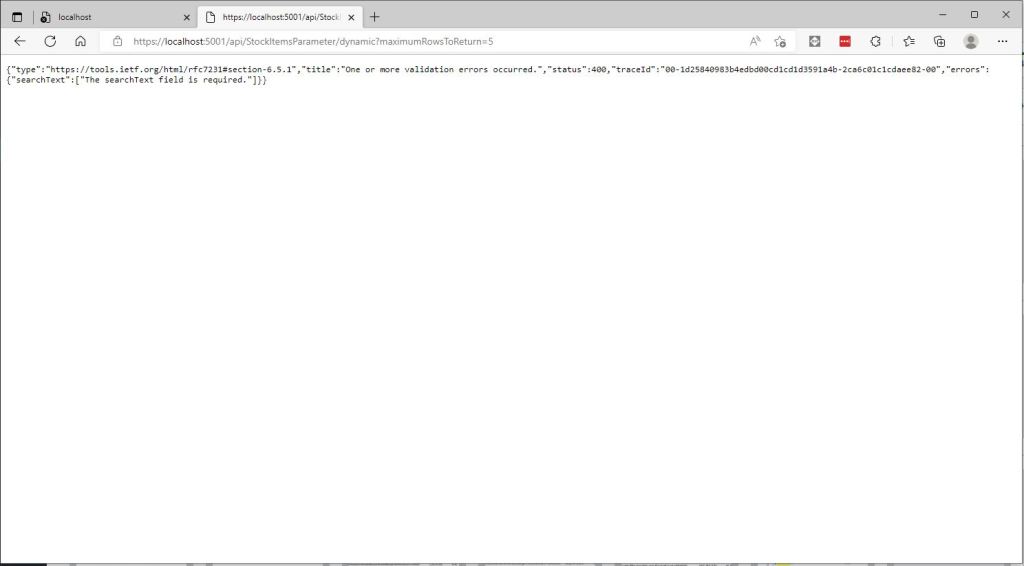

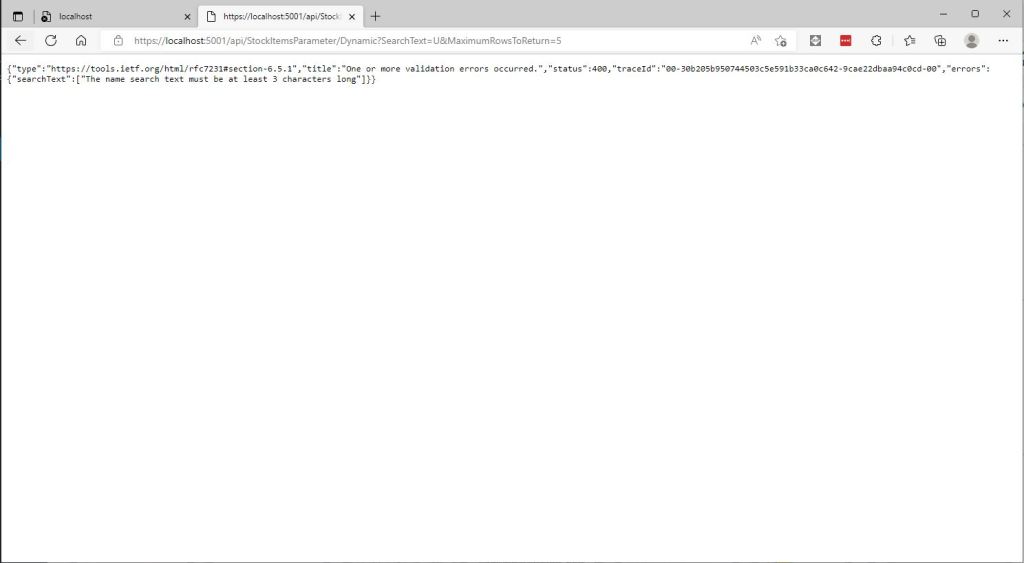

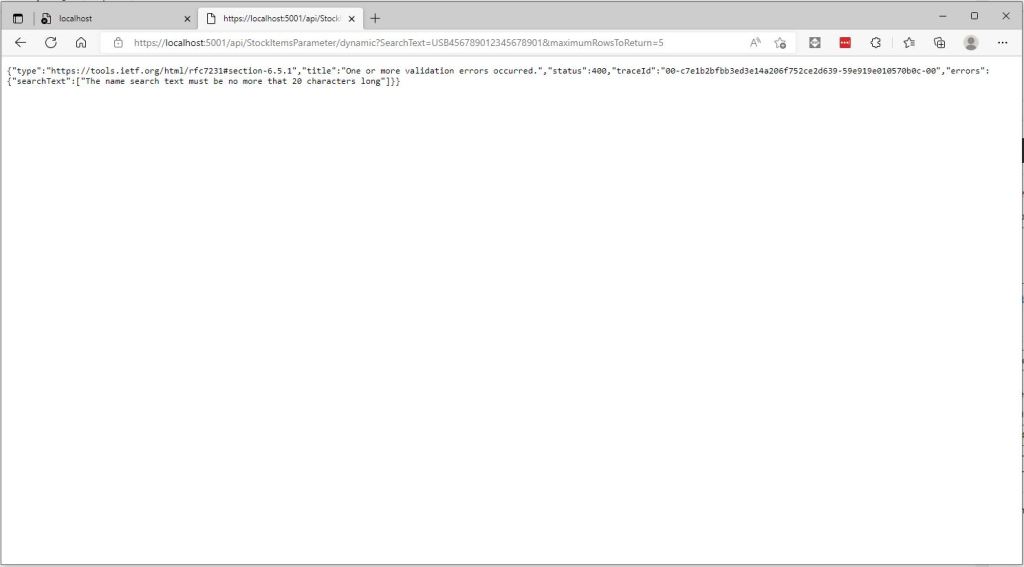

The “dynamic” type function worked but broke when the JavaScript Object Notation (JSON) was invalid or fields were missing, and it didn’t check that the payload format was correct.

    namespace WebhookHttpTrigger
    {
        public static class Dynamic
        {
            [FunctionName("Dynamic")]
            public static async Task<IActionResult> Run(
                [HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] dynamic input,
                ILogger log)
            {
                log.LogInformation($"C# HTTP Dynamic trigger function processed a request PacketId:{input.packetId}.");

                return new OkObjectResult("Hello, This HTTP triggered Dynamic function executed successfully.");
            }
        }
    }

The “TypedAutomagic” function worked: it ensured the JavaScript Object Notation (JSON) was valid and the payload format was correct, but it didn’t enforce the System.ComponentModel.DataAnnotations attributes.

    namespace WebhookHttpTrigger
    {
        public static class TypedAutomagic
        {
            [FunctionName("TypedAutomagic")]
            public static async Task<IActionResult> Run(
                [HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] UplinkPayload payload,
                ILogger log)
            {
                log.LogInformation($"C# HTTP trigger function typed TypedAutomagic UplinkPayload processed a request PacketId:{payload.PacketId}");

                return new OkObjectResult("Hello, This HTTP triggered automagic function executed successfully.");
            }
        }
    }

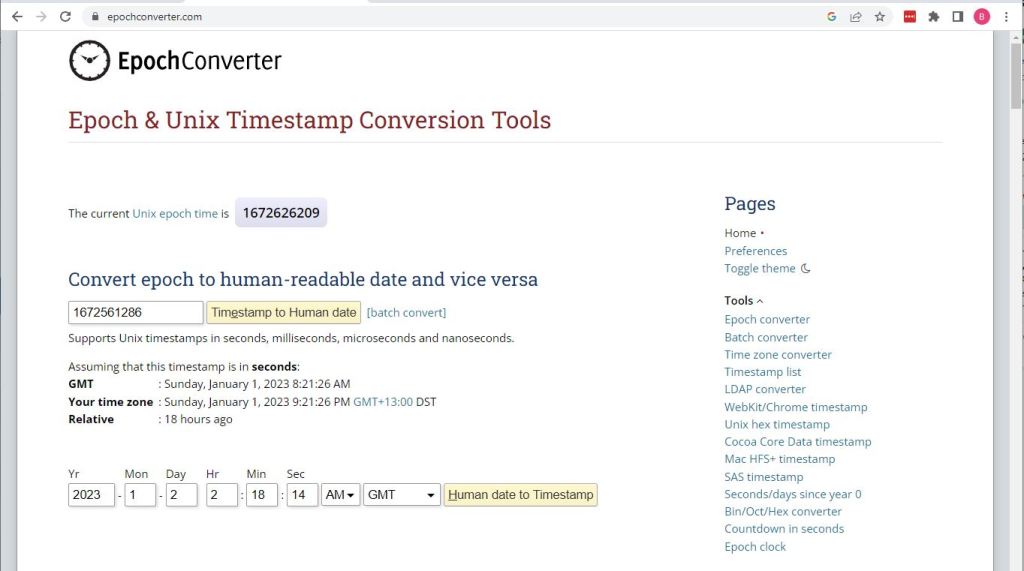

The “TypedAutomagic” implementation also detected when the JSON property values in the payload couldn’t be deserialised successfully, but if the hiveRxTime was invalid the value was set to 1/1/0001 12:00:00 am (DateTime.MinValue) instead of the request being rejected.
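The 1/1/0001 value is `DateTime.MinValue`, which is also `default(DateTime)`, so a timestamp that was never populated looks exactly like that sentinel. Here is a minimal sketch of the effect. It assumes a hypothetical, cut-down `UplinkPayloadSketch` class (the real `UplinkPayload` isn't shown in this post) and uses `System.Text.Json`, which may not be the serialiser the Functions runtime uses for binding.

```csharp
using System;
using System.Text.Json;

// Hypothetical, cut-down stand-in for UplinkPayload (illustration only).
public class UplinkPayloadSketch
{
    public int PacketId { get; set; }
    public DateTime HiveRxTimeUtc { get; set; }
}

public static class HiveRxTimeCheck
{
    private static readonly JsonSerializerOptions Options = new JsonSerializerOptions
    {
        // Lets "packetId" in the JSON match "PacketId" on the class.
        PropertyNameCaseInsensitive = true
    };

    // Returns false when the timestamp was left at its default value.
    public static bool HasHiveRxTime(string json)
    {
        UplinkPayloadSketch payload = JsonSerializer.Deserialize<UplinkPayloadSketch>(json, Options);

        // A property missing from the JSON keeps default(DateTime), which is DateTime.MinValue.
        return payload != null && payload.HiveRxTimeUtc != DateTime.MinValue;
    }
}
```

Calling `HiveRxTimeCheck.HasHiveRxTime` with a body that has no timestamp property returns `false`, which is the case the `DateTime.MinValue` comparison is meant to catch.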

The “TypedDeserializeObject” function worked: it ensured the JavaScript Object Notation (JSON) was valid and the payload format was correct, but it also didn’t enforce the System.ComponentModel.DataAnnotations attributes.

    namespace WebhookHttpTrigger
    {
        public static class TypedDeserializeObject
        {
            [FunctionName("TypedDeserializeObject")]
            public static async Task<IActionResult> Run(
                [HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] string httpPayload,
                ILogger log)
            {
                UplinkPayload uplinkPayload;

                try
                {
                    uplinkPayload = JsonConvert.DeserializeObject<UplinkPayload>(httpPayload);
                }
                catch (Exception ex)
                {
                    log.LogWarning(ex, "JsonConvert.DeserializeObject failed");

                    return new BadRequestObjectResult(ex.Message);
                }

                // DeserializeObject returns null (no exception) for an empty or "null" body
                if (uplinkPayload == null)
                {
                    log.LogWarning("JsonConvert.DeserializeObject returned null");

                    return new BadRequestObjectResult("Payload is empty");
                }

                log.LogInformation($"C# HTTP trigger function typed DeserializeObject UplinkPayload processed a request PacketId:{uplinkPayload.PacketId}");

                return new OkObjectResult("Hello, This HTTP triggered DeserializeObject function executed successfully.");
            }
        }
    }

The “TypedDeserializeObjectAnnotations” function worked: it ensured the JavaScript Object Notation (JSON) was valid and the payload format was correct, and it enforced the System.ComponentModel.DataAnnotations attributes.

    namespace WebhookHttpTrigger
    {
        public static class TypedDeserializeObjectAnnotations
        {
            [FunctionName("TypedDeserializeObjectAnnotations")]
            public static async Task<IActionResult> Run(
                [HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] string httpPayload,
                ILogger log)
            {
                UplinkPayload uplinkPayload;

                try
                {
                    uplinkPayload = JsonConvert.DeserializeObject<UplinkPayload>(httpPayload);
                }
                catch (Exception ex)
                {
                    log.LogWarning(ex, "JsonConvert.DeserializeObject failed");

                    return new BadRequestObjectResult(ex.Message);
                }

                // DeserializeObject returns null for an empty or "null" body, and ValidationContext rejects a null instance
                if (uplinkPayload == null)
                {
                    log.LogWarning("JsonConvert.DeserializeObject returned null");

                    return new BadRequestObjectResult("Payload is empty");
                }

                var context = new ValidationContext(uplinkPayload, serviceProvider: null, items: null);
                var results = new List<ValidationResult>();

                // validateAllProperties: true so every attribute is checked, not just [Required]
                var isValid = Validator.TryValidateObject(uplinkPayload, context, results, true);
                if (!isValid)
                {
                    log.LogWarning("Validator.TryValidateObject failed results:{results}", results);

                    return new BadRequestObjectResult(results);
                }

                log.LogInformation($"C# HTTP trigger function typed DeserializeObject UplinkPayload processed a request PacketId:{uplinkPayload.PacketId}");

                return new OkObjectResult("Hello, This HTTP triggered DeserializeObject function executed successfully.");
            }
        }
    }
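The last argument to `Validator.TryValidateObject` matters: when `validateAllProperties` is `false`, only `[Required]` attributes are checked. Here is a minimal sketch of the difference. It assumes a hypothetical `AnnotatedPayloadSketch` class with made-up constraints, because the attributes on the real `UplinkPayload` aren't shown in this post.

```csharp
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

// Hypothetical payload with illustrative constraints (not the real UplinkPayload).
public class AnnotatedPayloadSketch
{
    [Range(1, int.MaxValue)]
    public int PacketId { get; set; }

    [Required]
    public string DeviceId { get; set; }
}

public static class PayloadValidator
{
    // Thin wrapper around Validator.TryValidateObject that hands back the collected results.
    public static bool TryValidate(object payload, bool validateAllProperties, out List<ValidationResult> results)
    {
        var context = new ValidationContext(payload, serviceProvider: null, items: null);
        results = new List<ValidationResult>();

        // With validateAllProperties == false only [Required] is evaluated;
        // with true, [Range] and the other property attributes are evaluated too.
        return Validator.TryValidateObject(payload, context, results, validateAllProperties);
    }
}
```

With `PacketId = 0` and a non-null `DeviceId`, this sketch passes validation when `validateAllProperties` is `false` and fails when it is `true`. This is why the function above passes `true`.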

I built an ASP.NET Core Web API version with two uplink method implementations, one which used dependency injection (DI) and one which didn’t. I also added code to validate the deserialisation of HiveRxTimeUtc.

....

    [HttpPost]
    public async Task<IActionResult> Post([FromBody] UplinkPayload payload)
    {
        if (payload.HiveRxTimeUtc == DateTime.MinValue)
        {
            _logger.LogWarning("HiveRxTimeUtc validation failed");

            return this.BadRequest();
        }

        QueueClient queueClient = _queueServiceClient.GetQueueClient("uplink");

        await queueClient.SendMessageAsync(Convert.ToBase64String(JsonSerializer.SerializeToUtf8Bytes(payload)));

        return this.Ok();
    }

...

    [HttpPost]
    public async Task<IActionResult> Post([FromBody] UplinkPayload payload)
    {
        // Check that the post data is good
        if (!this.ModelState.IsValid)
        {
            _logger.LogWarning("QueuedController validation failed {0}", this.ModelState.ToString());

            return this.BadRequest(this.ModelState);
        }

        if (payload.HiveRxTimeUtc == DateTime.MinValue)
        {
            _logger.LogWarning("HiveRxTimeUtc validation failed");

            return this.BadRequest();
        }

        try
        {
            QueueClient queueClient = new QueueClient(_configuration.GetConnectionString("AzureWebApi"), "uplink");

            //await queueClient.CreateIfNotExistsAsync();

            await queueClient.SendMessageAsync(Convert.ToBase64String(JsonSerializer.SerializeToUtf8Bytes(payload)));
        }
        catch (Exception ex)
        {
            // The exception is passed once, as the first argument; the message has no placeholders
            _logger.LogError(ex, "Unable to open/create queue or send message");

            return this.Problem("Unable to open queue (creating if it doesn't exist) or send message", statusCode: 500, title: "Uplink payload not sent");
        }

        return this.Ok();
    }
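Both controller methods put the payload on the queue as Base64-encoded UTF-8 JSON. Here is a minimal sketch of that encoding and of how a consumer could reverse it. It assumes a hypothetical, cut-down `QueuedPayloadSketch` class in place of `UplinkPayload`, and it leaves the queue out so the snippet has no Azure dependency.

```csharp
using System;
using System.Text.Json;

// Hypothetical, cut-down stand-in for UplinkPayload (illustration only).
public class QueuedPayloadSketch
{
    public int PacketId { get; set; }
    public DateTime HiveRxTimeUtc { get; set; }
}

public static class QueueMessageCodec
{
    // Same expression the controllers pass to SendMessageAsync.
    public static string Encode(QueuedPayloadSketch payload)
    {
        return Convert.ToBase64String(JsonSerializer.SerializeToUtf8Bytes(payload));
    }

    // What a consumer would do with the message text it reads from the queue.
    public static QueuedPayloadSketch Decode(string messageText)
    {
        byte[] utf8Json = Convert.FromBase64String(messageText);

        return JsonSerializer.Deserialize<QueuedPayloadSketch>(utf8Json);
    }
}
```

Passing the result of `QueueMessageCodec.Encode` to `QueueMessageCodec.Decode` should give back an object with the same `PacketId` and `HiveRxTimeUtc`. That makes a quick local check that nothing is lost in the encoding.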

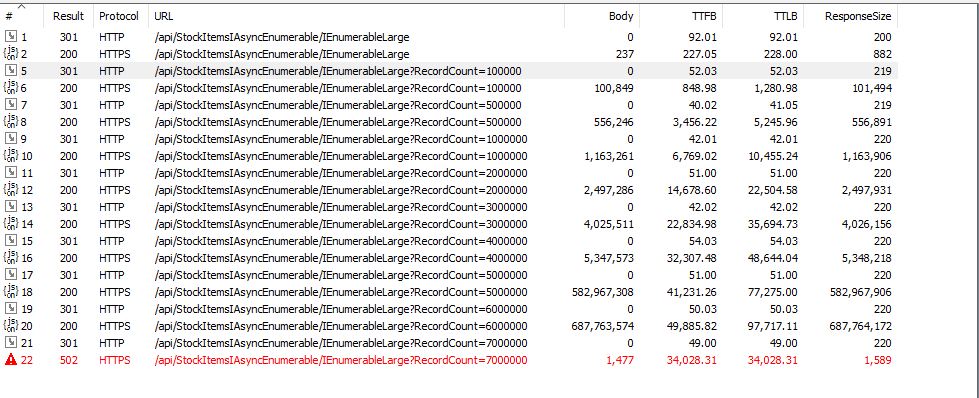

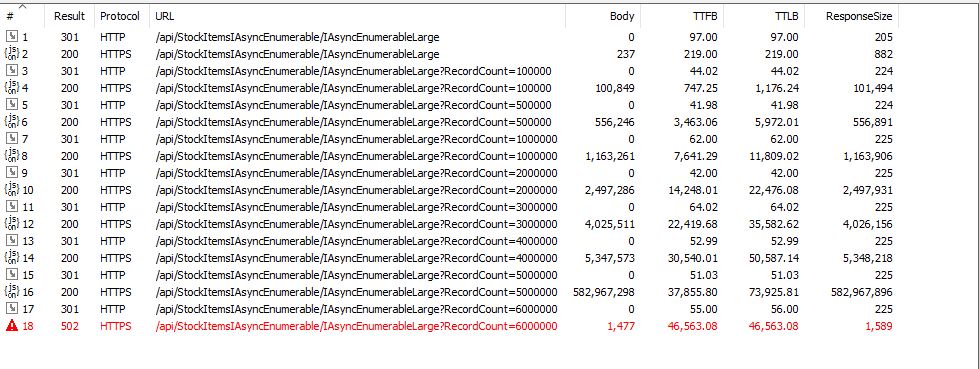

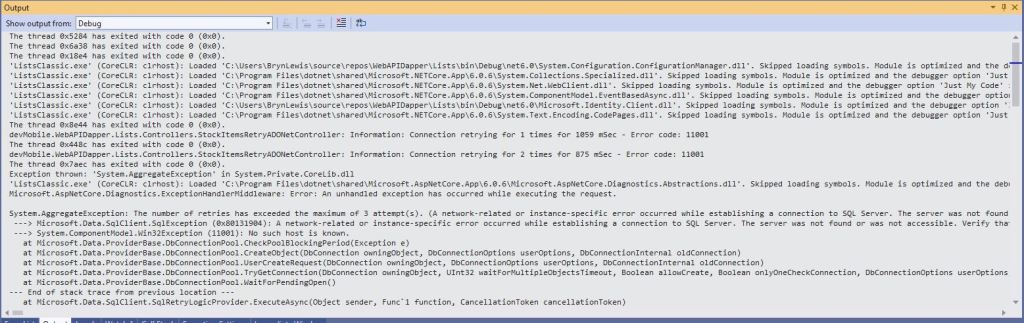

In Telerik Fiddler I could see that calls to the Azure Functions and the ASP.NET Core Web API took a similar time to execute (though I did see 5+ seconds), and the ASP.NET Core Web API appeared to take much longer to start up (I did see 100+ seconds when I made four requests as the ASP.NET Core Web API was starting).

I’m going to use the ASP.NET Core Web API with dependency injection (DI) approach just because “it’s always better with DI”.

I noticed some other “oddness” while implementing and then testing the Azure HTTP Trigger functions and the ASP.NET Core Web API, which I will cover off in some future posts.