Web cache validation with eTags

On a couple of the systems I work on, there are a number of queries (often complex spatial searches) which are very resource-intensive but quite readily cached. In these systems we have used the HTTP GET and HEAD request methods together, so the client only re-GETs the query results after a HEAD request indicates there have been updates.

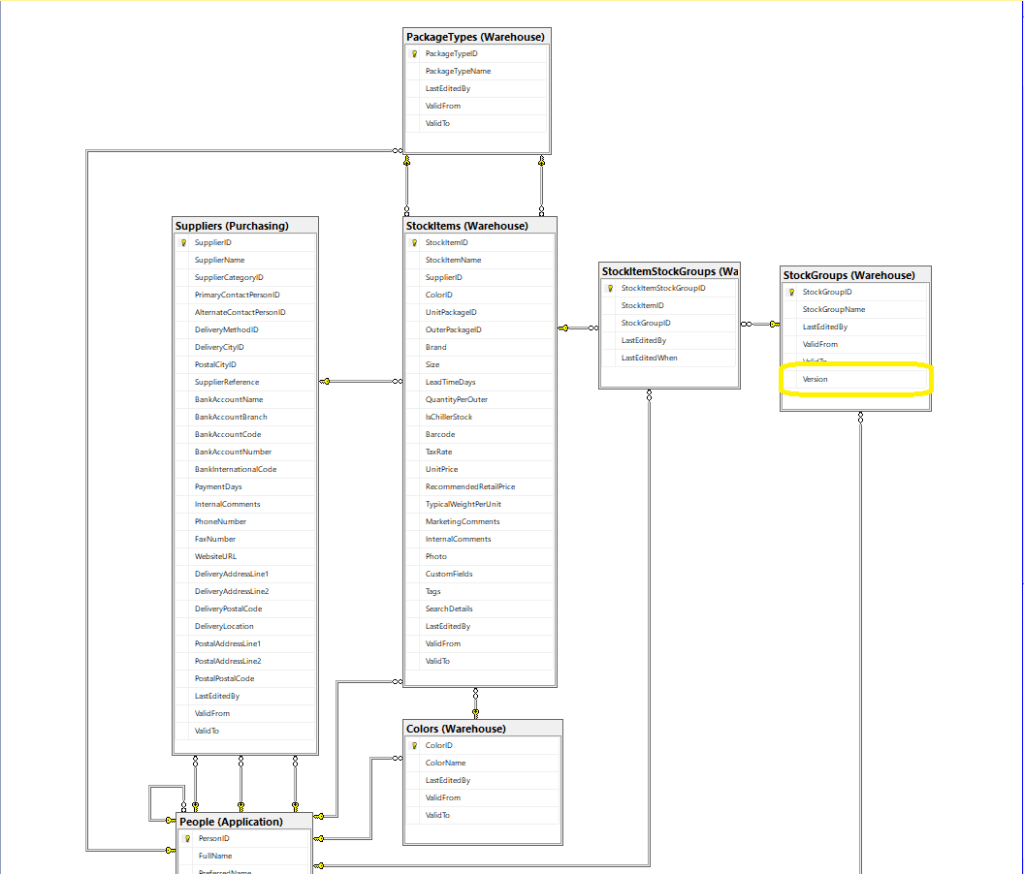
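A minimal sketch of the client side of this pattern, with the server simulated in memory so it runs on its own. `Head`, `Get` and `Fetch` are hypothetical names for illustration. A real client would make the requests with HttpClient and send the cached eTag in whichever header the HEAD endpoint reads.

```csharp
using System;

// Simulated server state (hypothetical, stands in for the database and API).
string serverETag = "AAAAAAABrdE=";
string serverBody = "[{\"id\":5,\"name\":\"Airline Novelties\"}]";
int getCount = 0;

// Client-side cache of the last GET result and the eTag that came with it.
string? cachedETag = null;
string? cachedBody = null;

// Simulated HEAD: 304 Not Modified when the supplied eTag is current, otherwise 200 OK.
int Head(string? eTag) => eTag == serverETag ? 304 : 200;

// Simulated GET: returns the (expensive) query results and the current eTag.
(string Body, string ETag) Get()
{
    getCount++;
    return (serverBody, serverETag);
}

// Only re-GET the results when a cheap HEAD request says they have changed.
string Fetch()
{
    if (cachedBody != null && Head(cachedETag) == 304)
    {
        return cachedBody;
    }

    (cachedBody, cachedETag) = Get();
    return cachedBody;
}

Console.WriteLine(Fetch()); // first call: cache is empty, so GET
Console.WriteLine(Fetch()); // second call: HEAD returns 304, cached body reused
Console.WriteLine($"GET requests: {getCount}");
```

The trade-off is an extra round trip for the HEAD request, which pays off when the GET is expensive and the data changes rarely.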

I have been trying to keep the number of changes to my Microsoft SQL Azure Wide World Importers database to a minimum, but for this post I have added a rowversion column to the StockGroups table. The rowversion data type is an automatically generated 8 byte binary number that is unique within a database, which is 12 characters long when Base64 encoded.
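A quick way to see the 8 byte to 12 character relationship, using the eTag value from the Fiddler capture later in this post. Because a rowversion is an increasing counter stored most significant byte first, the same bytes can also be read as a number, although the eTag only ever needs to be compared for equality.

```csharp
using System;
using System.Buffers.Binary;

// The 8 bytes behind the eTag "AAAAAAABrdE=" returned by the GET method.
byte[] rowVersion = { 0x00, 0x00, 0x00, 0x00, 0x00, 0x01, 0xAD, 0xD1 };

// Base64 turns each 3 byte group into 4 characters; 8 bytes = 2 full groups
// plus 2 bytes, which become 4 characters ending in a single '=' pad: 12 in total.
string eTag = Convert.ToBase64String(rowVersion);

// rowversion is an incrementing counter stored most significant byte first.
ulong counter = BinaryPrimitives.ReadUInt64BigEndian(rowVersion);

Console.WriteLine($"{eTag} ({eTag.Length} characters) = {counter}");
// AAAAAAABrdE= (12 characters) = 110033
```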

Adding a rowversion column to an existing system-versioned (temporal) table in the SQL Server Management Studio designer is painful, so I used the following T-SQL (timestamp is the deprecated synonym for rowversion):

    ALTER TABLE [Warehouse].[StockGroups] ADD [Version] [timestamp] NULL

To reduce complexity, the embedded SQL contains two commands (normally I wouldn’t do this): one retrieves the list of StockGroups and the other retrieves the maximum StockGroup rowversion. If a StockGroup is changed, its rowversion is “automagically” updated and the maximum value changes.
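The maximum-rowversion check works because SQL Server compares binary values byte by byte, and for a counter stored most significant byte first that is the same as numeric order. A sketch of the same comparison in C#, using made-up rowversion values for three rows; `MaxVersion` is a hypothetical helper, not part of the post's code.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical rowversion values for three StockGroups rows, most significant byte first.
var rowVersions = new List<byte[]>
{
    new byte[] { 0x00, 0x00, 0x00, 0x00, 0x00, 0x01, 0xAD, 0xCF },
    new byte[] { 0x00, 0x00, 0x00, 0x00, 0x00, 0x01, 0xAD, 0xD1 },
    new byte[] { 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0xFF, 0xFF },
};

// Byte-by-byte comparison, as SQL Server uses for binary values: for a big-endian
// counter this is numeric order, so MAX(Version) is the most recent change.
byte[] MaxVersion(IEnumerable<byte[]> versions)
{
    byte[]? max = null;
    foreach (byte[] version in versions)
    {
        if (max == null || version.AsSpan().SequenceCompareTo(max) > 0)
        {
            max = version;
        }
    }

    // SQL Server's MAX returns NULL for an empty table; here that is an exception.
    return max ?? throw new InvalidOperationException("No rows, so there is no maximum rowversion");
}

byte[] latest = MaxVersion(rowVersions);
Console.WriteLine($"MAX(Version) = 0x{Convert.ToHexString(latest)}, eTag {Convert.ToBase64String(latest)}");
// MAX(Version) = 0x000000000001ADD1, eTag AAAAAAABrdE=
```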

    [HttpGet]
    public async Task<ActionResult<IEnumerable<Model.StockGroupListDtoV1>>> Get()
    {
        IEnumerable<Model.StockGroupListDtoV1> response = null;

        try
        {
            using (SqlConnection db = new SqlConnection(this.connectionString))
            {
                // Output parameter for the maximum rowversion returned by the second command
                var parameters = new DynamicParameters();
                parameters.Add("@RowVersion", dbType: DbType.Binary, direction: ParameterDirection.Output, size: ETagBytesLength);

                response = await db.QueryAsync<Model.StockGroupListDtoV1>(
                    sql: @"SELECT [StockGroupID] as ""ID"", [StockGroupName] as ""Name"" FROM [Warehouse].[StockGroups] ORDER BY Name;
                           SELECT @RowVersion = MAX(Version) FROM [Warehouse].[StockGroups]",
                    param: parameters,
                    commandType: CommandType.Text);

                if (response.Any())
                {
                    // Output parameters are only populated once the results have been read
                    byte[] rowVersion = parameters.Get<byte[]>("RowVersion");

                    // 8 byte rowversion => 12 character Base64 eTag
                    this.HttpContext.Response.Headers.Add("ETag", Convert.ToBase64String(rowVersion));
                }
            }
        }
        catch (SqlException ex)
        {
            logger.LogError(ex, "Retrieving list of StockGroups");

            return this.StatusCode(StatusCodes.Status500InternalServerError);
        }

        return this.Ok(response);
    }
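Strictly, RFC 7232 defines an entity-tag as a double-quoted string, optionally prefixed with W/ for a weak validator. The bare Base64 value in the header above works with this custom HEAD method, but it is not a well-formed entity-tag, which other HTTP components such as caches may expect. A sketch of formatting and parsing the quoted form; `ToEntityTag` and `FromEntityTag` are hypothetical helpers, and the 8 byte length assumes a rowversion-based tag.

```csharp
using System;

// Format an 8 byte rowversion as a strong entity-tag: the Base64 text in double quotes.
string ToEntityTag(byte[] rowVersion) => "\"" + Convert.ToBase64String(rowVersion) + "\"";

// Parse a strong entity-tag back into rowversion bytes. Returns null for weak (W/...)
// or unquoted values, and for anything that does not decode to exactly 8 bytes.
byte[]? FromEntityTag(string value)
{
    if (value.Length < 2 || value[0] != '"' || value[^1] != '"')
    {
        return null;
    }

    byte[] buffer = new byte[8];
    bool decoded = Convert.TryFromBase64String(value[1..^1], buffer, out int bytesWritten);
    return decoded && bytesWritten == buffer.Length ? buffer : null;
}

byte[] rowVersion = { 0x00, 0x00, 0x00, 0x00, 0x00, 0x01, 0xAD, 0xD1 };
string entityTag = ToEntityTag(rowVersion);
Console.WriteLine(entityTag); // "AAAAAAABrdE=" (quotes included)
```

With helpers like these the GET method would emit the quoted value and the HEAD method would strip the quotes before decoding.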

I used Telerik Fiddler to capture the GET response payload.

    HTTP/1.1 200 OK
    Transfer-Encoding: chunked
    Content-Type: application/json; charset=utf-8
    ETag: AAAAAAABrdE=
    Server: Microsoft-IIS/10.0
    X-Powered-By: ASP.NET
    Date: Sat, 26 Jun 2021 06:12:16 GMT

    136
    [
    {"id":5,"name":"Airline Novelties"},
    {"id":2,"name":"Clothing"},
    {"id":6,"name":"Computing Novelties"},
    {"id":8,"name":"Furry Footwear"},
    {"id":3,"name":"Mugs"},
    {"id":1,"name":"Novelty Items"},
    {"id":10,"name":"Packaging Material"},
    {"id":9,"name":"Toys"},
    {"id":4,"name":"T-Shirts"},
    {"id":7,"name":"USB Novelties"}
    ]
    0
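The 136 and 0 lines in the capture are not part of the JSON: with Transfer-Encoding: chunked, each chunk is preceded by its size in hexadecimal bytes, and a zero-size chunk marks the end of the body. A quick check that 0x136 is the size of the whole array, assuming the body was the compact JSON that System.Text.Json produces by default (the capture above shows one object per line for readability):

```csharp
using System;
using System.Text;
using System.Text.Json;

// The StockGroups list from the capture, serialised as compact JSON.
var stockGroups = new[]
{
    new { id = 5, name = "Airline Novelties" },
    new { id = 2, name = "Clothing" },
    new { id = 6, name = "Computing Novelties" },
    new { id = 8, name = "Furry Footwear" },
    new { id = 3, name = "Mugs" },
    new { id = 1, name = "Novelty Items" },
    new { id = 10, name = "Packaging Material" },
    new { id = 9, name = "Toys" },
    new { id = 4, name = "T-Shirts" },
    new { id = 7, name = "USB Novelties" },
};
string json = JsonSerializer.Serialize(stockGroups);

// The chunk-size line is the byte count of the chunk written in hexadecimal.
int size = Encoding.UTF8.GetByteCount(json);
Console.WriteLine($"{size} bytes = 0x{size:x}"); // 310 bytes = 0x136
```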

The HEAD method retrieves the maximum rowversion value from the StockGroups table and compares it with the eTag supplied in the request header. In a more complex scenario this could be a call to a local cache to see if a query result has been refreshed.

    [HttpHead]
    public async Task<ActionResult> Head(
        [Required]
        [FromHeader(Name = "ETag")]
        [MinLength(ETagBase64Length, ErrorMessage = "eTag length invalid too short")]
        [MaxLength(ETagBase64Length, ErrorMessage = "eTag length {0} invalid too long")] string eTag)
    {
        byte[] headerVersion = new byte[ETagBytesLength];

        // A 12 character Base64 string can decode to fewer than 8 bytes (e.g. "AAAAAAAAAA=="), so check the length too
        if (!Convert.TryFromBase64String(eTag, headerVersion, out int bytesWritten) || (bytesWritten != ETagBytesLength))
        {
            logger.LogInformation("eTag invalid format");

            return this.BadRequest("eTag invalid format");
        }

        try
        {
            using (SqlConnection db = new SqlConnection(this.connectionString))
            {
                byte[] databaseVersion = await db.ExecuteScalarAsync<byte[]>(sql: "SELECT MAX(Version) FROM [Warehouse].[StockGroups]", commandType: CommandType.Text);

                // MAX returns NULL if the table is empty
                if ((databaseVersion != null) && headerVersion.SequenceEqual(databaseVersion))
                {
                    return this.StatusCode(StatusCodes.Status304NotModified);
                }
            }
        }
        catch (SqlException ex)
        {
            logger.LogError(ex, "Retrieving StockGroups maximum rowversion");

            return this.StatusCode(StatusCodes.Status500InternalServerError);
        }

        return this.Ok();
    }
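The decision the HEAD method makes can be pulled out into a small, framework-free function so it can be unit tested without a database or web server. A sketch under that assumption: `HeadStatus` is a hypothetical helper that returns the status code rather than an ActionResult, rejects eTags that decode to fewer than 8 bytes, and treats a NULL maximum rowversion (an empty table) as a change.

```csharp
using System;
using System.Linq;

const int ETagBytesLength = 8;

// Hypothetical, framework-free version of the HEAD method's decision:
// 400 Bad Request for a malformed eTag, 304 Not Modified when it matches the
// current maximum rowversion, otherwise 200 OK so the client knows to re-GET.
int HeadStatus(string eTag, byte[]? databaseVersion)
{
    byte[] headerVersion = new byte[ETagBytesLength];

    // Check how many bytes were written as well as whether decoding succeeded.
    if (!Convert.TryFromBase64String(eTag, headerVersion, out int bytesWritten) || bytesWritten != ETagBytesLength)
    {
        return 400;
    }

    // MAX(Version) is NULL when the table is empty; treat that as "changed".
    if (databaseVersion != null && headerVersion.SequenceEqual(databaseVersion))
    {
        return 304;
    }

    return 200;
}

byte[] latestVersion = { 0x00, 0x00, 0x00, 0x00, 0x00, 0x01, 0xAD, 0xD1 };
Console.WriteLine(HeadStatus("AAAAAAABrdE=", latestVersion)); // 304
```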

I used Fiddler to capture the HEAD response, a 304 Not Modified.

    HTTP/1.1 304 Not Modified
    Server: Microsoft-IIS/10.0
    X-Powered-By: ASP.NET
    Date: Sat, 26 Jun 2021 22:09:02 GMT

I then modified a StockGroup and the HEAD response changed to 200 OK, indicating the local cache should be updated with a GET.

    HTTP/1.1 200 OK
    Transfer-Encoding: chunked
    Server: Microsoft-IIS/10.0
    X-Powered-By: ASP.NET
    Date: Sat, 26 Jun 2021 22:09:59 GMT

Combined with the conditional request headers (If-None-Match and If-Modified-Since for validating cached responses, If-Match and If-Unmodified-Since for preconditions such as preventing lost updates), this approach allows web and client-side caches to reuse previously requested results when there have been no changes. This can significantly reduce network traffic and the number of expensive queries the server has to run.
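The HEAD method in this post reads the eTag from a custom ETag request header. The standard mechanism sends it back in an If-None-Match header, which RFC 7232 evaluates with the weak comparison function (a W/ prefix is ignored) and which may hold a comma-separated list of tags or `*`. A minimal sketch of that evaluation for a GET or HEAD; `ShouldReturnNotModified` and `OpaqueTag` are hypothetical helpers and the parsing is deliberately simplified.

```csharp
using System;

// Weak comparison ignores a leading "W/" and compares the quoted opaque-tags.
static string OpaqueTag(string entityTag)
{
    string tag = entityTag.Trim();
    return tag.StartsWith("W/", StringComparison.Ordinal) ? tag.Substring(2) : tag;
}

// Returns true when a GET or HEAD should be answered with 304 Not Modified.
// Assumes the resource currently exists (so "*" matches) and that no entity-tag
// contains a comma, which holds for quoted Base64 values.
static bool ShouldReturnNotModified(string? ifNoneMatch, string currentEntityTag)
{
    if (string.IsNullOrWhiteSpace(ifNoneMatch))
    {
        return false; // no condition: send the full 200 response
    }

    if (ifNoneMatch.Trim() == "*")
    {
        return true;
    }

    foreach (string candidate in ifNoneMatch.Split(','))
    {
        if (OpaqueTag(candidate) == OpaqueTag(currentEntityTag))
        {
            return true;
        }
    }

    return false;
}

Console.WriteLine(ShouldReturnNotModified("W/\"AAAAAAABrdE=\"", "\"AAAAAAABrdE=\"")); // True
```

Because browsers and proxies already send If-None-Match when they hold a cached response with an ETag, supporting it lets them revalidate without any custom client code.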

As part of my testing I modified the eTag so it was invalid (to check the Convert.ToBase64String and Convert.TryFromBase64String error handling) and the response was much smaller than I expected.

    HTTP/1.1 400 Bad Request
    Content-Length: 240
    Content-Type: application/problem+json; charset=utf-8
    Server: Microsoft-IIS/10.0
    X-Powered-By: ASP.NET
    Date: Sat, 26 Jun 2021 06:28:11 GMT

This was unlike the helpful validation messages returned by the GET method of the StockItems pagination example code:

    {
      "type":"https://tools.ietf.org/html/rfc7231#section-6.5.1",
      "title":"One or more validation errors occurred.",
      "status":400,
      "traceId":"00-bd68c94bf05f5c4ca8752011d6a60533-48e966211dec4847-00",
      "errors":
      {
        "PageSize":["PageSize must be present and greater than 0"],
        "PageNumber":["PageNumber must be present and greater than 0"]
      }
    }

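The response was small because a response to a HEAD request never includes a message body, so the 240 byte application/problem+json body indicated by the Content-Length header was not returned. The lack of diagnostic information was not helpful and I’ll explore this further in a future post.

Using MAX(Version) has one limitation: deleting a row only changes the maximum rowversion if the deleted row was the most recently inserted or updated one, so most deletions go undetected. I often work on Fintech applications which are “insert only”, where nothing is deleted, just marked as inactive/read-only (an update, so the rowversion changes), so this approach is viable.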
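Deletions matter because MAX(Version) only moves when a row is inserted or updated; removing a row leaves the maximum unchanged unless that row held it. A sketch with an in-memory stand-in for the table (the counter values and the IsActive soft-delete flag are hypothetical) shows a hard delete going unnoticed while a soft delete, being an UPDATE, changes the eTag.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in for [Warehouse].[StockGroups]: StockGroupID -> rowversion,
// with the rowversion modelled as a database-wide counter that only ever increases.
var versions = new Dictionary<int, ulong> { [1] = 101, [2] = 102, [3] = 103 };
ulong counter = 103;

// An INSERT or UPDATE (including a soft delete such as SET IsActive = 0) stamps the row
// with the next counter value, as SQL Server does for a rowversion column.
void Touch(int id) => versions[id] = ++counter;

// Equivalent of SELECT MAX(Version) FROM [Warehouse].[StockGroups].
ulong MaxVersion() => versions.Values.Max();

ulong before = MaxVersion();

versions.Remove(1);                          // hard DELETE of a row that is not the latest
Console.WriteLine(MaxVersion() == before);   // True: the eTag does not change

Touch(2);                                    // soft delete, i.e. an UPDATE of row 2
Console.WriteLine(MaxVersion() == before);   // False: the eTag changes
```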