I have used Entity Framework Core a “full fat” Object Relational Mapper (ORM) for a couple of projects, and it supports mapping to spatial data types using the NetTopologySuite library. On Stackoverflow there was some discussion about Dapper’s spatial support so I thought I would try it out.

The DapperSpatialNetTopologySuite project has Dapper SQLMapper TypeHandler, Microsoft SQL Server stored procedure name and ASP.NET Core Minimal API for each location column handler implementation.

app.MapGet("/Spatial/NearbyGeography", async (double latitude, double longitude, int distance, [FromServices] IDapperContext dapperContext) =>

{

var origin = new Point(longitude, latitude) { SRID = 4326 };

using (var connection = dapperContext.ConnectionCreate())

{

var results = await connection.QueryWithRetryAsync<Model.ListingNearbyListGeographyDto>("ListingsSpatialNearbyNTS_____", new { origin, distance }, commandType: CommandType.StoredProcedure);

return results;

}

})

.Produces<IList<Model.ListingNearbyListGeographyDto>>(StatusCodes.Status200OK)

.Produces<ProblemDetails>(StatusCodes.Status400BadRequest)

.WithOpenApi();

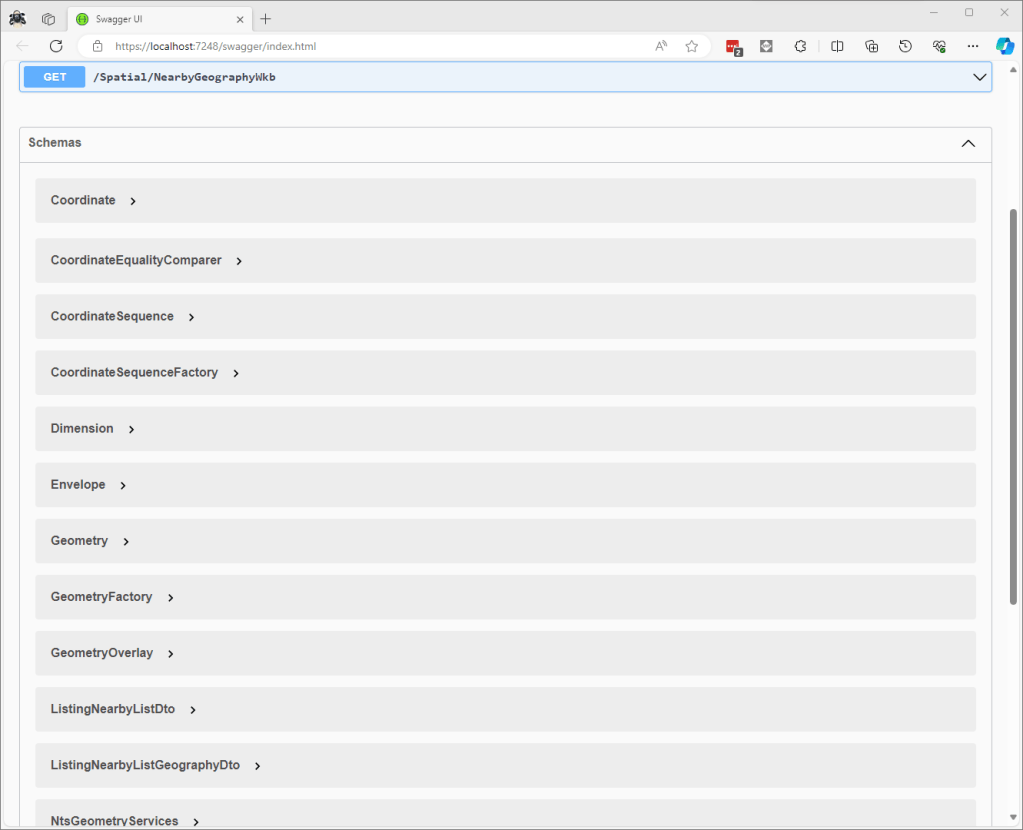

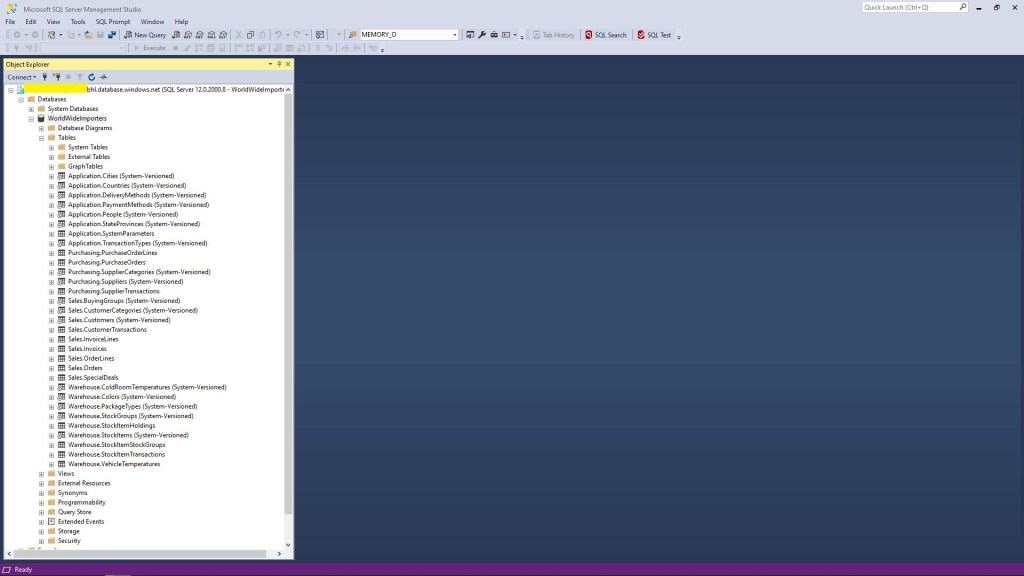

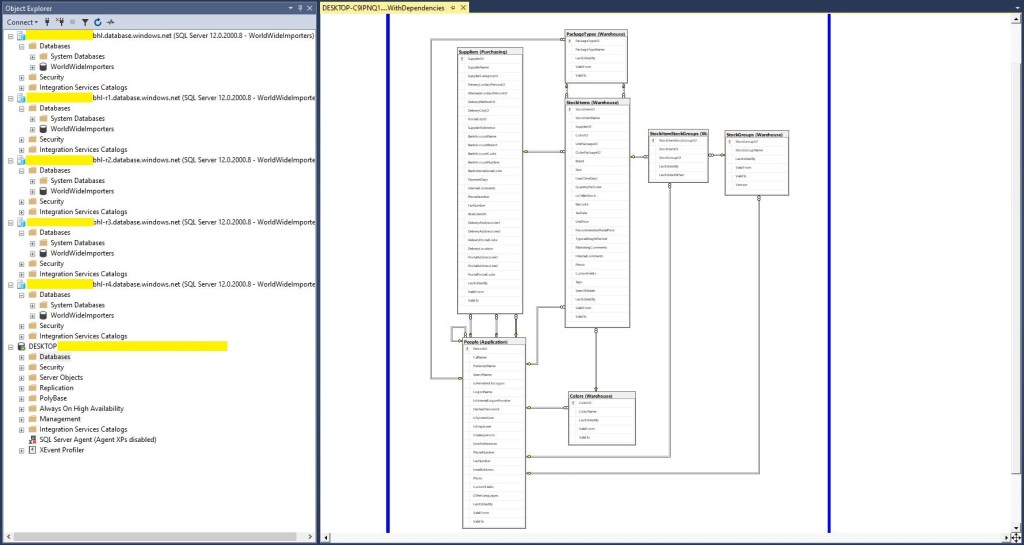

After adding the NetTopologySuite spatial library to the project the schemas list got a lot bigger.

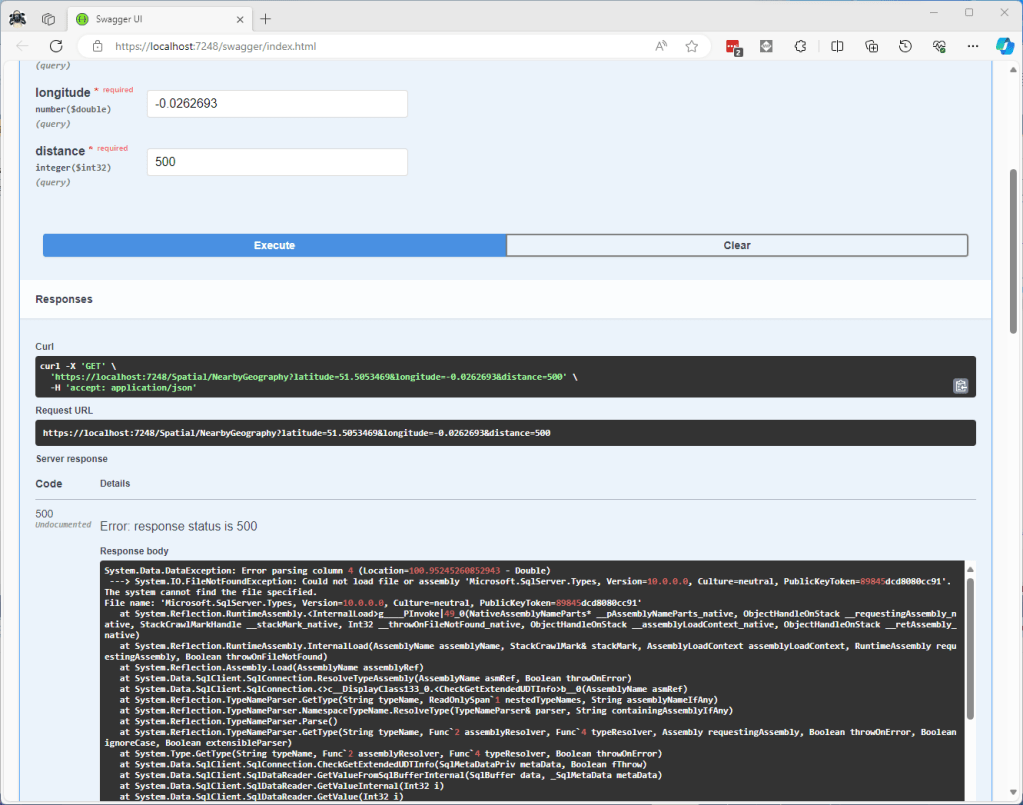

My first attempt inspired by a really old Marc Gravell post and the NetTopologySuite.IO.SqlServerBytes documentation didn’t work.

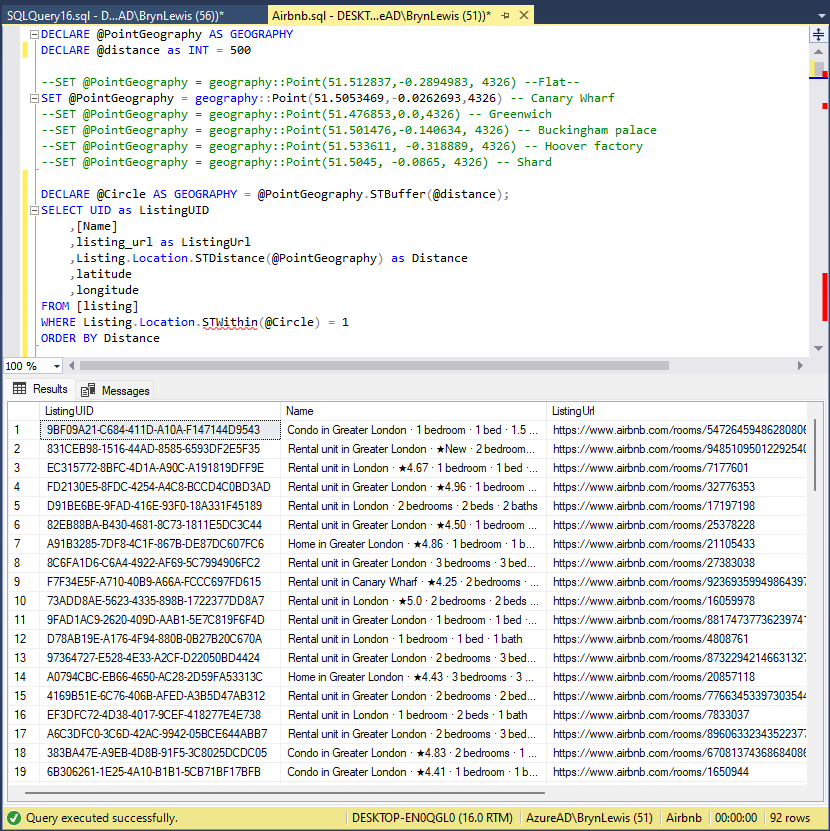

CREATE PROCEDURE [dbo].[ListingsSpatialNearbyNTSLocation]

@Origin AS GEOGRAPHY,

@distance AS INTEGER

AS

BEGIN

DECLARE @Circle AS GEOGRAPHY = @Origin.STBuffer(@distance);

SELECT TOP(50) UID AS ListingUID

,[Name]

,listing_url as ListingUrl

,Listing.Location.STDistance(@Origin) as Distance

,Listing.Location

FROM Listing

WHERE (Listing.Location.STWithin(@Circle) = 1)

ORDER BY Distance

END

I could see the Dapper SQLMapper TypeHandler for the @origin parameter getting called but the not locations

Then found a Brice Lambson post about how to use the NetTopologySuite.IO.SqlServerBytes library to read and write geography and geometry columns.

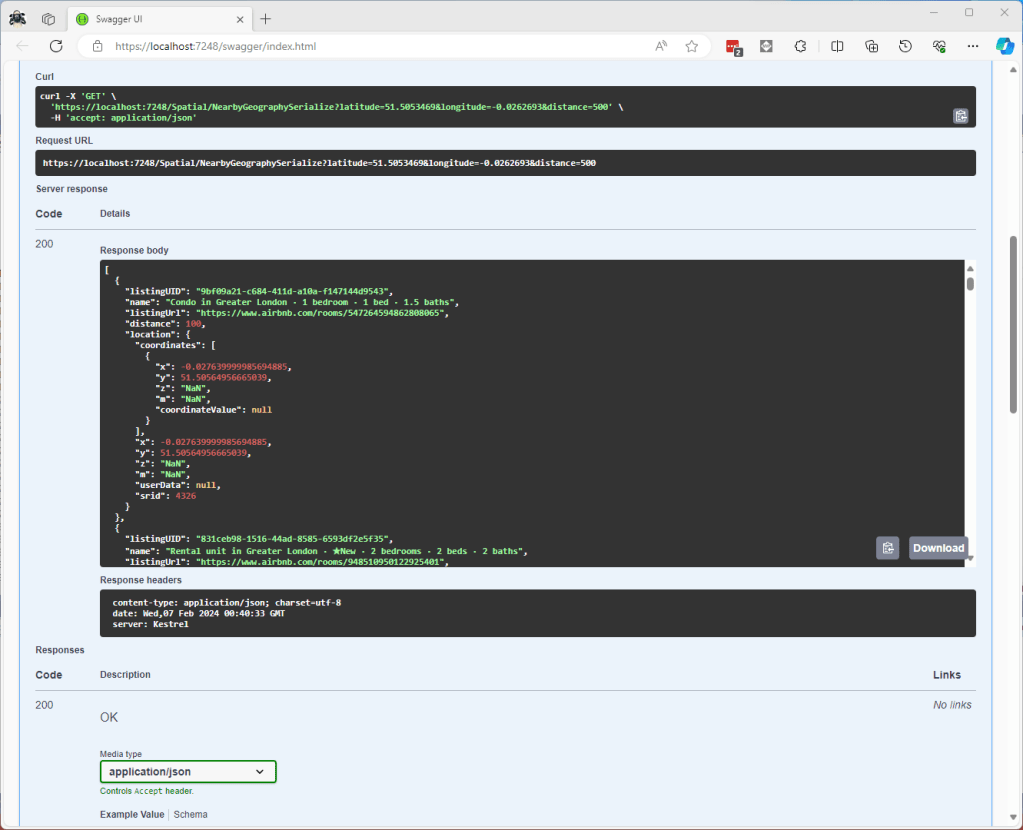

CREATE PROCEDURE [dbo].[ListingsSpatialNearbyNTSSerialize]

@Origin AS GEOGRAPHY,

@distance AS INTEGER

AS

BEGIN

DECLARE @Circle AS GEOGRAPHY = @Origin.STBuffer(@distance);

SELECT TOP(50) UID AS ListingUID

,[Name]

,listing_url as ListingUrl

,Listing.Location.STDistance(@Origin) as Distance

,Location.Serialize() as Location

FROM [listing]

WHERE (Location.STWithin(@Circle) = 1)

ORDER BY Distance

END

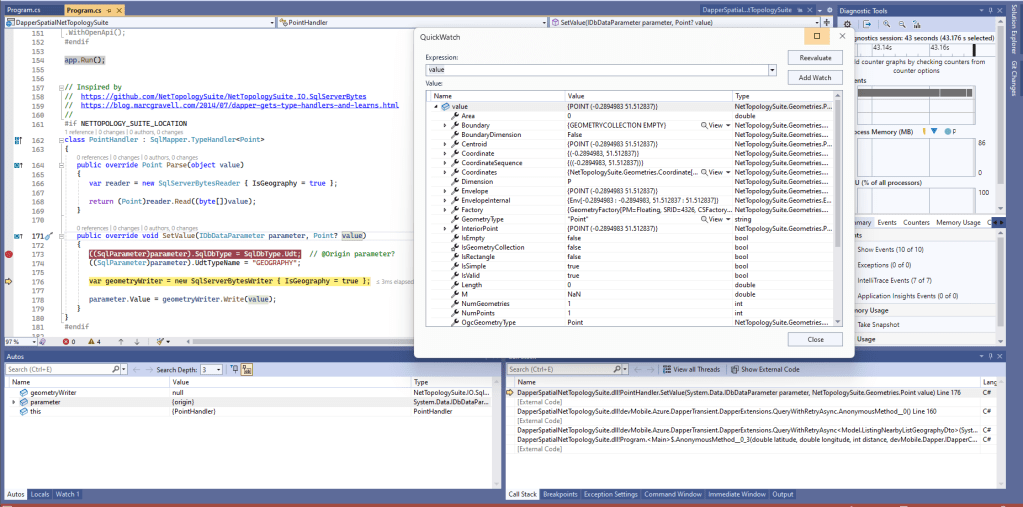

class PointHandlerSerialise : SqlMapper.TypeHandler<Point>

{

public override Point Parse(object value)

{

var reader = new SqlServerBytesReader { IsGeography = true };

return (Point)reader.Read((byte[])value);

}

public override void SetValue(IDbDataParameter parameter, Point? value)

{

((SqlParameter)parameter).SqlDbType = SqlDbType.Udt; // @Origin parameter?

((SqlParameter)parameter).UdtTypeName = "GEOGRAPHY";

var writer = new SqlServerBytesWriter { IsGeography = true };

parameter.Value = writer.Write(value);

}

}

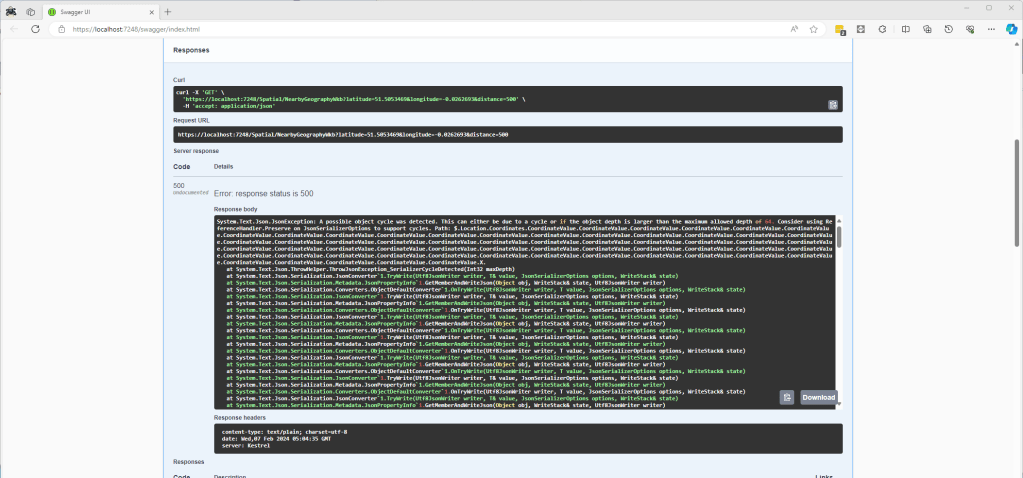

Once the location column serialisation was working (I could see a valid response in the debugger) the generation of the response was failing with a “System.Text.Json.JsonException: A possible object cycle was detected. This can either be due to a cycle or if the object depth is larger than the maximum allowed depth of 64″.

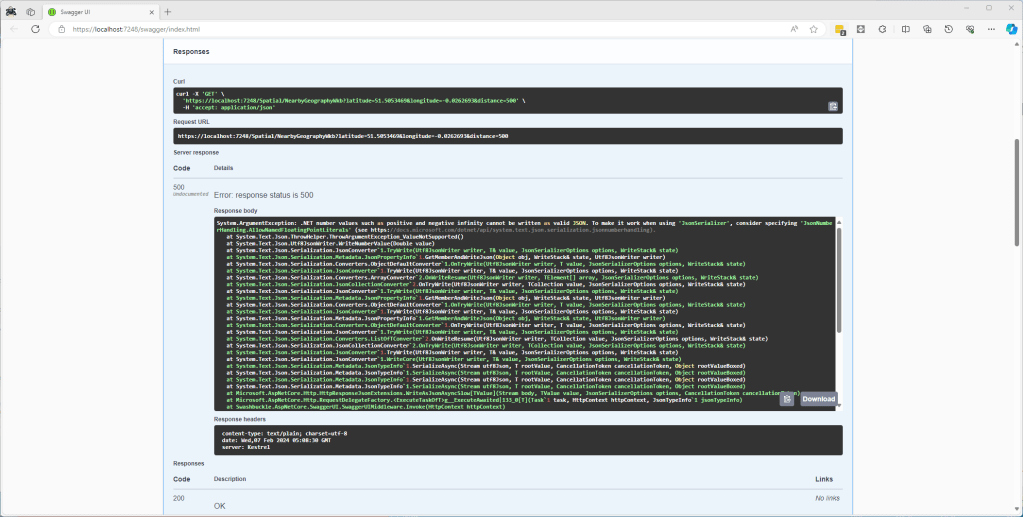

After fixing that issue the response generation failed with “System.ArgumentException: .NET number values such as positive and negative infinity cannot be written as valid JSON.”

Fixing these two issues required adjustment of two HttpJsonOptions

//...

builder.Services.ConfigureHttpJsonOptions(options =>

{

options.SerializerOptions.ReferenceHandler = ReferenceHandler.IgnoreCycles;

options.SerializerOptions.NumberHandling = JsonNumberHandling.AllowNamedFloatingPointLiterals;

});

var app = builder.Build();

//...

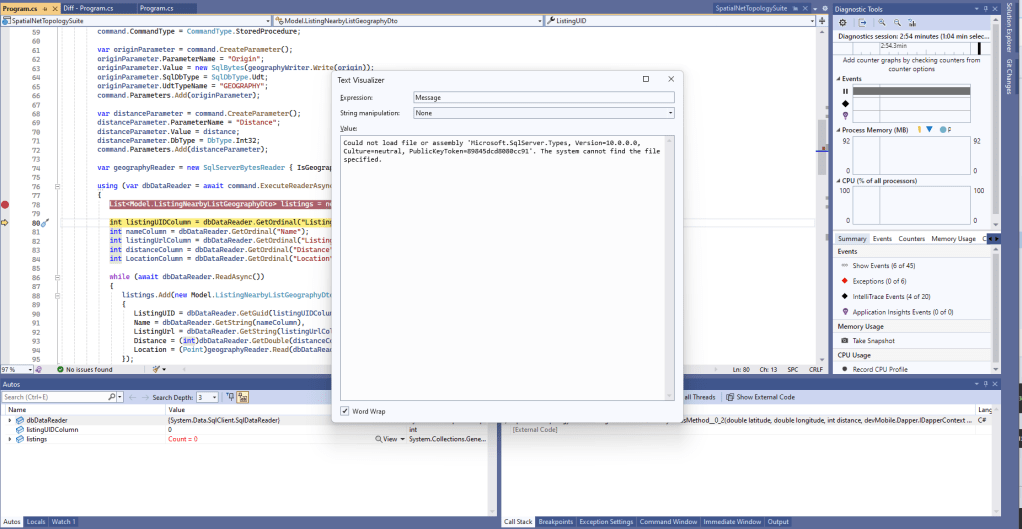

After digging into the Dapper source code I wondered how ADO.Net handled loading Microsoft.SQLServer.Types library

app.MapGet("/Listing/Search/Ado", async (double latitude, double longitude, int distance, [FromServices] IDapperContext dapperContext) =>

{

var origin = new Point(longitude, latitude) { SRID = 4326 };

using (SqlConnection connection = (SqlConnection)dapperContext.ConnectionCreate())

{

await connection.OpenAsync();

var geographyWriter = new SqlServerBytesWriter { IsGeography = true };

using (SqlCommand command = connection.CreateCommand())

{

command.CommandText = "ListingsSpatialNearbyNTSLocation";

command.CommandType = CommandType.StoredProcedure;

var originParameter = command.CreateParameter();

originParameter.ParameterName = "Origin";

originParameter.Value = new SqlBytes(geographyWriter.Write(origin));

originParameter.SqlDbType = SqlDbType.Udt;

originParameter.UdtTypeName = "GEOGRAPHY";

command.Parameters.Add(originParameter);

var distanceParameter = command.CreateParameter();

distanceParameter.ParameterName = "Distance";

distanceParameter.Value = distance;

distanceParameter.DbType = DbType.Int32;

command.Parameters.Add(distanceParameter);

var geographyReader = new SqlServerBytesReader { IsGeography = true };

using (var dbDataReader = await command.ExecuteReaderAsync())

{

List<Model.ListingNearbyListGeographyDto> listings = new List<Model.ListingNearbyListGeographyDto>();

int listingUIDColumn = dbDataReader.GetOrdinal("ListingUID");

int nameColumn = dbDataReader.GetOrdinal("Name");

int listingUrlColumn = dbDataReader.GetOrdinal("ListingUrl");

int distanceColumn = dbDataReader.GetOrdinal("Distance");

int LocationColumn = dbDataReader.GetOrdinal("Location");

while (await dbDataReader.ReadAsync())

{

listings.Add(new Model.ListingNearbyListGeographyDto

{

ListingUID = dbDataReader.GetGuid(listingUIDColumn),

Name = dbDataReader.GetString(nameColumn),

ListingUrl = dbDataReader.GetString(listingUrlColumn),

Distance = (int)dbDataReader.GetDouble(distanceColumn),

Location = (Point)geographyReader.Read(dbDataReader.GetSqlBytes(LocationColumn).Value)

});

}

return listings;

}

}

}

})

.Produces<IList<Model.ListingNearbyListGeographyDto>>(StatusCodes.Status200OK)

.Produces<ProblemDetails>(StatusCodes.Status400BadRequest)

.WithOpenApi();

The ADO.Net implementation worked and didn’t produce any exceptions.

In the Visual Studio 2022 debugger I could see the Microsoft.SQLServer.Types exception but this wasn’t “bubbling” up to the response generation code.

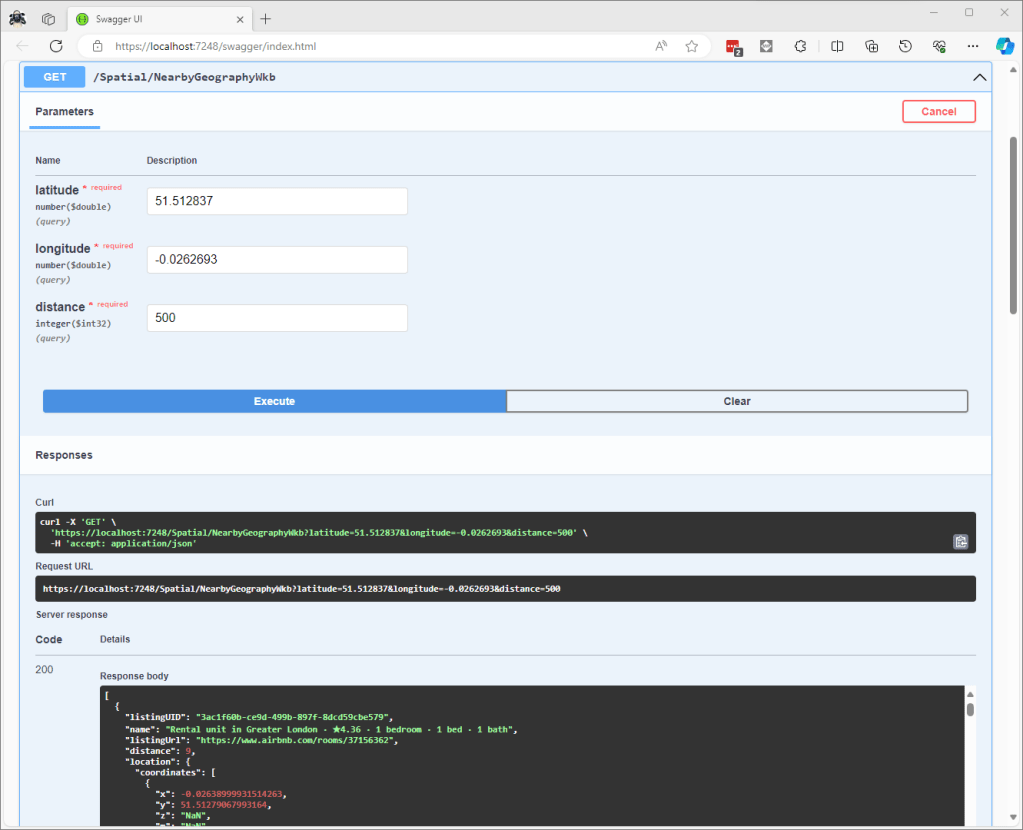

The location columns could also be returned as Open Geospatial Consortium (OGC) Well-Known Binary (WKB) format using the STAsBinary method.

CREATE PROCEDURE [dbo].[ListingsSpatialNearbyNTSWkb]

@Origin AS GEOGRAPHY,

@distance AS INTEGER

AS

BEGIN

DECLARE @Circle AS GEOGRAPHY = @Origin.STBuffer(@distance);

SELECT TOP(50) UID AS ListingUID

,[Name]

,listing_url as ListingUrl

,Listing.Location.STDistance(@Origin) as Distance

,Location.STAsBinary() as Location

FROM [listing]

WHERE (Location.STWithin(@Circle) = 1)

ORDER BY Distance

END

Then converted to and from NTS Point values using WKBReader and SqlServerBytesWriter

SqlMapper.AddTypeHandler(new PointHandlerWkb());

//...

class PointHandlerWkb : SqlMapper.TypeHandler<Point>

{

public override Point Parse(object value)

{

var reader = new WKBReader();

return (Point)reader.Read((byte[])value);

}

public override void SetValue(IDbDataParameter parameter, Point? value)

{

((SqlParameter)parameter).SqlDbType = SqlDbType.Udt; // @Origin parameter?

((SqlParameter)parameter).UdtTypeName = "GEOGRAPHY";

var geometryWriter = new SqlServerBytesWriter { IsGeography = true };

parameter.Value = geometryWriter.Write(value);

}

}

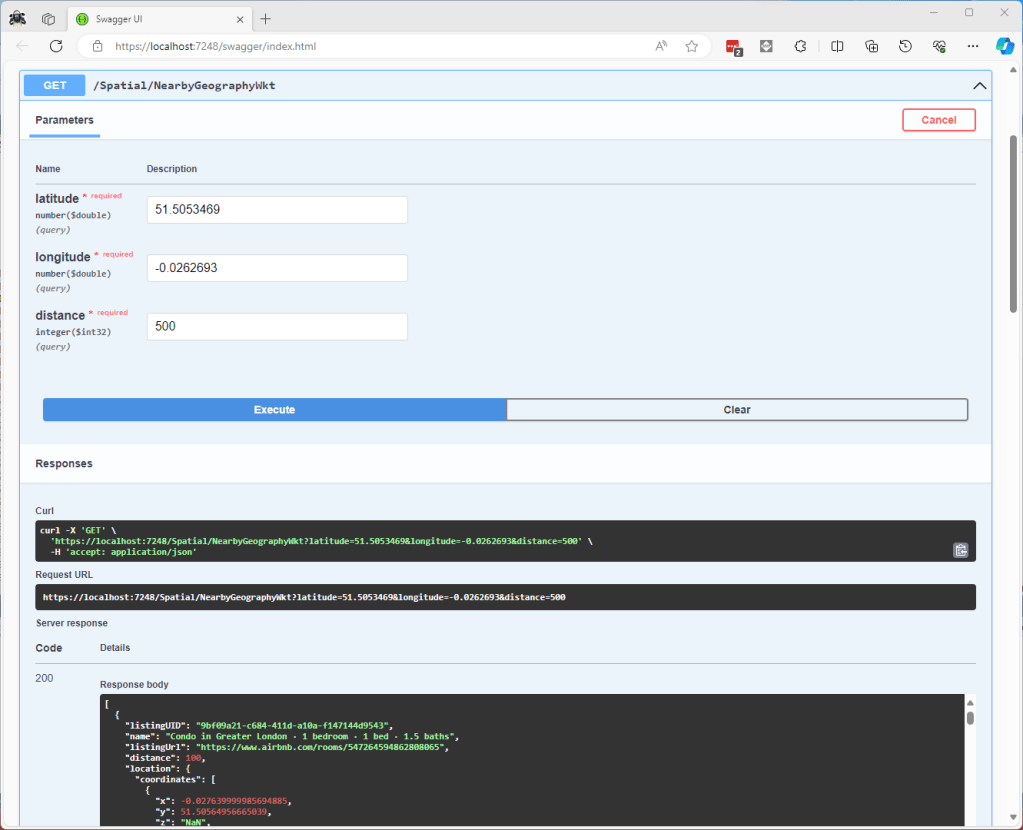

The location columns could also be returned as Open Geospatial Consortium (OGC) Well Known Text(WKT) format using the STAsText and SqlServerBytesWriter;

CREATE PROCEDURE [dbo].[ListingsSpatialNearbyNTSWkt]

@Origin AS GEOGRAPHY,

@distance AS INTEGER

AS

BEGIN

DECLARE @Circle AS GEOGRAPHY = @Origin.STBuffer(@distance);

SELECT TOP(50) UID AS ListingUID

,[Name]

,listing_url as ListingUrl

,Listing.Location.STDistance(@Origin) as Distance

,Location.STAsText() as Location

FROM [listing]

WHERE (Location.STWithin(@Circle) = 1)

ORDER BY Distance

END

Then converted to and from NTS Point values using WKTReader and SqlServerBytesWriter

class PointHandlerWkt : SqlMapper.TypeHandler<Point>

{

public override Point Parse(object value)

{

WKTReader wktReader = new WKTReader();

return (Point)wktReader.Read(value.ToString());

}

public override void SetValue(IDbDataParameter parameter, Point? value)

{

((SqlParameter)parameter).SqlDbType = SqlDbType.Udt; // @Origin parameter?

((SqlParameter)parameter).UdtTypeName = "GEOGRAPHY";

parameter.Value = new SqlServerBytesWriter() { IsGeography = true }.Write(value);

}

}

I have focused on getting the spatial queries to work and will stress/performance test my implementations in a future post. I will also revisit the /Spatial/NearbyGeography method to see if I can get it to work without “Location.Serialize() as Location”.

Downloaded Microsoft.Data.SqlClient source code and in SqlConnection.cs this doesn’t help….

// UDT SUPPORT

private Assembly ResolveTypeAssembly(AssemblyName asmRef, bool throwOnError)

{

Debug.Assert(TypeSystemAssemblyVersion != null, "TypeSystemAssembly should be set !");

if (string.Equals(asmRef.Name, "Microsoft.SqlServer.Types", StringComparison.OrdinalIgnoreCase))

{

if (asmRef.Version != TypeSystemAssemblyVersion && SqlClientEventSource.Log.IsTraceEnabled())

{

SqlClientEventSource.Log.TryTraceEvent("SqlConnection.ResolveTypeAssembly | SQL CLR type version change: Server sent {0}, client will instantiate {1}", asmRef.Version, TypeSystemAssemblyVersion);

}

asmRef.Version = TypeSystemAssemblyVersion;

}

try

{

return Assembly.Load(asmRef);

}

catch (Exception e)

{

if (throwOnError || !ADP.IsCatchableExceptionType(e))

{

throw;

}

else

{

return null;

}

}

}