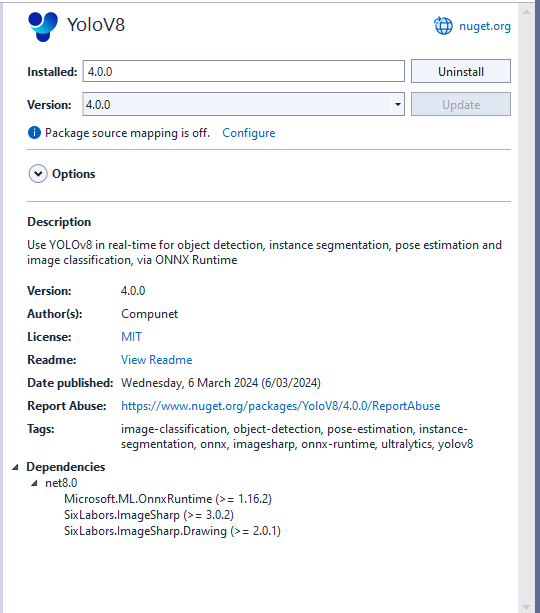

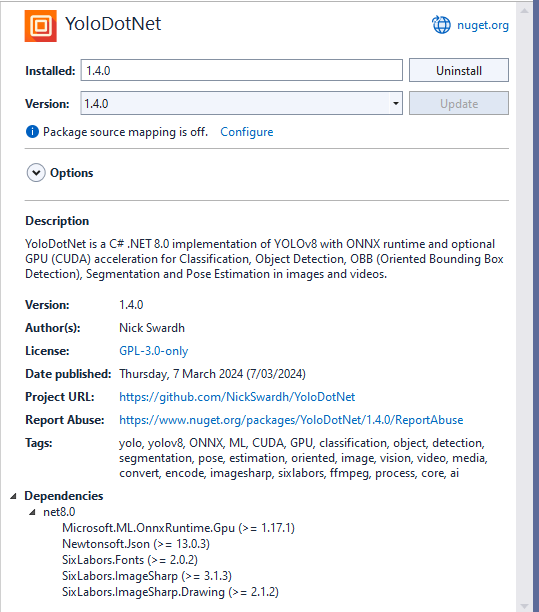

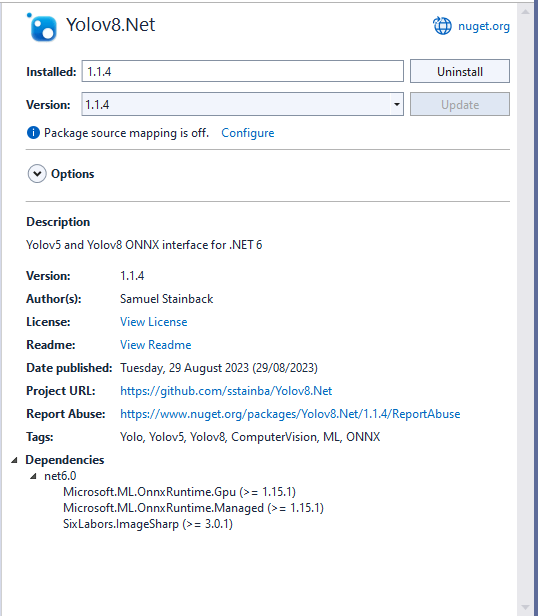

After building some proof-of-concept applications, I decided to use the YoloV8 NuGet package by dme-compunet because it supports async/await, and code with async/await is always better (yeah right).

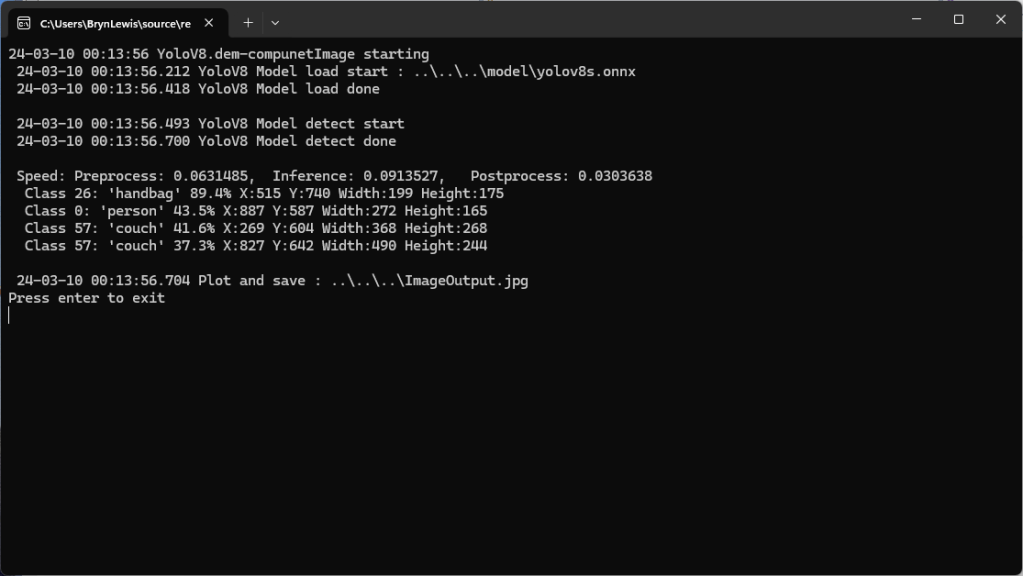

The YoloV8.Detect.SecurityCamera.File sample downloads images from the security camera to the local file system, then calls DetectAsync with the local file path.

private static async void ImageUpdateTimerCallback(object state)

{

//...

try

{

Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} YoloV8 Security Camera Image File processing start");

using (Stream cameraStream = await _httpClient.GetStreamAsync(_applicationSettings.CameraUrl))

using (Stream fileStream = System.IO.File.Create(_applicationSettings.ImageFilepath))

{

await cameraStream.CopyToAsync(fileStream);

}

DetectionResult result = await _predictor.DetectAsync(_applicationSettings.ImageFilepath);

Console.WriteLine($"Speed: {result.Speed}");

foreach (var prediction in result.Boxes)

{

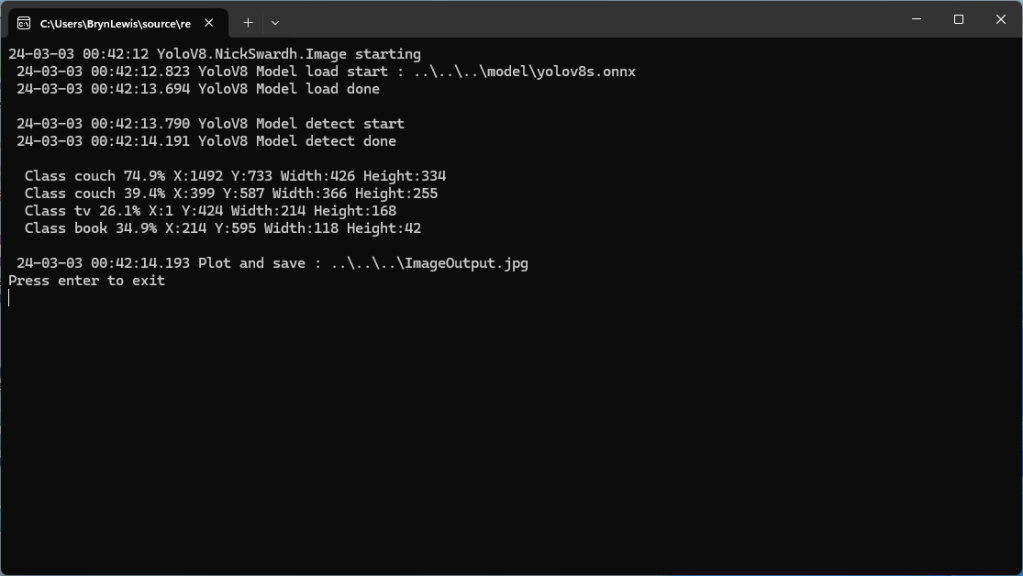

Console.WriteLine($" Class {prediction.Class} {(prediction.Confidence * 100.0):f1}% X:{prediction.Bounds.X} Y:{prediction.Bounds.Y} Width:{prediction.Bounds.Width} Height:{prediction.Bounds.Height}");

}

Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} YoloV8 Security Camera Image processing done");

}

catch (Exception ex)

{

Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss} YoloV8 Security camera image download or YoloV8 prediction failed {ex.Message}");

}

//...

}

The YoloV8.Detect.SecurityCamera.Bytes sample downloads each image from the security camera as an array of bytes, then calls DetectAsync with that array.

```csharp
private static async void ImageUpdateTimerCallback(object state)
{
   //...

   try
   {
      Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} YoloV8 Security Camera Image Bytes processing start");

      byte[] bytes = await _httpClient.GetByteArrayAsync(_applicationSettings.CameraUrl);

      DetectionResult result = await _predictor.DetectAsync(bytes);

      Console.WriteLine($"Speed: {result.Speed}");

      foreach (var prediction in result.Boxes)
      {
         Console.WriteLine($" Class {prediction.Class} {(prediction.Confidence * 100.0):f1}% X:{prediction.Bounds.X} Y:{prediction.Bounds.Y} Width:{prediction.Bounds.Width} Height:{prediction.Bounds.Height}");
      }

      Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} YoloV8 Security Camera Image processing done");
   }
   catch (Exception ex)
   {
      Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss} YoloV8 Security camera image download or YoloV8 prediction failed {ex.Message}");
   }

   //...
}
```

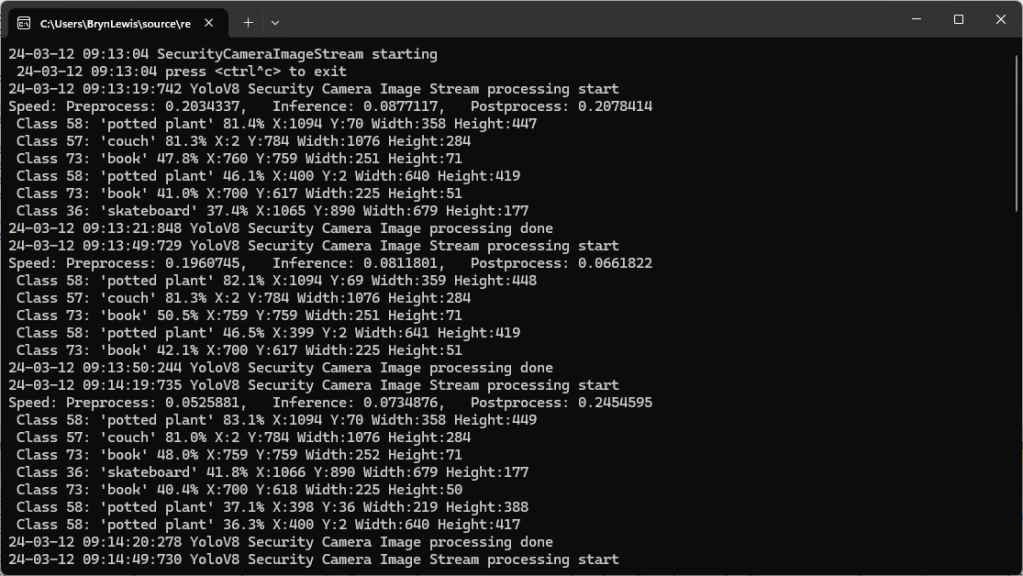

The YoloV8.Detect.SecurityCamera.Stream sample “streams” the image from the security camera to DetectAsync.

```csharp
private static async void ImageUpdateTimerCallback(object state)
{
   // ...

   try
   {
      Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} YoloV8 Security Camera Image Stream processing start");

      DetectionResult result;

      using (System.IO.Stream cameraStream = await _httpClient.GetStreamAsync(_applicationSettings.CameraUrl))
      {
         result = await _predictor.DetectAsync(cameraStream);
      }

      Console.WriteLine($"Speed: {result.Speed}");

      foreach (var prediction in result.Boxes)
      {
         Console.WriteLine($" Class {prediction.Class} {(prediction.Confidence * 100.0):f1}% X:{prediction.Bounds.X} Y:{prediction.Bounds.Y} Width:{prediction.Bounds.Width} Height:{prediction.Bounds.Height}");
      }

      Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} YoloV8 Security Camera Image processing done");
   }
   catch (Exception ex)
   {
      Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss} YoloV8 Security camera image download or YoloV8 prediction failed {ex.Message}");
   }

   //...
}
```

The ImageSelector parameter of DetectAsync caught my attention because I hadn't seen this approach used before. The developers who wrote the NuGet package are definitely smarter than me, so I figured I might learn something useful by digging deeper.

My sample object detection applications all call this DetectAsync extension method

```csharp
public static async Task<DetectionResult> DetectAsync(this YoloV8 predictor, ImageSelector selector)
{
   return await Task.Run(() => predictor.Detect(selector));
}
```

which then invokes Detect, and Detect in turn calls the predictor's Run method

```csharp
public static DetectionResult Detect(this YoloV8 predictor, ImageSelector selector)
{
   predictor.ValidateTask(YoloV8Task.Detect);

   // 'image' is the original image Size that Run passes in (originSize), not the image itself
   return predictor.Run(selector, (outputs, image, timer) =>
   {
      var output = outputs[0].AsTensor<float>();

      var parser = new DetectionOutputParser(predictor.Metadata, predictor.Parameters);

      var boxes = parser.Parse(output, image);

      var speed = timer.Stop();

      return new DetectionResult
      {
         Boxes = boxes,
         Image = image,
         Speed = speed,
      };
   });
}

// Instance method on the YoloV8 predictor (called as predictor.Run above)
public TResult Run<TResult>(ImageSelector selector, PostprocessContext<TResult> postprocess) where TResult : YoloV8Result
{
   // The image is loaded (and auto-oriented) before the timer starts
   using var image = selector.Load(true);

   var originSize = image.Size;

   var timer = new SpeedTimer();

   timer.StartPreprocess();

   var input = Preprocess(image);

   var inputs = MapNamedOnnxValues([input]);

   timer.StartInference();

   using var outputs = Infer(inputs);

   var list = new List<NamedOnnxValue>(outputs);

   timer.StartPostprocess();

   return postprocess(list, originSize, timer);
}
```
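Reading DetectAsync again, it doesn't make the inference itself asynchronous: Task.Run just queues the synchronous Detect (and therefore Run) onto a thread-pool thread. The sketch below reproduces that async-over-sync shape using only the base class library. SlowDetect and SlowDetectAsync are hypothetical stand-ins (not part of the YoloV8 package), with Thread.Sleep playing the part of the CPU-bound inference.

```csharp
using System.Threading;
using System.Threading.Tasks;

public static class AsyncOverSync
{
   // Hypothetical stand-in for the synchronous, CPU-bound Detect call.
   // It also reports whether it ran on a thread-pool thread.
   public static (int Result, bool RanOnThreadPool) SlowDetect(int input)
   {
      Thread.Sleep(50); // simulated inference: blocks whichever thread runs it
      return (input * 2, Thread.CurrentThread.IsThreadPoolThread);
   }

   // Same shape as the DetectAsync extension method: the synchronous work is
   // queued to the thread pool with Task.Run and the caller awaits the result.
   public static async Task<(int Result, bool RanOnThreadPool)> SlowDetectAsync(int input)
   {
      return await Task.Run(() => SlowDetect(input));
   }
}
```

Awaiting SlowDetectAsync frees the caller while the work runs, but a thread-pool thread is still blocked for the whole "inference", so with this shape async/await mainly keeps callers responsive rather than making detection any faster.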

It looks like most of the image-loading magic of the ImageSelector class is implemented using the SixLabors ImageSharp library…

```csharp
public class ImageSelector<TPixel> where TPixel : unmanaged, IPixel<TPixel>
{
   private readonly Func<Image<TPixel>> _factory;

   public ImageSelector(Image image)
   {
      _factory = image.CloneAs<TPixel>;
   }

   public ImageSelector(string path)
   {
      _factory = () => Image.Load<TPixel>(path);
   }

   public ImageSelector(byte[] data)
   {
      _factory = () => Image.Load<TPixel>(data);
   }

   public ImageSelector(Stream stream)
   {
      _factory = () => Image.Load<TPixel>(stream);
   }

   internal Image<TPixel> Load(bool autoOrient)
   {
      var image = _factory();

      if (autoOrient)
         image.Mutate(x => x.AutoOrient());

      return image;
   }

   public static implicit operator ImageSelector<TPixel>(Image image) => new(image);

   public static implicit operator ImageSelector<TPixel>(string path) => new(path);

   public static implicit operator ImageSelector<TPixel>(byte[] data) => new(data);

   public static implicit operator ImageSelector<TPixel>(Stream stream) => new(stream);
}
```
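The pattern worth borrowing is the combination of a deferred Func<T> factory with implicit conversion operators: a path, a byte array, a stream (or an Image) can be passed wherever the selector is expected, and nothing is read until Load is called. That is presumably how the File, Bytes and Stream samples can all hand their different inputs to the same DetectAsync method. Below is a minimal sketch of the same pattern using only the base class library; TextSelector and TextProcessor are hypothetical types that load text rather than images and are not part of the YoloV8 package.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical selector mirroring ImageSelector<TPixel>: each constructor
// captures a factory, and the actual read is deferred until Load().
public sealed class TextSelector
{
   private readonly Func<string> _factory;

   public TextSelector(string path) => _factory = () => File.ReadAllText(path);

   public TextSelector(byte[] data) => _factory = () => Encoding.UTF8.GetString(data);

   public TextSelector(Stream stream) => _factory = () =>
   {
      // leaveOpen: true so the caller still owns (and disposes) the stream
      using var reader = new StreamReader(stream, Encoding.UTF8, true, 1024, leaveOpen: true);
      return reader.ReadToEnd();
   };

   // No I/O happens until this is called
   public string Load() => _factory();

   // The implicit conversions let callers pass the raw input type directly
   public static implicit operator TextSelector(string path) => new(path);
   public static implicit operator TextSelector(byte[] data) => new(data);
   public static implicit operator TextSelector(Stream stream) => new(stream);
}

public static class TextProcessor
{
   // One signature accepts a file path, a byte array, or a stream
   public static int CountWords(TextSelector selector) =>
      selector.Load().Split(new[] { ' ', '\t', '\r', '\n' }, StringSplitOptions.RemoveEmptyEntries).Length;
}
```

One caveat of this design: with an implicit conversion from string, a string argument is always treated as a file path, never as content. Also, a selector built from a stream reads it when Load runs, so the stream must still be open at that point (in the Stream sample the using block stays open across the await, so it is), and a non-seekable stream can only be consumed once.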

I learnt something new; I must be careful to apply it only where it adds value.