While exploring some of the functionality of MiniProfiler there were some 3rd party examples which caught my attention.

using (SqlConnection connection = new SqlConnection(@"Data Source=...; Initial Catalog=SyncDB; Trusted_Connection=Yes"))

{

using (ProfiledDbConnection profiledDbConnection = new ProfiledDbConnection(connection, MiniProfiler.Current))

{

if (profiledDbConnection.State != System.Data.ConnectionState.Open)

profiledDbConnection.Open();

using (SqlCommand command = new SqlCommand("Select * From Authors", connection))

{

using (ProfiledDbCommand profiledDbCommand = new ProfiledDbCommand(command, connection, MiniProfiler.Current))

{

var data = profiledDbCommand.ExecuteReader();

//Write code here to populate the list of Authors

}

}

}

“Inspired” by code like this my first attempt to retrieve a list of stock items didn’t look right.

[HttpGet("AdoProfiledOtt")]

public async Task<ActionResult<IEnumerable<Model.StockItemListDtoV1>>> GetAdoProfiledOtt()

{

List<Model.StockItemListDtoV1> response = new List<Model.StockItemListDtoV1>();

using (SqlConnection connection = new SqlConnection(configuration.GetConnectionString("default")))

{

using (ProfiledDbConnection profiledDbConnection = new ProfiledDbConnection(connection, MiniProfiler.Current))

{

await profiledDbConnection.OpenAsync();

using (SqlCommand command = new SqlCommand(sqlCommandText, connection))

{

using (ProfiledDbCommand profiledDbCommand = new ProfiledDbCommand(command, profiledDbConnection, MiniProfiler.Current))

{

using (SqlDataReader reader = await command.ExecuteReaderAsync())

{

using (ProfiledDbDataReader profiledDbDataReader = new ProfiledDbDataReader(reader, MiniProfiler.Current))

{

var rowParser = profiledDbDataReader.GetRowParser<Model.StockItemListDtoV1>();

while (await profiledDbDataReader.ReadAsync())

{

response.Add(rowParser(profiledDbDataReader));

}

}

}

}

}

await profiledDbConnection.CloseAsync();

}

}

}

Often Dapper has functionality like closing the connection if it needed to open it to reduce the amount of code required and this code looked verbose.

[HttpGet]

public async Task<ActionResult<IEnumerable<Model.StockItemListDtoV1>>> Get()

{

IEnumerable<Model.StockItemListDtoV1> response = null;

using (SqlConnection db = new SqlConnection(this.connectionString))

{

response = await db.QueryWithRetryAsync<Model.StockItemListDtoV1>(sql: @"SELECT [StockItemID] as ""ID"", [StockItemName] as ""Name"", [RecommendedRetailPrice], [TaxRate] FROM [Warehouse].[StockItems]", commandType: CommandType.Text);

}

return this.Ok(response);

}

It seemed a bit odd that so many “usings” were needed so I had a look at ProfiledDBConnection.cs

/// <summary>

/// Initializes a new instance of the <see cref="ProfiledDbConnection"/> class.

/// Returns a new <see cref="ProfiledDbConnection"/> that wraps <paramref name="connection"/>,

/// providing query execution profiling. If profiler is null, no profiling will occur.

/// </summary>

/// <param name="connection"><c>Your provider-specific flavour of connection, e.g. SqlConnection, OracleConnection</c></param>

/// <param name="profiler">The currently started <see cref="MiniProfiler"/> or null.</param>

/// <exception cref="ArgumentNullException">Throws when <paramref name="connection"/> is <c>null</c>.</exception>

public ProfiledDbConnection(DbConnection connection, IDbProfiler? profiler)

{

_connection = connection ?? throw new ArgumentNullException(nameof(connection));

_connection.StateChange += StateChangeHandler;

if (profiler != null)

{

_profiler = profiler;

}

}

...

/// <summary>

/// Dispose the underlying connection.

/// </summary>

/// <param name="disposing">false if preempted from a <c>finalizer</c></param>

protected override void Dispose(bool disposing)

{

if (disposing && _connection != null)

{

_connection.StateChange -= StateChangeHandler;

_connection.Dispose();

}

base.Dispose(disposing);

_connection = null!;

_profiler = null;

}

One less “using” required as ProfiledDbConnection “automagically” disposes the SqlConnection. It also seemed a bit odd that the SqlCommand had a “using” so I had a look at ProfiledDbCommand.cs

/// <summary>

/// Initializes a new instance of the <see cref="ProfiledDbCommand"/> class.

/// </summary>

/// <param name="command">The command.</param>

/// <param name="connection">The connection.</param>

/// <param name="profiler">The profiler.</param>

/// <exception cref="ArgumentNullException">Throws when <paramref name="command"/> is <c>null</c>.</exception>

public ProfiledDbCommand(DbCommand command, DbConnection? connection, IDbProfiler? profiler)

{

_command = command ?? throw new ArgumentNullException(nameof(command));

if (connection != null)

{

_connection = connection;

UnwrapAndAssignConnection(connection);

}

if (profiler != null)

{

_profiler = profiler;

}

}

...

/// <summary>

/// Releases all resources used by this command.

/// </summary>

/// <param name="disposing">false if this is being disposed in a <c>finalizer</c>.</param>

protected override void Dispose(bool disposing)

{

if (disposing && _command != null)

{

_command.Dispose();

}

_command = null!;

base.Dispose(disposing);

}

Another “using” not required as ProfiledDbCommand “automagically” disposes the SqlCommand as well. It also seemed a bit odd that the SqlDataReader had a using so I had a look at profileDbDataReader.cs

/// <summary>

/// Initializes a new instance of the <see cref="ProfiledDbDataReader"/> class (with <see cref="CommandBehavior.Default"/>).

/// </summary>

/// <param name="reader">The reader.</param>

/// <param name="profiler">The profiler.</param>

public ProfiledDbDataReader(DbDataReader reader, IDbProfiler profiler) : this(reader, CommandBehavior.Default, profiler) { }

/// <summary>

/// Initializes a new instance of the <see cref="ProfiledDbDataReader"/> class.

/// </summary>

/// <param name="reader">The reader.</param>

/// <param name="behavior">The behavior specified during command execution.</param>

/// <param name="profiler">The profiler.</param>

public ProfiledDbDataReader(DbDataReader reader, CommandBehavior behavior, IDbProfiler? profiler)

{

WrappedReader = reader;

Behavior = behavior;

_profiler = profiler;

}

...

/// <summary>

/// The <see cref="DbDataReader"/> that is being used.

/// </summary>

public DbDataReader WrappedReader { get; }

/// <inheritdoc cref="DbDataReader.Dispose(bool)"/>

protected override void Dispose(bool disposing)

{

// reader can be null when we're not profiling, but we've inherited from ProfiledDbCommand and are returning a

// an unwrapped reader from the base command

WrappedReader?.Dispose();

base.Dispose(disposing);

}

Another “using” not required as ProfiledDbDataReader “automagically” disposes the SqlDataReader. This was my final version of profiling the System.Data.SqlClient code to retrieve a list of stock items.

[HttpGet("AdoProfiled")]

public async Task<ActionResult<IEnumerable<Model.StockItemListDtoV1>>> GetProfiledAdo()

{

List<Model.StockItemListDtoV1> response = new List<Model.StockItemListDtoV1>();

using (ProfiledDbConnection profiledDbConnection = new ProfiledDbConnection((SqlConnection)dapperContext.ConnectionCreate(), MiniProfiler.Current))

{

await profiledDbConnection.OpenAsync();

using (ProfiledDbCommand profiledDbCommand = new ProfiledDbCommand(new SqlCommand(sqlCommandText), profiledDbConnection, MiniProfiler.Current))

{

DbDataReader reader = await profiledDbCommand.ExecuteReaderAsync();

using (ProfiledDbDataReader profiledDbDataReader = new ProfiledDbDataReader(reader, MiniProfiler.Current))

{

var rowParser = profiledDbDataReader.GetRowParser<Model.StockItemListDtoV1>();

while (await profiledDbDataReader.ReadAsync())

{

response.Add(rowParser(profiledDbDataReader));

}

}

}

}

return this.Ok(response);

}

The profileDbDataReader.cs implementation was “sparse” and when loading a longer list of stock items there were some ReadAsync calls which took a bit longer.

/// <summary>

/// The profiled database data reader.

/// </summary>

public class ProfiledDbDataReader : DbDataReader

{

private readonly IDbProfiler? _profiler;

/// <summary>

/// Initializes a new instance of the <see cref="ProfiledDbDataReader"/> class (with <see cref="CommandBehavior.Default"/>).

/// </summary>

/// <param name="reader">The reader.</param>

/// <param name="profiler">The profiler.</param>

public ProfiledDbDataReader(DbDataReader reader, IDbProfiler profiler) : this(reader, CommandBehavior.Default, profiler) { }

/// <summary>

/// Initializes a new instance of the <see cref="ProfiledDbDataReader"/> class.

/// </summary>

/// <param name="reader">The reader.</param>

/// <param name="behavior">The behavior specified during command execution.</param>

/// <param name="profiler">The profiler.</param>

public ProfiledDbDataReader(DbDataReader reader, CommandBehavior behavior, IDbProfiler? profiler)

{

WrappedReader = reader;

Behavior = behavior;

_profiler = profiler;

}

/// <summary>Gets the behavior specified during command execution.</summary>

public CommandBehavior Behavior { get; }

/// <inheritdoc cref="DbDataReader.Depth"/>

public override int Depth => WrappedReader.Depth;

/// <inheritdoc cref="DbDataReader.FieldCount"/>

public override int FieldCount => WrappedReader.FieldCount;

/// <inheritdoc cref="DbDataReader.HasRows"/>

public override bool HasRows => WrappedReader.HasRows;

/// <inheritdoc cref="DbDataReader.IsClosed"/>

public override bool IsClosed => WrappedReader.IsClosed;

/// <inheritdoc cref="DbDataReader.RecordsAffected"/>

public override int RecordsAffected => WrappedReader.RecordsAffected;

/// <summary>

/// The <see cref="DbDataReader"/> that is being used.

/// </summary>

public DbDataReader WrappedReader { get; }

/// <inheritdoc cref="DbDataReader.this[string]"/>

public override object this[string name] => WrappedReader[name];

/// <inheritdoc cref="DbDataReader.this[int]"/>

public override object this[int ordinal] => WrappedReader[ordinal];

...

/// <inheritdoc cref="DbDataReader.GetString(int)"/>

public override string GetString(int ordinal) => WrappedReader.GetString(ordinal);

/// <inheritdoc cref="DbDataReader.GetValue(int)"/>

public override object GetValue(int ordinal) => WrappedReader.GetValue(ordinal);

/// <inheritdoc cref="DbDataReader.GetValues(object[])"/>

public override int GetValues(object[] values) => WrappedReader.GetValues(values);

/// <inheritdoc cref="DbDataReader.IsDBNull(int)"/>

public override bool IsDBNull(int ordinal) => WrappedReader.IsDBNull(ordinal);

/// <inheritdoc cref="DbDataReader.IsDBNullAsync(int, CancellationToken)"/>

public override Task<bool> IsDBNullAsync(int ordinal, CancellationToken cancellationToken) => WrappedReader.IsDBNullAsync(ordinal, cancellationToken);

/// <inheritdoc cref="DbDataReader.NextResult()"/>

public override bool NextResult() => WrappedReader.NextResult();

/// <inheritdoc cref="DbDataReader.NextResultAsync(CancellationToken)"/>

public override Task<bool> NextResultAsync(CancellationToken cancellationToken) => WrappedReader.NextResultAsync(cancellationToken);

/// <inheritdoc cref="DbDataReader.Read()"/>

public override bool Read() => WrappedReader.Read();

/// <inheritdoc cref="DbDataReader.ReadAsync(CancellationToken)"/>

public override Task<bool> ReadAsync(CancellationToken cancellationToken) => WrappedReader.ReadAsync(cancellationToken);

/// <inheritdoc cref="DbDataReader.Close()"/>

public override void Close()

{

// reader can be null when we're not profiling, but we've inherited from ProfiledDbCommand and are returning a

// an unwrapped reader from the base command

WrappedReader?.Close();

_profiler?.ReaderFinish(this);

}

/// <inheritdoc cref="DbDataReader.GetSchemaTable()"/>

public override DataTable? GetSchemaTable() => WrappedReader.GetSchemaTable();

/// <inheritdoc cref="DbDataReader.Dispose(bool)"/>

protected override void Dispose(bool disposing)

{

// reader can be null when we're not profiling, but we've inherited from ProfiledDbCommand and are returning a

// an unwrapped reader from the base command

WrappedReader?.Dispose();

base.Dispose(disposing);

}

}

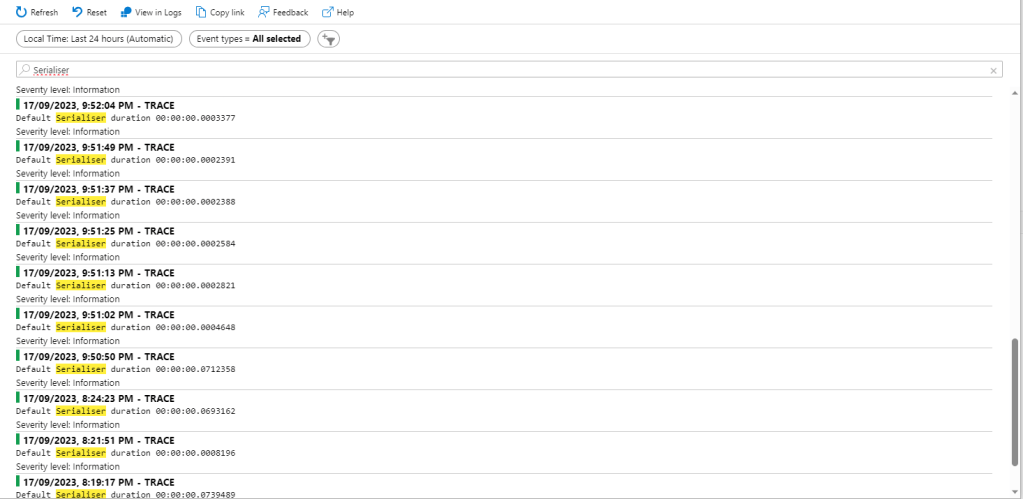

In the [HttpGet(“DapperProfiledQueryMultipleStep”)] method I wrapped ReadAsync and could see in the profiling that every so often a call did take significantly longer.

using (MiniProfiler.Current.Step("invoiceSummaryLine.ReadAsync"))

{

response.InvoiceLines = await invoiceSummary.ReadAsync<Model.InvoiceLineSummaryListDtoV1>();

}

I did consider modifying profileDbDataReader.cs to add some instrumentation to the Read… and Get… methods but, the authors of miniprofiler are way way smarter than me so there must be a reason why they didn’t.