The Myriota Azure IoT Hub Cloud Identity Translation Gateway downlink message handler was getting a bit “chunky”, so I started by stripping the code back to the absolute bare minimum that would “work”.

public async Task AzureIoTHubMessageHandler(Message message, object userContext)

{

Models.DeviceConnectionContext context = (Models.DeviceConnectionContext)userContext;

_logger.LogInformation("Downlink- IoT Hub TerminalId:{terminalId} LockToken:{LockToken}", context.TerminalId, message.LockToken);

try

{

await context.DeviceClient.CompleteAsync(message);

}

catch (Exception ex)

{

await context.DeviceClient.RejectAsync(message);

_logger.LogError(ex, "Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} failed", context.TerminalId, message.LockToken );

}

}

The code was then extended so it worked for “sunny day” scenarios: the payload formatter was successfully retrieved from the configured Azure Storage Blob, CS-Script successfully compiled the payload formatter, the message payload was valid text, the message text was valid JavaScript Object Notation (JSON), the JSON was successfully processed by the compiled payload formatter, and finally the payload was accepted by the Myriota Cloud API.

public async Task AzureIoTHubMessageHandler(Message message, object userContext)

{

Models.DeviceConnectionContext context = (Models.DeviceConnectionContext)userContext;

_logger.LogInformation("Downlink- IoT Hub TerminalId:{terminalId} LockToken:{LockToken}", context.TerminalId, message.LockToken);

string payloadFormatter;

// Use default formatter and replace with message specific formatter if configured.

if (!message.Properties.TryGetValue(Constants.IoTHubDownlinkPayloadFormatterProperty, out payloadFormatter) || string.IsNullOrEmpty(payloadFormatter))

{

payloadFormatter = context.PayloadFormatterDownlink;

}

_logger.LogInformation("Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} Payload formatter:{payloadFormatter} ", context.TerminalId, message.LockToken, payloadFormatter);

try

{

IFormatterDownlink payloadFormatterDownlink = await _payloadFormatterCache.DownlinkGetAsync(payloadFormatter);

byte[] messageBytes = message.GetBytes();

string messageText = Encoding.UTF8.GetString(messageBytes);

JObject messageJson = JObject.Parse(messageText);

byte[] payloadBytes = payloadFormatterDownlink.Evaluate(message.Properties, context.TerminalId, messageJson, messageBytes);

string messageId = await _myriotaModuleAPI.SendAsync(context.TerminalId, payloadBytes);

_logger.LogInformation("Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} MessageID:{messageId} sent", context.TerminalId, message.LockToken, messageId);

await context.DeviceClient.CompleteAsync(message);

}

catch (Exception ex)

{

await context.DeviceClient.RejectAsync(message);

_logger.LogError(ex, "Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} failed", context.TerminalId, message.LockToken);

}

}

The code was then extended to handle message payloads which were problematic but not “failures”.

public async Task AzureIoTHubMessageHandler(Message message, object userContext)

{

Models.DeviceConnectionContext context = (Models.DeviceConnectionContext)userContext;

_logger.LogInformation("Downlink- IoT Hub TerminalId:{terminalId} LockToken:{LockToken}", context.TerminalId, message.LockToken);

string payloadFormatter;

// Use default formatter and replace with message specific formatter if configured.

if (!message.Properties.TryGetValue(Constants.IoTHubDownlinkPayloadFormatterProperty, out payloadFormatter) || string.IsNullOrEmpty(payloadFormatter))

{

payloadFormatter = context.PayloadFormatterDownlink;

}

_logger.LogInformation("Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} Payload formatter:{payloadFormatter} ", context.TerminalId, message.LockToken, payloadFormatter);

try

{

// If this fails payload broken

byte[] messageBytes = message.GetBytes();

string messageText = string.Empty;

JObject messageJson = null;

// These will fail for some messages, gets bytes only

try

{

messageText = Encoding.UTF8.GetString(messageBytes);

messageJson = JObject.Parse(messageText);

}

catch (ArgumentException aex)

{

_logger.LogInformation("Downlink-DeviceID:{DeviceId} LockToken:{LockToken} messageBytes:{2} not valid Text", context.TerminalId, message.LockToken, BitConverter.ToString(messageBytes));

}

catch( JsonReaderException jex)

{

_logger.LogInformation("Downlink-DeviceID:{DeviceId} LockToken:{LockToken} messageText:{messageText} not valid json", context.TerminalId, message.LockToken, messageText);

}

IFormatterDownlink payloadFormatterDownlink = await _payloadFormatterCache.DownlinkGetAsync(payloadFormatter);

byte[] payloadBytes = payloadFormatterDownlink.Evaluate(message.Properties, context.TerminalId, messageJson, messageBytes);

string messageId = await _myriotaModuleAPI.SendAsync(context.TerminalId, payloadBytes);

await context.DeviceClient.CompleteAsync(message);

_logger.LogInformation("Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} MessageID:{messageId} sent", context.TerminalId, message.LockToken, messageId);

}

catch (Exception ex)

{

await context.DeviceClient.RejectAsync(message);

_logger.LogError(ex, "Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} failed", context.TerminalId, message.LockToken);

}

finally

{

message.Dispose();

}

}
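One thing worth checking about the text decoding step: as far as I know, `Encoding.UTF8.GetString` replaces invalid byte sequences with U+FFFD by default rather than throwing, so the `ArgumentException` handler above may not fire for malformed text. Below is a minimal sketch of a strict decoder, assuming that behaviour is wanted. `StrictText` and `TryDecode` are hypothetical names and are not part of the gateway code.

```csharp
using System;
using System.Text;

// Hypothetical helper (not in the gateway): strict UTF-8 decoding.
public static class StrictText
{
    // throwOnInvalidBytes: true makes GetString throw DecoderFallbackException
    // (which derives from ArgumentException) instead of substituting U+FFFD.
    private static readonly UTF8Encoding StrictUtf8 =
        new UTF8Encoding(encoderShouldEmitUTF8Identifier: false, throwOnInvalidBytes: true);

    // Returns true and the decoded text when the bytes are valid UTF-8,
    // otherwise returns false and an empty string.
    public static bool TryDecode(byte[] bytes, out string text)
    {
        try
        {
            text = StrictUtf8.GetString(bytes);
            return true;
        }
        catch (DecoderFallbackException)
        {
            text = string.Empty;
            return false;
        }
    }
}
```

With something like this, a payload formatter would only get bytes (and no text or JSON) when the payload really isn't valid UTF-8.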

Finally, the code was modified to gracefully handle broken payloads returned by the payload formatter evaluation, some comments were added, and the unmanaged resources of the DeviceClient.Message were disposed.

public async Task AzureIoTHubMessageHandler(Message message, object userContext)

{

Models.DeviceConnectionContext context = (Models.DeviceConnectionContext)userContext;

_logger.LogInformation("Downlink- IoT Hub TerminalId:{terminalId} LockToken:{LockToken}", context.TerminalId, message.LockToken);

// Use default formatter and replace with message specific formatter if configured.

string payloadFormatter;

if (!message.Properties.TryGetValue(Constants.IoTHubDownlinkPayloadFormatterProperty, out payloadFormatter) || string.IsNullOrEmpty(payloadFormatter))

{

payloadFormatter = context.PayloadFormatterDownlink;

}

_logger.LogInformation("Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} Payload formatter:{payloadFormatter} ", context.TerminalId, message.LockToken, payloadFormatter);

try

{

// If this fails payload broken

byte[] messageBytes = message.GetBytes();

// This will fail for some messages, payload formatter gets bytes only

string messageText = string.Empty;

try

{

messageText = Encoding.UTF8.GetString(messageBytes);

}

catch (ArgumentException aex)

{

_logger.LogInformation("Downlink- IoT Hub TerminalID:{TerminalId} LockToken:{LockToken} messageBytes:{2} not valid Text", context.TerminalId, message.LockToken, BitConverter.ToString(messageBytes));

}

// This will fail for some messages, payload formatter gets bytes only

JObject? messageJson = null;

try

{

messageJson = JObject.Parse(messageText);

}

catch ( JsonReaderException jex)

{

_logger.LogInformation("Downlink- IoT Hub TerminalID:{TerminalId} LockToken:{LockToken} messageText:{messageText} not valid json", context.TerminalId, message.LockToken, messageText);

}

// This shouldn't fail, but it could for lots of different reasons, invalid path to blob, syntax error, interface broken etc.

IFormatterDownlink payloadFormatterDownlink = await _payloadFormatterCache.DownlinkGetAsync(payloadFormatter);

// This shouldn't fail, but it could for lots of different reasons, null references, divide by zero, out of range etc.

byte[] payloadBytes = payloadFormatterDownlink.Evaluate(message.Properties, context.TerminalId, messageJson, messageBytes);

// Validate payload before calling Myriota control message send API method

if (payloadBytes is null)

{

_logger.LogWarning("Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} payload formatter:{payloadFormatter} Evaluate returned null", context.TerminalId, message.LockToken, payloadFormatter);

await context.DeviceClient.RejectAsync(message);

return;

}

if ((payloadBytes.Length < Constants.DownlinkPayloadMinimumLength) || (payloadBytes.Length > Constants.DownlinkPayloadMaximumLength))

{

_logger.LogWarning("Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} payloadData length:{Length} invalid must be {DownlinkPayloadMinimumLength} to {DownlinkPayloadMaximumLength} bytes", context.TerminalId, message.LockToken, payloadBytes.Length, Constants.DownlinkPayloadMinimumLength, Constants.DownlinkPayloadMaximumLength);

await context.DeviceClient.RejectAsync(message);

return;

}

// This shouldn't fail, but it could for a few reasons, mainly connectivity, message queuing etc.

_logger.LogInformation("Downlink- IoT Hub TerminalID:{TerminalId} LockToken:{LockToken} PayloadData:{payloadData} Length:{Length} sending", context.TerminalId, message.LockToken, Convert.ToHexString(payloadBytes), payloadBytes.Length);

// Finally send the message using Myriota API

string messageId = await _myriotaModuleAPI.SendAsync(context.TerminalId, payloadBytes);

_logger.LogInformation("Downlink- IoT Hub TerminalID:{TerminalId} LockToken:{LockToken} MessageID:{messageId} sent", context.TerminalId, message.LockToken, messageId);

await context.DeviceClient.CompleteAsync(message);


}

catch (Exception ex)

{

await context.DeviceClient.RejectAsync(message);

_logger.LogError(ex, "Downlink- IoT Hub TerminalID:{terminalId} LockToken:{LockToken} failed", context.TerminalId, message.LockToken);

}

finally

{

// Mop up the unmanaged resources of message

message.Dispose();

}

}
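The two payload checks in the handler above (null, then length range) could also be pulled out into a small helper so they can be tested without an IoT Hub connection. This is only a sketch: `PayloadCheck` and `DownlinkPayloadValidator` are hypothetical names, and the limits are passed in as parameters because the actual values of `Constants.DownlinkPayloadMinimumLength` and `Constants.DownlinkPayloadMaximumLength` aren't shown here.

```csharp
#nullable enable
using System;

// Hypothetical result type for the checks done before calling the Myriota API.
public enum PayloadCheck
{
    Valid,
    Null,
    LengthOutOfRange
}

// Hypothetical helper mirroring the null and length checks in the handler.
public static class DownlinkPayloadValidator
{
    public static PayloadCheck Check(byte[]? payloadBytes, int minimumLength, int maximumLength)
    {
        // The formatter's Evaluate returned nothing to send.
        if (payloadBytes is null)
        {
            return PayloadCheck.Null;
        }

        // Payload is shorter or longer than the allowed range.
        if (payloadBytes.Length < minimumLength || payloadBytes.Length > maximumLength)
        {
            return PayloadCheck.LengthOutOfRange;
        }

        return PayloadCheck.Valid;
    }
}
```

The handler would then reject the message for anything other than `PayloadCheck.Valid`, logging which check failed.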

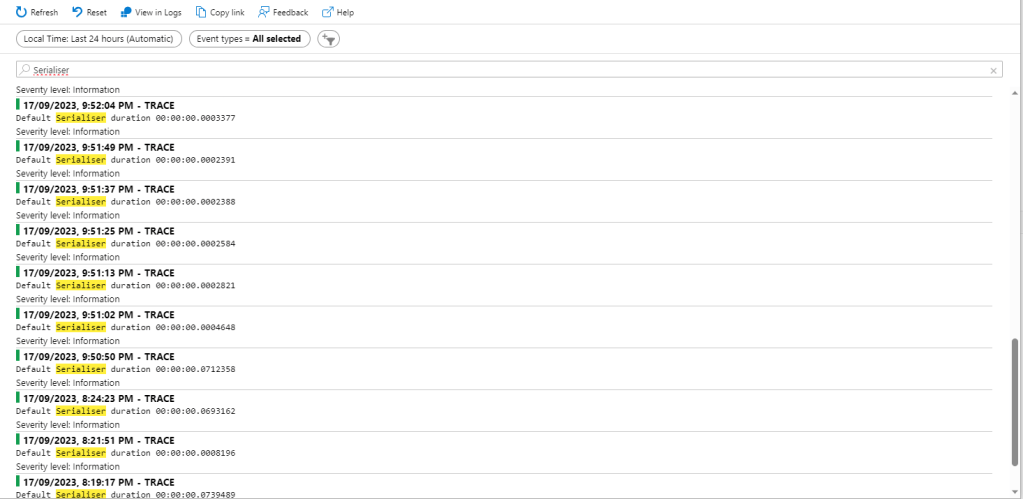

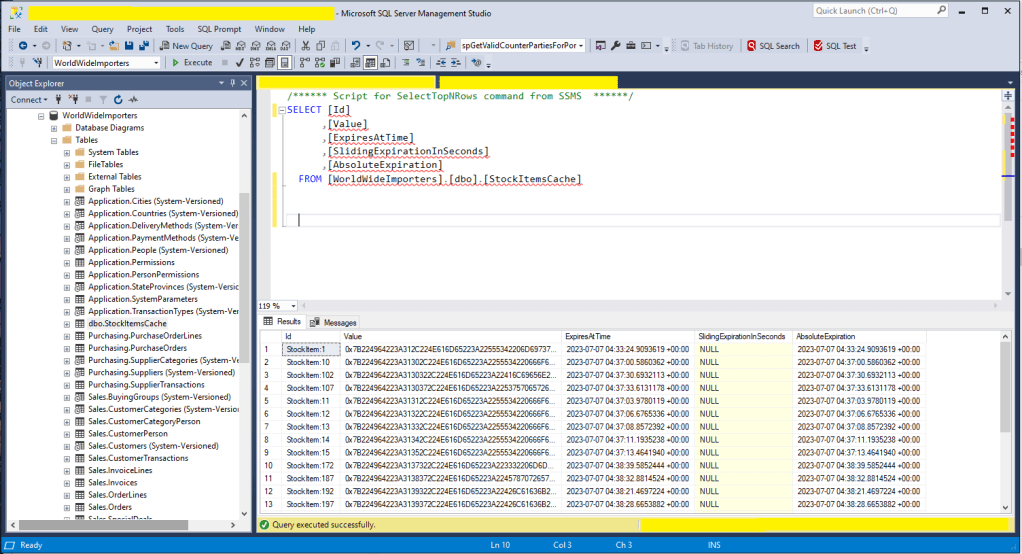

As the code was being extended, I tested different failures to make sure the Application Insights logging messages were useful. The first failure mode tested was a broken Azure Storage Blob path or a missing blob.

Then a series of “broken” payload formatters were created to test CS-Script compile time failures.

// Broken interface implementation

using System;

using System.Collections.Generic;

using Newtonsoft.Json;

using Newtonsoft.Json.Linq;

public class FormatterDownlink : PayloadFormatter.IFormatterDownlink

{

public byte[] Evaluate(IDictionary<string, string> properties, string terminalId, byte[] payloadBytes)

{

return payloadBytes;

}

}

// Broken syntax

using System;

using System.Collections.Generic;

using Newtonsoft.Json;

using Newtonsoft.Json.Linq;

public class FormatterDownlink : PayloadFormatter.IFormatterDownlink

{

public byte[] Evaluate(IDictionary<string, string> properties, string terminalId, JObject payloadJson, byte[] payloadBytes)

{

return payloadBytes

}

}

// Runtime error

using System;

using System.Collections.Generic;

using Newtonsoft.Json;

using Newtonsoft.Json.Linq;

public class FormatterDownlink : PayloadFormatter.IFormatterDownlink

{

public byte[] Evaluate(IDictionary<string, string> properties, string terminalId, JObject payloadJson, byte[] payloadBytes)

{

payloadBytes[20] = 0;

return payloadBytes;

}

}
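The “Runtime error” formatter above compiles fine but writes to index 20 without checking the array length, so it should only fail for shorter payloads. A stripped-down sketch of that behaviour, without the `IFormatterDownlink` interface or Newtonsoft.Json (`RuntimeErrorDemo` and `TryEvaluate` are hypothetical names used only for illustration):

```csharp
using System;

// Hypothetical stand-in for the "Runtime error" payload formatter.
public static class RuntimeErrorDemo
{
    // Same body as the broken formatter: no bounds check before the write.
    public static byte[] Evaluate(byte[] payloadBytes)
    {
        payloadBytes[20] = 0;
        return payloadBytes;
    }

    // Reports what happened, roughly as the handler's catch block would see it.
    public static string TryEvaluate(byte[] payloadBytes)
    {
        try
        {
            Evaluate(payloadBytes);
            return "ok";
        }
        catch (IndexOutOfRangeException)
        {
            return "IndexOutOfRangeException";
        }
    }
}
```

In the gateway, an exception like this would be caught by the handler's `catch (Exception ex)` block, so the message is rejected and the error is logged.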

Then an invalid payload was sent to the Myriota Cloud API send control message method.

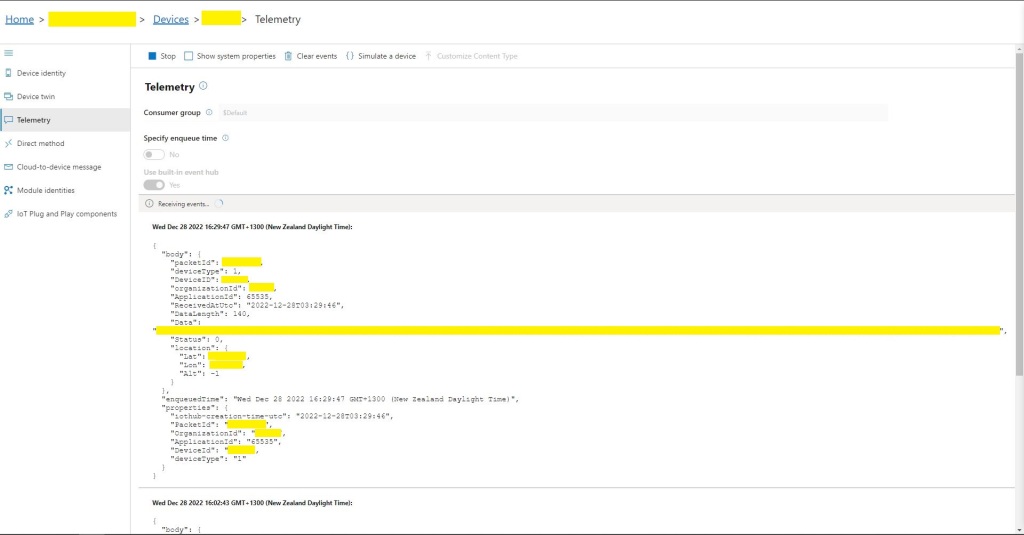

The final test was sending a downlink message which was valid JSON, contained the correct information for the specified payload formatter and was successfully processed by the Myriota Cloud API.

After a couple of hours, I think the downlink message handler implementation was significantly improved.