The Myriota Azure IoT Hub Cloud Identity Translation Gateway payload formatters use compiled C# code to convert uplink packet payloads to JSON and downlink payloads to byte arrays. While trying out different formatters I had “compile” and “evaluation” errors which would have been a lot easier to debug if there had been more diagnostic information in the Azure Application Insights logging.

    namespace PayloadFormatter // Additional namespace to shorten interface names when used in formatter code
    {
        using System; // DateTime
        using System.Collections.Generic;

        using Newtonsoft.Json.Linq;

        public interface IFormatterUplink
        {
            public JObject Evaluate(IDictionary<string, string> properties, string terminalId, DateTime timestamp, byte[] payloadBytes);
        }

        public interface IFormatterDownlink
        {
            public byte[] Evaluate(IDictionary<string, string> properties, string terminalId, JObject? payloadJson, byte[] payloadBytes);
        }
    }

An uplink payload formatter is loaded from Azure Storage Blob, compiled with Oleg Shilo’s CS-Script then cached in memory with Alastair Crabtree’s LazyCache.

    // Get the payload formatter from Azure Storage container, compile, and then cache binary.
    IFormatterUplink formatterUplink;

    try
    {
        formatterUplink = await _payloadFormatterCache.UplinkGetAsync(context.PayloadFormatterUplink, cancellationToken);
    }
    catch (Azure.RequestFailedException aex)
    {
        // Blob missing or storage request failed
        _logger.LogError(aex, "Uplink- PayloadID:{id} formatter:{formatter} load failed", payload.Id, context.PayloadFormatterUplink);

        return payload;
    }
    catch (NullReferenceException nex)
    {
        _logger.LogError(nex, "Uplink- PayloadID:{id} formatter:{formatter} compilation failed missing interface", payload.Id, context.PayloadFormatterUplink);

        return payload;
    }
    catch (CSScriptLib.CompilerException cex)
    {
        _logger.LogError(cex, "Uplink- PayloadID:{id} formatter:{formatter} compiler failed", payload.Id, context.PayloadFormatterUplink);

        return payload;
    }
    catch (Exception ex)
    {
        // Anything not handled by the more specific handlers above
        _logger.LogError(ex, "Uplink- PayloadID:{id} formatter:{formatter} compilation failed", payload.Id, context.PayloadFormatterUplink);

        return payload;
    }

If the Azure Storage blob is missing or the payload formatter code is incorrect, an exception is thrown. I added specialised exception handlers for Azure.RequestFailedException, NullReferenceException and CSScriptLib.CompilerException to add more detail to the Azure Application Insights logging.
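The body of `UplinkGetAsync` isn't shown in this post. As a rough sketch of the load, compile, then cache flow, the snippet below uses only BCL types as stand-ins: a `ConcurrentDictionary` of `Lazy<Task<T>>` plays the role of LazyCache, and the hypothetical `DownloadAsync` and `Compile` local functions play the roles of the Azure Storage read and the CS-Script compilation. None of these names come from the gateway code.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Counts how often the (simulated) blob download runs.
int downloads = 0;

// Cache of "compiled" formatters keyed by blob name. Only the Lazy stored in the
// dictionary is ever evaluated, so download + compile run at most once per key.
var cache = new ConcurrentDictionary<string, Lazy<Task<Func<byte[], string>>>>();

// Hypothetical stand-in for reading the formatter source from a storage container.
Task<string> DownloadAsync(string blobName)
{
    downloads++;
    return Task.FromResult($"// source of {blobName}");
}

// Hypothetical stand-in for compilation: ignores the source and returns a
// delegate that hex-encodes the payload.
Func<byte[], string> Compile(string source) => bytes => Convert.ToHexString(bytes);

Task<Func<byte[], string>> GetFormatterAsync(string blobName) =>
    cache.GetOrAdd(blobName, name => new Lazy<Task<Func<byte[], string>>>(
        async () => Compile(await DownloadAsync(name)))).Value;

var first = await GetFormatterAsync("tracker.cs");
var second = await GetFormatterAsync("tracker.cs");

Console.WriteLine(first(new byte[] { 0x01, 0xAB })); // 01AB
Console.WriteLine(downloads);                         // 1
```

In this sketch a download or compile failure surfaces when the returned task is awaited, which is where the `catch` blocks above would see it. The faulted task also stays in the dictionary, so a real cache would need to evict it before a retry.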

    // Process the payload with configured formatter
    Dictionary<string, string> properties = new Dictionary<string, string>();

    JObject telemetryEvent;

    try
    {
        telemetryEvent = formatterUplink.Evaluate(properties, packet.TerminalId, packet.Timestamp, payloadBytes);
    }
    catch (Exception ex)
    {
        _logger.LogError(ex, "Uplink- PayloadId:{id} TerminalId:{terminalId} Value:{value} Bytes:{bytes} payload formatter evaluate failed", payload.Id, packet.TerminalId, packet.Value, Convert.ToHexString(payloadBytes));

        return payload;
    }

    if (telemetryEvent is null)
    {
        _logger.LogError("Uplink- PayloadId:{id} TerminalId:{terminalId} Value:{value} Bytes:{bytes} payload formatter evaluate failed returned null", payload.Id, packet.TerminalId, packet.Value, Convert.ToHexString(payloadBytes));

        return payload;
    }

The Evaluate method can throw many different types of exception, so in the initial version only the base Exception type is caught and logged.

    using System;
    using System.Collections.Generic;

    using Newtonsoft.Json;
    using Newtonsoft.Json.Linq;

    public class FormatterUplink : PayloadFormatter.IFormatterUplink
    {
        public JObject Evaluate(IDictionary<string, string> properties, string terminalId, DateTime timestamp, byte[] payloadBytes)
        {
            JObject telemetryEvent = new JObject();

            telemetryEvent.Add("Bytes", BitConverter.ToString(payloadBytes));

            // Adding a second property with the same name makes JObject.Add throw an ArgumentException
            telemetryEvent.Add("Bytes", BitConverter.ToString(payloadBytes));

            return telemetryEvent;
        }
    }

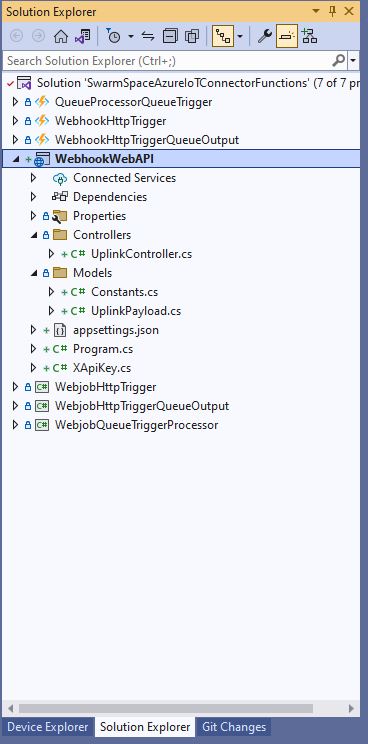
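The formatter above fails in one specific way, but a formatter that decodes real payloads can fail in several others depending on the bytes it is given. A minimal sketch, assuming a hypothetical three-byte payload layout (`Decode` and `TryDecode` are illustrative names, not part of the gateway), shows why a single `catch (Exception ex)` that logs the exception alongside the payload bytes is a reasonable starting point:

```csharp
using System;

// Hypothetical decoder: bytes 0-1 are a little-endian reading, byte 2 is a
// divisor, and the scaled result is expected to fit in a single byte.
byte Decode(byte[] payload)
{
    int raw = payload[0] | (payload[1] << 8);
    int scale = payload[2];
    return checked((byte)(raw / scale));
}

// Mirrors the generic handler: whatever Decode throws is caught as Exception
// and reduced to its type name, which is what would end up in the log.
string TryDecode(byte[] payload)
{
    try
    {
        return Decode(payload).ToString();
    }
    catch (Exception ex)
    {
        return ex.GetType().Name;
    }
}

Console.WriteLine(TryDecode(new byte[] { 0x10, 0x00, 0x02 })); // 8
Console.WriteLine(TryDecode(new byte[] { 0x10, 0x00 }));       // IndexOutOfRangeException
Console.WriteLine(TryDecode(new byte[] { 0x10, 0x00, 0x00 })); // DivideByZeroException
Console.WriteLine(TryDecode(new byte[] { 0x10, 0x03, 0x01 })); // OverflowException
```

Each failure depends on the payload contents rather than on the formatter source, which is why logging the hex-encoded bytes with the exception makes these cases reproducible.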

There are a number of test uplink/downlink payload formatters (a collection which should grow over time) for exercising different compile and execution failures.

I used Azure Storage Explorer to upload my test payload formatters to the uplink/downlink Azure Storage containers.