After figuring out that calling an Azure HTTP Trigger function to load the cache wasn’t going to work reliably, I revisited the architecture one last time and significantly refactored the SwarmSpaceAzureIoTConnector project.

The application now has a StartUpService which loads the Azure DeviceClient cache (Lazy Cache) in the background as the application starts up. If an uplink message is received from a Swarm device before the StartUpService has loaded that device, the DeviceClient is cached when the message is processed, so the StartUpService does not establish another connection to the Azure IoT Hub.

...

    using Microsoft.Azure.Functions.Extensions.DependencyInjection;

    [assembly: FunctionsStartup(typeof(devMobile.IoT.SwarmSpaceAzureIoTConnector.Connector.StartUpService))]
    namespace devMobile.IoT.SwarmSpaceAzureIoTConnector.Connector
    {
        ...
        public class StartUpService : BackgroundService
        {
            private readonly ILogger<StartUpService> _logger;
            private readonly ISwarmSpaceBumblebeeHive _swarmSpaceBumblebeeHive;
            private readonly Models.ApplicationSettings _applicationSettings;
            private readonly IAzureDeviceClientCache _azureDeviceClientCache;

            public StartUpService(ILogger<StartUpService> logger, IAzureDeviceClientCache azureDeviceClientCache, ISwarmSpaceBumblebeeHive swarmSpaceBumblebeeHive, IOptions<Models.ApplicationSettings> applicationSettings)//, IOptions<Models.AzureIoTSettings> azureIoTSettings)
            {
                _logger = logger;
                _azureDeviceClientCache = azureDeviceClientCache;
                _swarmSpaceBumblebeeHive = swarmSpaceBumblebeeHive;
                _applicationSettings = applicationSettings.Value;
            }

            protected override async Task ExecuteAsync(CancellationToken cancellationToken)
            {
                await Task.Yield();

                _logger.LogInformation("StartUpService.ExecuteAsync start");

                try
                {
                    _logger.LogInformation("BumblebeeHiveCacheRefresh start");

                    foreach (SwarmSpace.BumblebeeHiveClient.Device device in await _swarmSpaceBumblebeeHive.DeviceListAsync(cancellationToken))
                    {
                        _logger.LogInformation("BumblebeeHiveCacheRefresh DeviceId:{DeviceId} DeviceName:{DeviceName}", device.DeviceId, device.DeviceName);

                        Models.AzureIoTDeviceClientContext context = new Models.AzureIoTDeviceClientContext()
                        {
                            OrganisationId = _applicationSettings.OrganisationId,
                            DeviceType = (byte)device.DeviceType,
                            DeviceId = (uint)device.DeviceId,
                        };

                        await _azureDeviceClientCache.GetOrAddAsync(context.DeviceId, context);
                    }

                    _logger.LogInformation("BumblebeeHiveCacheRefresh finish");
                }
                catch (Exception ex)
                {
                    _logger.LogError(ex, "StartUpService.ExecuteAsync error");

                    throw;
                }

                _logger.LogInformation("StartUpService.ExecuteAsync finish");
            }
        }
    }
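The "load once, whoever gets there first" behaviour can be sketched with nothing but the base class library. This is a minimal sketch and not the connector's IAzureDeviceClientCache: the DeviceConnectionCache class and the connectAsync delegate are hypothetical names invented for illustration, and it assumes the cache is keyed by device id.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for a device client cache; not the project's actual code.
public sealed class DeviceConnectionCache<TConnection>
{
    private readonly ConcurrentDictionary<uint, Lazy<Task<TConnection>>> _connections = new();

    // Returns the cached connection for deviceId. Only the Lazy stored in the
    // dictionary is ever evaluated, so connectAsync runs once per device even if
    // the startup loader and an uplink handler ask for the same device at once.
    public Task<TConnection> GetOrAddAsync(uint deviceId, Func<uint, Task<TConnection>> connectAsync)
    {
        Lazy<Task<TConnection>> entry = _connections.GetOrAdd(
            deviceId,
            id => new Lazy<Task<TConnection>>(() => connectAsync(id), LazyThreadSafetyMode.ExecutionAndPublication));

        return entry.Value;
    }
}
```

One limitation of a sketch this small is that a failed connection task stays in the dictionary, so a real cache would also need eviction or retry.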

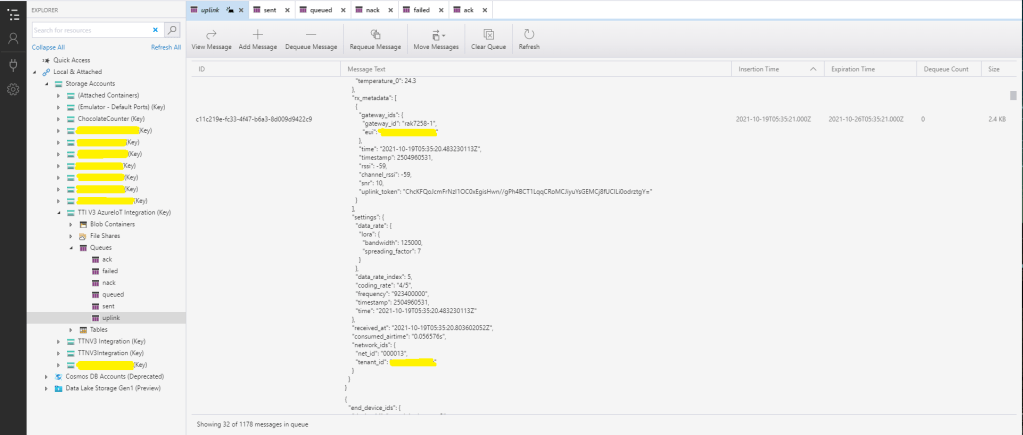

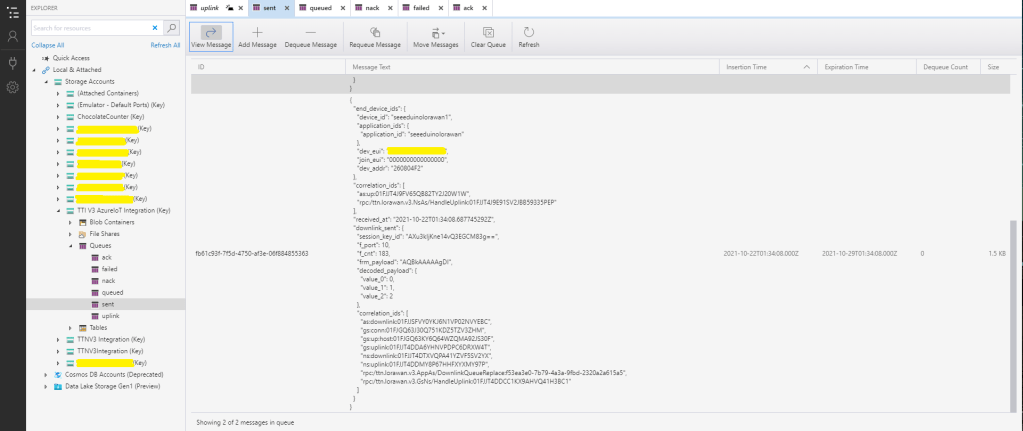

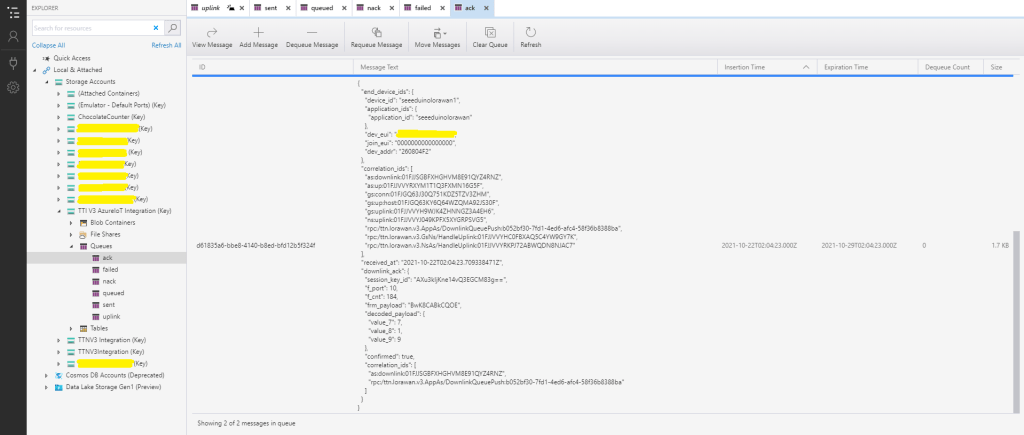

The uplink and downlink payload formatters are stored in Azure Blob Storage. They are compiled (CS-Script) as they are loaded, then cached (Lazy Cache).

    private async Task<IFormatterDownlink> DownlinkLoadAsync(int userApplicationId)
    {
        // Look for an application-specific formatter first
        BlobClient blobClient = new BlobClient(_payloadFormatterConnectionString, _applicationSettings.PayloadFormattersDownlinkContainer, $"{userApplicationId}.cs");

        if (!await blobClient.ExistsAsync())
        {
            _logger.LogInformation("PayloadFormatterDownlink- UserApplicationId:{UserApplicationId} Container:{Container} not found using default:{Default}", userApplicationId, _applicationSettings.PayloadFormattersDownlinkContainer, _applicationSettings.PayloadFormatterDownlinkBlobDefault);

            // Fall back to the default downlink formatter in the same container
            blobClient = new BlobClient(_payloadFormatterConnectionString, _applicationSettings.PayloadFormattersDownlinkContainer, _applicationSettings.PayloadFormatterDownlinkBlobDefault);
        }

        BlobDownloadResult downloadResult = await blobClient.DownloadContentAsync();

        // Compile the downloaded C# source with CS-Script
        return CSScript.Evaluator.LoadCode<PayloadFormatter.IFormatterDownlink>(downloadResult.Content.ToString());
    }

The uplink and downlink formatters can be edited in Visual Studio 2022 with syntax highlighting (currently they have to be manually uploaded).

The SwarmSpaceBumblebeeHive module no longer has public login or logout methods.

    public interface ISwarmSpaceBumblebeeHive
    {
        public Task<ICollection<Device>> DeviceListAsync(CancellationToken cancellationToken);

        public Task SendAsync(uint organisationId, uint deviceId, byte deviceType, ushort userApplicationId, byte[] payload);
    }

The DeviceListAsync and SendAsync methods now call the BumblebeeHive login method after a configurable period of inactivity.

    public async Task<ICollection<Device>> DeviceListAsync(CancellationToken cancellationToken)
    {
        if ((_TokenActivityAtUtC + _bumblebeeHiveSettings.TokenValidFor) < DateTime.UtcNow)
        {
            await Login();
        }

        using (HttpClient httpClient = _httpClientFactory.CreateClient())
        {
            Client client = new Client(httpClient);

            client.BaseUrl = _bumblebeeHiveSettings.BaseUrl;

            httpClient.DefaultRequestHeaders.Add("Authorization", $"bearer {_token}");

            return await client.GetDevicesAsync(null, null, null, null, null, null, null, null, null, cancellationToken);
        }
    }
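The inactivity check is easier to reason about (and test) when the clock is passed in rather than read from DateTime.UtcNow. A minimal sketch of that idea follows. The TokenActivityTracker class and its members are hypothetical names, not part of the connector, and it assumes the activity timestamp is updated after each successful login or call, which the listing above does not show.

```csharp
using System;

// Hypothetical helper mirroring the inactivity check in DeviceListAsync.
public sealed class TokenActivityTracker
{
    private readonly TimeSpan _tokenValidFor;

    // DateTime.MinValue forces a login on the very first call.
    private DateTime _tokenActivityAtUtc = DateTime.MinValue;

    public TokenActivityTracker(TimeSpan tokenValidFor)
    {
        _tokenValidFor = tokenValidFor;
    }

    // True when the last recorded activity is older than the validity window.
    public bool LoginRequired(DateTime utcNow) => (_tokenActivityAtUtc + _tokenValidFor) < utcNow;

    // Assumption: called after a successful login or API call.
    public void RecordActivity(DateTime utcNow) => _tokenActivityAtUtc = utcNow;
}
```

With a 30 minute window, a call 10 minutes after the last activity reuses the token, and a call 31 minutes after it logs in again.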

I’m looking at building a webby user interface where users can interactively list, create, edit, and delete formatters with syntax highlighting support, and execute a formatter with sample payloads.

This approach uses most of the existing building blocks, and that’s it: no more changes.