The Seeedstudio reComputer J3011 has two processors: an ARM64 CPU and an NVIDIA Jetson Orin 8G. To speed up inferencing on the NVIDIA Jetson Orin 8G with TensorRT, I built an Open Neural Network Exchange (ONNX) TensorRT Execution Provider.

The Open Neural Network Exchange (ONNX) model used was trained on the Roboflow Universe dataset by Ugur ozdemir, which has 23696 images. The initial version of the TensorRT integration used the builder.UseTensorrt method of the IYoloV8Builder interface.

...

    YoloV8Builder builder = new YoloV8Builder();

    builder.UseOnnxModel(_applicationSettings.ModelPath);

    if (_applicationSettings.UseTensorrt)
    {
        Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss.fff} Using TensorRT");

        builder.UseTensorrt(_applicationSettings.DeviceId);
    }

...

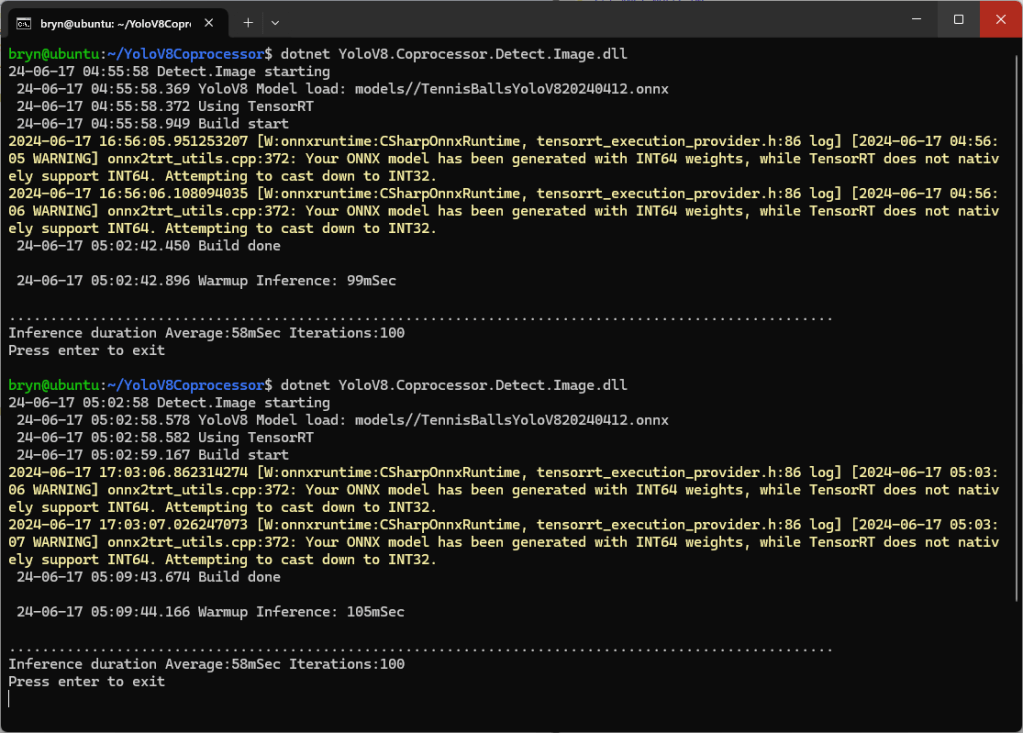

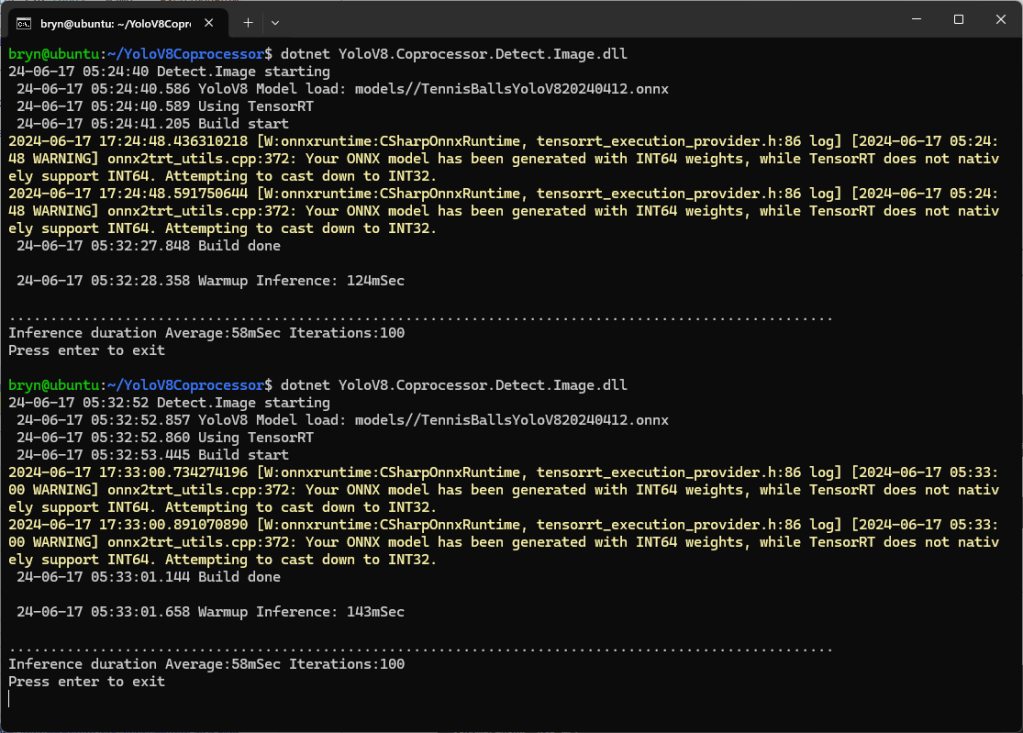

When the YoloV8.Coprocessor.Detect.Image application was configured to use the NVIDIA TensorRT Execution Provider, the average inference time was 58 ms, but it took roughly 7 minutes to build and optimise the engine each time the application was run.

The TensorRT Execution Provider has a number of configuration options, but the IYoloV8Builder interface had to be modified, with UseCuda, UseRocm, UseTensorrt and UseTvm overloads implemented, to allow additional configuration settings.

...

    public class YoloV8Builder : IYoloV8Builder
    {
        ...

        public IYoloV8Builder UseOnnxModel(BinarySelector model)
        {
            _model = model;

            return this;
        }

    #if GPURELEASE
        public IYoloV8Builder UseCuda(int deviceId) => WithSessionOptions(SessionOptions.MakeSessionOptionWithCudaProvider(deviceId));

        public IYoloV8Builder UseCuda(OrtCUDAProviderOptions options) => WithSessionOptions(SessionOptions.MakeSessionOptionWithCudaProvider(options));

        public IYoloV8Builder UseRocm(int deviceId) => WithSessionOptions(SessionOptions.MakeSessionOptionWithRocmProvider(deviceId));

        // Couldn't test this don't have suitable hardware
        public IYoloV8Builder UseRocm(OrtROCMProviderOptions options) => WithSessionOptions(SessionOptions.MakeSessionOptionWithRocmProvider(options));

        public IYoloV8Builder UseTensorrt(int deviceId) => WithSessionOptions(SessionOptions.MakeSessionOptionWithTensorrtProvider(deviceId));

        public IYoloV8Builder UseTensorrt(OrtTensorRTProviderOptions options) => WithSessionOptions(SessionOptions.MakeSessionOptionWithTensorrtProvider(options));

        // Couldn't test this don't have suitable hardware
        public IYoloV8Builder UseTvm(string settings = "") => WithSessionOptions(SessionOptions.MakeSessionOptionWithTvmProvider(settings));
    #endif

        ...
    }

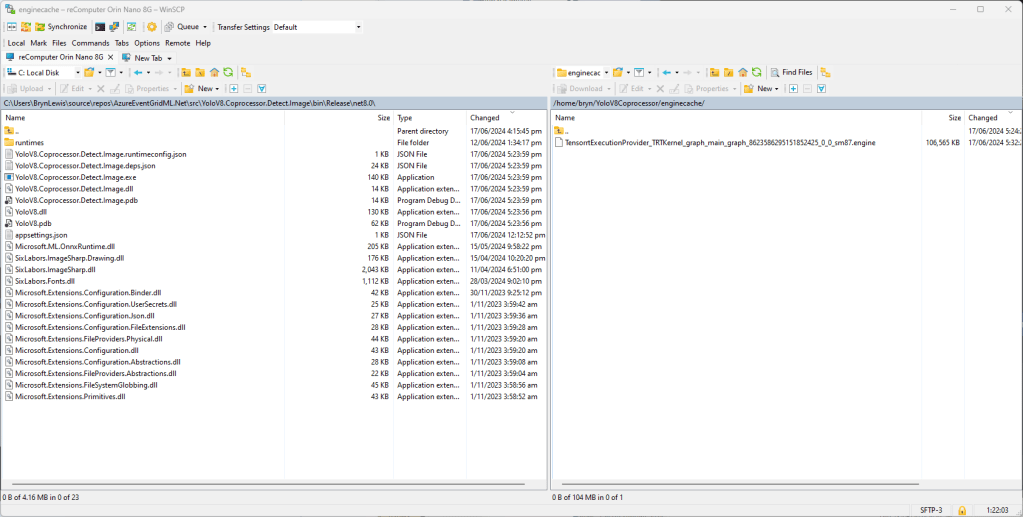

The trt_engine_cache_enable and trt_engine_cache_path TensorRT Execution Provider session options configure the engine to be cached when it is built for the first time, so that when a new inference session is created the engine can be loaded directly from disk.

...

    YoloV8Builder builder = new YoloV8Builder();

    builder.UseOnnxModel(_applicationSettings.ModelPath);

    if (_applicationSettings.UseTensorrt)
    {
        Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss.fff} Using TensorRT");

        OrtTensorRTProviderOptions tensorRToptions = new OrtTensorRTProviderOptions();

        Dictionary<string, string> optionKeyValuePairs = new Dictionary<string, string>();

        optionKeyValuePairs.Add("trt_engine_cache_enable", "1");
        optionKeyValuePairs.Add("trt_engine_cache_path", "enginecache/");

        tensorRToptions.UpdateOptions(optionKeyValuePairs);

        builder.UseTensorrt(tensorRToptions);
    }

...
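The TensorRT Execution Provider takes its settings as string key/value pairs, so the two cache settings can be gathered in a small helper. This is only a minimal sketch: `BuildTensorRtCacheOptions` is a hypothetical helper that is not part of the YoloV8 library or ONNX Runtime, and creating the cache folder up front is a precaution I have assumed rather than a confirmed requirement.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical helper: returns the engine cache settings as the string
// key/value pairs that OrtTensorRTProviderOptions.UpdateOptions accepts.
static Dictionary<string, string> BuildTensorRtCacheOptions(string cachePath)
{
    // Assumption: make sure the cache folder exists before the first engine build.
    Directory.CreateDirectory(cachePath);

    return new Dictionary<string, string>
    {
        ["trt_engine_cache_enable"] = "1",
        ["trt_engine_cache_path"] = cachePath,
    };
}

Dictionary<string, string> options = BuildTensorRtCacheOptions("enginecache/");

// Print the settings so they can be checked before they are applied.
foreach (KeyValuePair<string, string> option in options)
{
    Console.WriteLine($"{option.Key}={option.Value}");
}
```

The returned dictionary could be passed to tensorRToptions.UpdateOptions in place of the two Add calls.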

To validate that the engine loaded from the trt_engine_cache_path is usable for the current inference, an engine profile is also cached and loaded along with the engine.

If current input shapes are in the range of the engine profile, the loaded engine can be safely used. If input shapes are out of range, the profile will be updated and the engine will be recreated based on the new profile.
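The profile check can be pictured as a per-dimension range test. The following is a conceptual sketch only, not ONNX Runtime's implementation: the `FitsProfile` and `WidenProfile` helpers, the (Min, Max) tuple layout and the 1x3x640x640 input shape are all assumptions made for illustration.

```csharp
using System;

// True when every dimension of the input shape lies inside the cached range.
static bool FitsProfile((int Min, int Max)[] profile, int[] shape)
{
    if (profile.Length != shape.Length)
    {
        return false;
    }

    for (int i = 0; i < shape.Length; i++)
    {
        if (shape[i] < profile[i].Min || shape[i] > profile[i].Max)
        {
            return false;
        }
    }

    return true;
}

// Returns a copy of the profile widened just enough to include the new shape.
static (int Min, int Max)[] WidenProfile((int Min, int Max)[] profile, int[] shape)
{
    if (profile.Length != shape.Length)
    {
        throw new ArgumentException("Profile and shape must have the same rank.");
    }

    var widened = new (int Min, int Max)[profile.Length];

    for (int i = 0; i < shape.Length; i++)
    {
        widened[i] = (Math.Min(profile[i].Min, shape[i]), Math.Max(profile[i].Max, shape[i]));
    }

    return widened;
}

// Assumed profile cached for a fixed 1x3x640x640 input.
(int Min, int Max)[] profile = { (1, 1), (3, 3), (640, 640), (640, 640) };

int[] sameShape = { 1, 3, 640, 640 };
int[] largerShape = { 1, 3, 1280, 1280 };

Console.WriteLine($"Same shape fits: {FitsProfile(profile, sameShape)}");
Console.WriteLine($"Larger shape fits: {FitsProfile(profile, largerShape)}");

if (!FitsProfile(profile, largerShape))
{
    // This is the point where the real provider would rebuild the engine.
    profile = WidenProfile(profile, largerShape);
}
```

For a model that always receives the same input size, the shapes should stay inside the cached profile and the engine should not need rebuilding.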

When the YoloV8.Coprocessor.Detect.Image application was configured to use NVIDIA TensorRT and the engine was cached, the average inference time was 58 ms and the Build method took roughly 10 seconds to execute after the application had been run once.

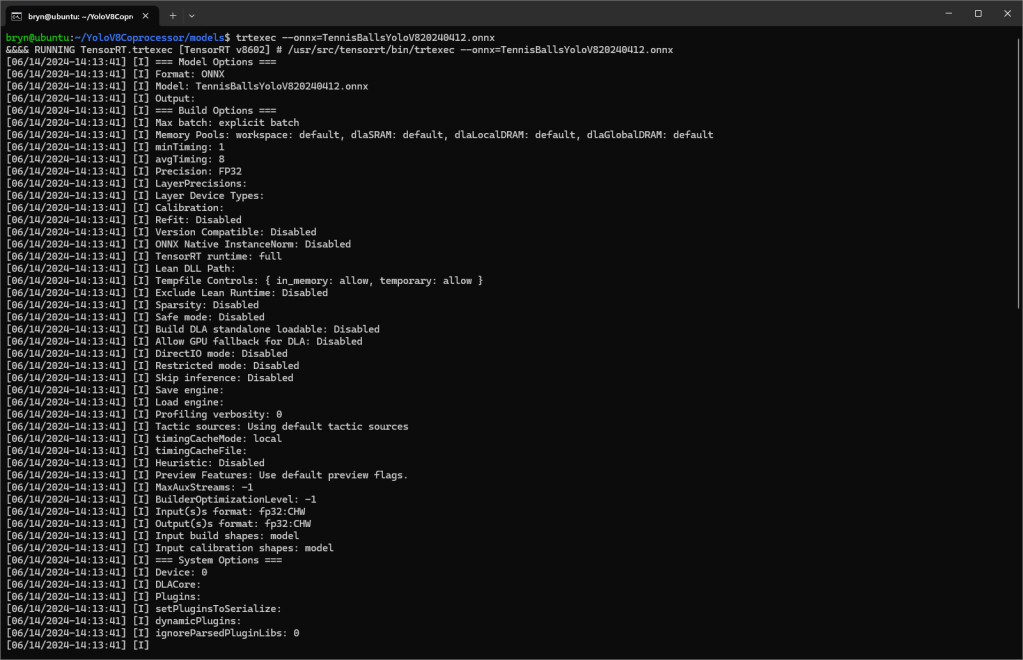
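Build and inference timings like these can be collected with a Stopwatch. This is a minimal sketch, not the application's own code: `TimeMilliseconds` is a hypothetical helper, and the Thread.Sleep calls are placeholders standing in for the real Build and detection calls.

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Threading;

// Hypothetical helper: runs an action once and returns the elapsed milliseconds.
static double TimeMilliseconds(Action action)
{
    Stopwatch stopwatch = Stopwatch.StartNew();
    action();
    stopwatch.Stop();

    return stopwatch.Elapsed.TotalMilliseconds;
}

// Placeholder for the one-off engine build or cache load.
double buildMs = TimeMilliseconds(() => Thread.Sleep(50));

// Placeholder for repeated inference calls, timed individually.
const int iterations = 5;
double[] inferenceMs = Enumerable.Range(0, iterations)
    .Select(_ => TimeMilliseconds(() => Thread.Sleep(10)))
    .ToArray();

Console.WriteLine($"Build: {buildMs:F0} ms, average inference: {inferenceMs.Average():F0} ms");
```

Timing the build separately from the inference loop keeps the one-off engine cost out of the average.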

The trtexec utility can “pre-generate” engines, but there doesn’t appear to be a way to use them with the TensorRT Execution Provider.