The Seeedstudio reComputer J3011 has two processors: an ARM64 CPU and an NVIDIA Jetson Orin 8G. To speed up inferencing on the NVIDIA Jetson Orin 8G with Compute Unified Device Architecture (CUDA), I built an Open Neural Network Exchange (ONNX) CUDA Execution Provider.

The ONNX model used was trained on a Roboflow Universe dataset by Ugur ozdemir, which has 23696 images.

    // load the app settings into configuration
    var configuration = new ConfigurationBuilder()
        .AddJsonFile("appsettings.json", false, true)
        .Build();

    _applicationSettings = configuration.GetSection("ApplicationSettings").Get<Model.ApplicationSettings>();

    Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss.fff} YoloV8 Model load: {_applicationSettings.ModelPath}");

    YoloV8Builder builder = new YoloV8Builder();

    builder.UseOnnxModel(_applicationSettings.ModelPath);

    if (_applicationSettings.UseCuda)
    {
        builder.UseCuda(_applicationSettings.DeviceId);
    }

    if (_applicationSettings.UseTensorrt)
    {
        builder.UseTensorrt(_applicationSettings.DeviceId);
    }

    /*
    builder.WithConfiguration(c =>
    {
    });
    */

    /*
    builder.WithSessionOptions(new Microsoft.ML.OnnxRuntime.SessionOptions()
    {
    });
    */

    using (var image = await SixLabors.ImageSharp.Image.LoadAsync<Rgba32>(_applicationSettings.ImageInputPath))
    using (var predictor = builder.Build())
    {
        var result = await predictor.DetectAsync(image);

        Console.WriteLine();
        Console.WriteLine($"Speed: {result.Speed}");
        Console.WriteLine();

        foreach (var prediction in result.Boxes)
        {
            Console.WriteLine($" Class {prediction.Class} {(prediction.Confidence * 100.0):f1}% X:{prediction.Bounds.X} Y:{prediction.Bounds.Y} Width:{prediction.Bounds.Width} Height:{prediction.Bounds.Height}");
        }

        Console.WriteLine();
        Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss.fff} Plot and save : {_applicationSettings.ImageOutputPath}");

        using (var imageOutput = await result.PlotImageAsync(image))
        {
            await imageOutput.SaveAsJpegAsync(_applicationSettings.ImageOutputPath);
        }
    }
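The code above reads its settings from the "ApplicationSettings" section of appsettings.json. A minimal sketch of what that file might look like is shown here. The property names are taken from the code, but every value (the file paths, the flags, and the device id of 0) is a hypothetical placeholder rather than the configuration actually used for these tests.

```json
{
  "ApplicationSettings": {
    "ModelPath": "path/to/model.onnx",
    "ImageInputPath": "path/to/input.jpg",
    "ImageOutputPath": "path/to/output.jpg",
    "UseCuda": true,
    "UseTensorrt": false,
    "DeviceId": 0
  }
}
```

With a file along these lines, switching between CPU and CUDA inferencing should only require changing the UseCuda flag, because the code calls builder.UseCuda only when that setting is true.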

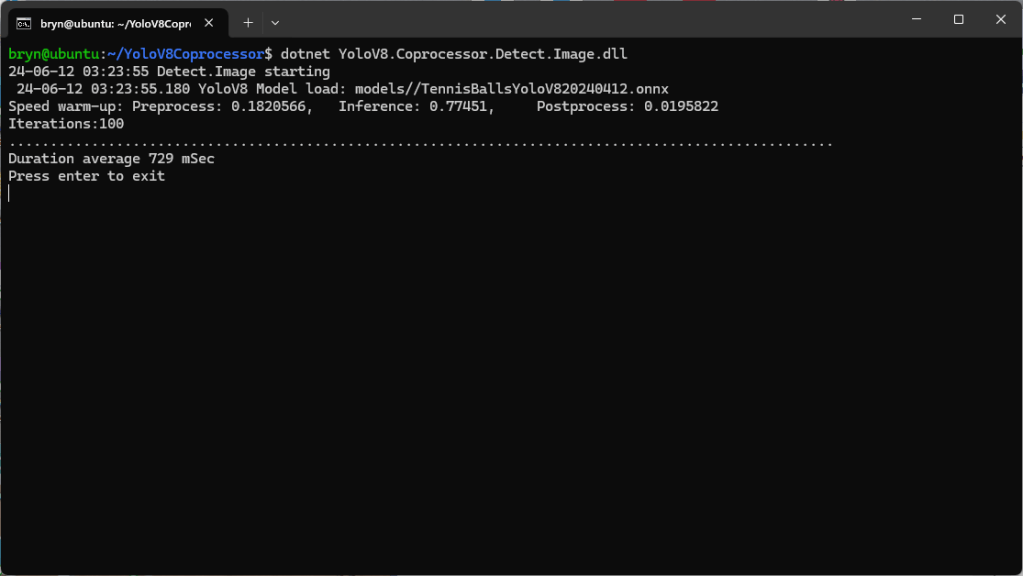

When the YoloV8.Coprocessor.Detect.Image application was configured to run on the ARM64 CPU, the average inference time was 729 mSec.

The first time I ran the YoloV8.Coprocessor.Detect.Image application configured to use CUDA for inferencing, it failed badly.

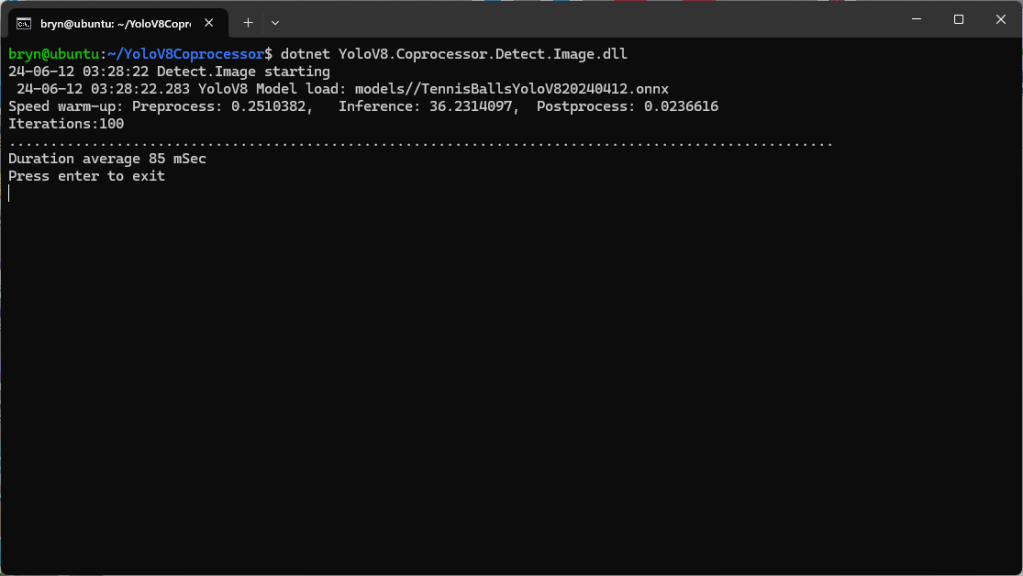

Once the YoloV8.Coprocessor.Detect.Image application was correctly configured to use CUDA, the average inferencing time was 85 mSec, roughly 8.6 times faster than the 729 mSec measured on the ARM64 CPU.

It took a couple of weeks to get the YoloV8.Coprocessor.Detect.Image application inferencing on the NVIDIA Jetson Orin 8G coprocessor, and this will be covered in detail in another post.