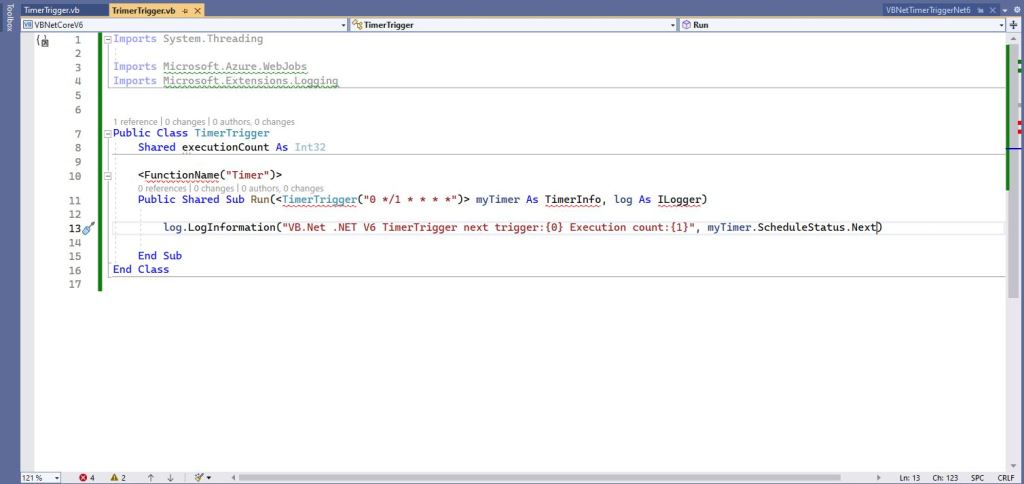

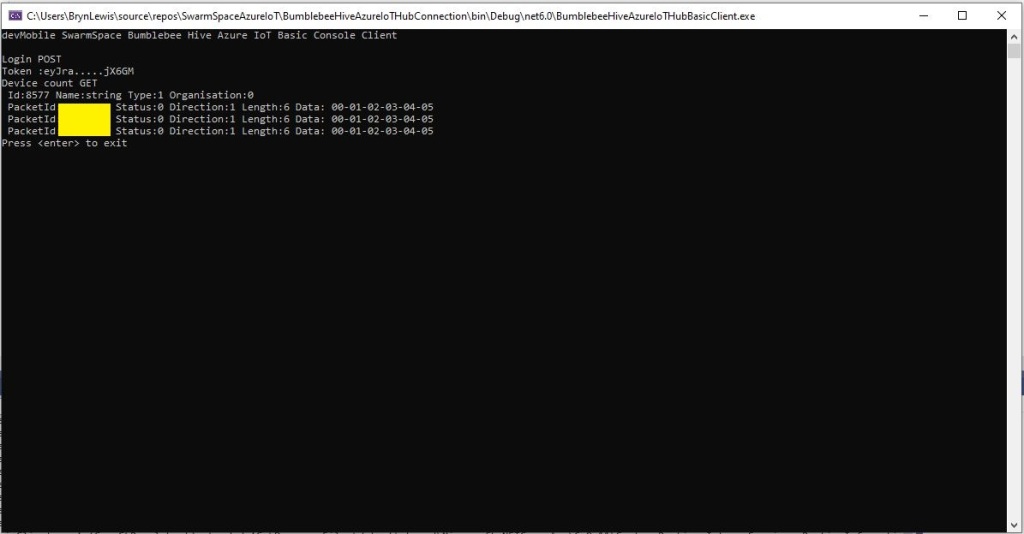

To figure out how to poll the Swarm Hive API I built yet another “nasty” Proof of Concept (PoC) which retrieves ToDevice and FromDevice messages. Initially I have focused on polling because the volume of messages from my single device is pretty low (WebHooks will be covered in a future post).
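Polling can be as simple as calling the Messages Get method on a fixed interval. A minimal sketch, assuming .NET 6 or later for PeriodicTimer; FetchMessagesAsync is a hypothetical stand-in for the Hive API call, not part of the Bumblebee Hive client:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

int pollCount = 0;

// Hypothetical stand-in for a Hive API "Messages Get" call, returns the number of messages retrieved
Task<int> FetchMessagesAsync(CancellationToken ct)
{
    pollCount++;
    return Task.FromResult(0);
}

// Poll immediately, then on every timer tick until cancelled.
// PeriodicTimer never runs polls concurrently; ticks missed during a slow poll are coalesced into one.
async Task PollAsync(Func<CancellationToken, Task<int>> fetch, TimeSpan interval, CancellationToken ct)
{
    using var timer = new PeriodicTimer(interval);
    try
    {
        do
        {
            int count = await fetch(ct);
            Console.WriteLine($"{DateTime.UtcNow:s} retrieved {count} message(s)");
        }
        while (await timer.WaitForNextTickAsync(ct));
    }
    catch (OperationCanceledException)
    {
        // Cancellation is the normal way to stop polling
    }
}

using var cts = new CancellationTokenSource(TimeSpan.FromMilliseconds(350));
await PollAsync(FetchMessagesAsync, TimeSpan.FromMilliseconds(100), cts.Token);
Console.WriteLine($"Polled {pollCount} times");
```

In a real application the interval would be minutes rather than milliseconds, the short values here just keep the sketch quick to run.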

Like my Azure IoT The Things Industries connector, I use Alastair Crabtree’s LazyCache to store Azure IoT Hub DeviceClient instances.
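LazyCache’s GetOrAddAsync ensures only one DeviceClient is created per key, even when several callers ask for it at the same time. The idea can be sketched with only ConcurrentDictionary and Lazy&lt;Task&lt;T&gt;&gt;. This illustrates the pattern, it is not how LazyCache is implemented and has no expiry or eviction; ConnectAsync and the device IDs are hypothetical stand-ins for opening a DeviceClient:

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Each key maps to a Lazy<Task<T>> so concurrent callers for the same key share
// one in-flight connection attempt instead of each opening their own.
var clients = new ConcurrentDictionary<string, Lazy<Task<string>>>();
int connectCalls = 0;

// Hypothetical stand-in for DeviceClient.CreateFromConnectionString + OpenAsync
async Task<string> ConnectAsync(string deviceId)
{
    Interlocked.Increment(ref connectCalls);
    await Task.Delay(10);
    return $"client-for-{deviceId}";
}

// GetOrAdd may build a spare Lazy under contention, but only the stored one is ever evaluated
Task<string> GetOrAddClientAsync(string deviceId) =>
    clients.GetOrAdd(deviceId, id => new Lazy<Task<string>>(() => ConnectAsync(id))).Value;

// Ten concurrent requests for two devices should only "connect" twice
string[] results = await Task.WhenAll(
    Enumerable.Range(0, 10).Select(i => GetOrAddClientAsync(i % 2 == 0 ? "12345" : "67890")));

Console.WriteLine($"Connect calls: {connectCalls}");
```

One thing to watch: this sketch also caches a faulted Task, so a failed connection would have to be removed from the dictionary before it could be retried.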

NOTE: Swarm Space technical support clarified the parameter values required to get FromDevice and ToDevice messages using the Bumblebee Hive API.

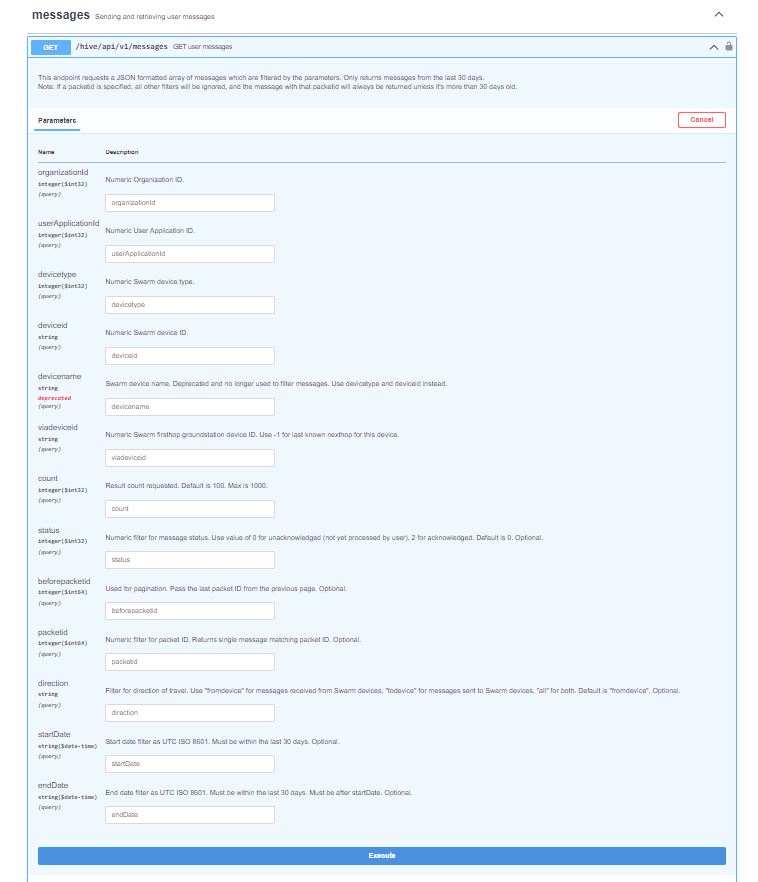

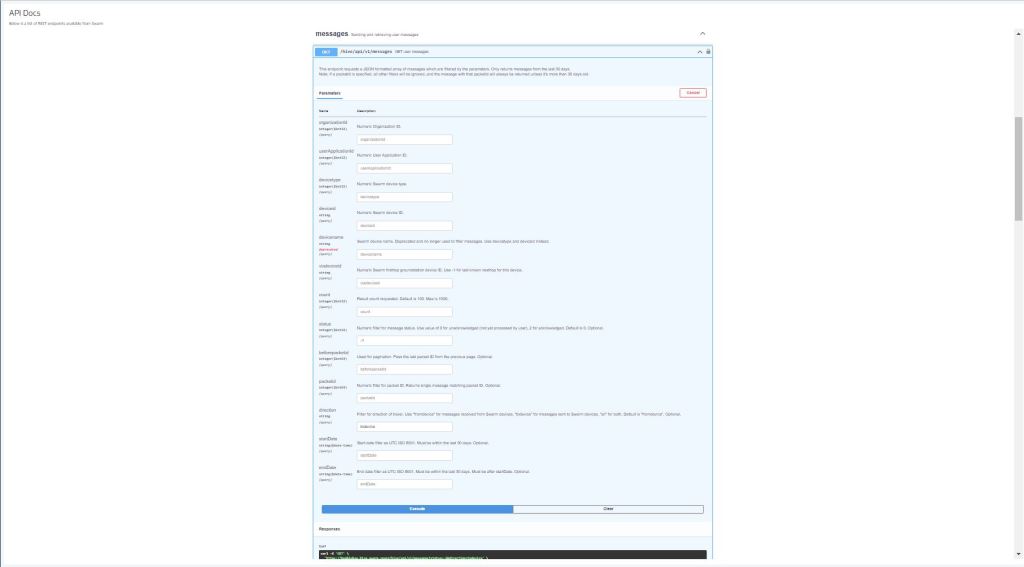

The Messages Get method has a lot of parameters for filtering and paging the returned message list. Many of the parameters have default values, so they can be null or left blank.
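Passing null for a parameter means “use the default”. A minimal sketch of how optional parameters are typically turned into a query string with the nulls left out; the parameter names (deviceid, direction, status, count) are assumptions for illustration only, check the Hive API reference for the real names. The status value of -1 reflects the Swarm technical support advice in the update at the end of this post:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Build a query string from optional parameters, skipping any that are null
// so the API's default values apply. Parameter names are hypothetical.
string BuildQuery(IEnumerable<(string Name, string? Value)> parameters) =>
    string.Join("&", parameters
        .Where(p => p.Value is not null)
        .Select(p => $"{Uri.EscapeDataString(p.Name)}={Uri.EscapeDataString(p.Value!)}"));

string query = BuildQuery(new (string, string?)[]
{
    ("deviceid", "12345"),
    ("direction", "fromdevice"),
    ("status", "-1"),
    ("count", null),     // null, so left out and the server default is used
});

Console.WriteLine(query); // deviceid=12345&direction=fromdevice&status=-1
```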

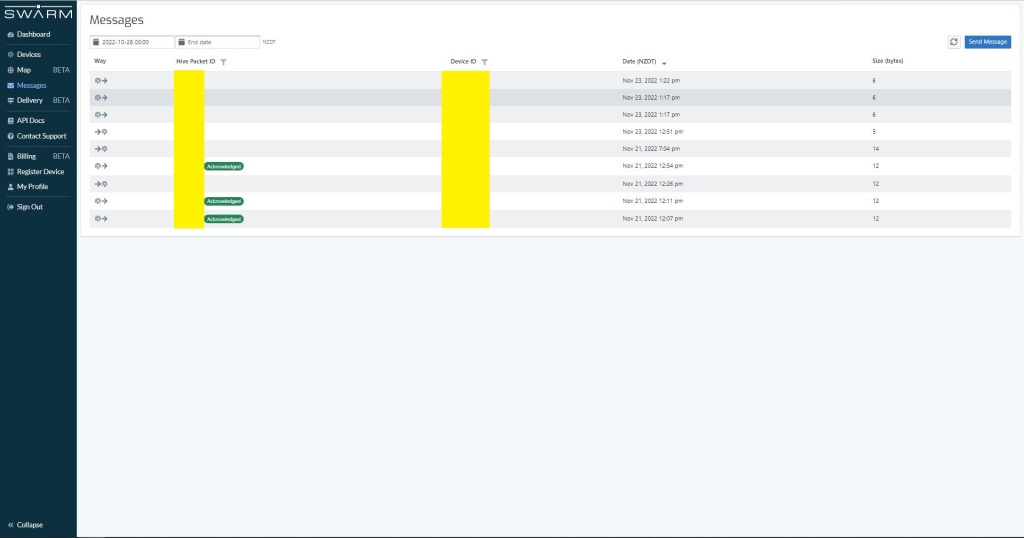

I started off by seeing if I could duplicate the functionality of the user interface and get a list of all ToDevice and FromDevice messages.

I first called the Messages Get method with the direction set to “fromdevice” (oddly, this is a string rather than an enumeration) and the messages I had sent from my SparkFun Satellite Transceiver Breakout – Swarm M138 were displayed.

I then called the Messages Get method with the direction set to “all” and only the FromDevice messages were displayed, which I wasn’t expecting.

I then called the Messages Get method with the direction set to “FromDevice” and no messages were displayed, which I wasn’t expecting either.

I then called the Message Get method with the messageId of a ToDevice message and the detailed message information was displayed.
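Since “fromdevice” worked but “FromDevice” returned nothing, the direction value appears to be case sensitive. Until there is an enumeration, a small guard that normalises the value before calling Messages Get avoids that surprise. A sketch; “fromdevice” and “all” are the values used above, “todevice” is an assumption:

```csharp
using System;
using System.Collections.Generic;

// "fromdevice" and "all" were used against the Hive API; "todevice" is an assumption
var knownDirections = new HashSet<string>(StringComparer.Ordinal) { "fromdevice", "todevice", "all" };

// Lower-case and validate a direction before passing it to Messages Get
string NormaliseDirection(string direction)
{
    string normalised = direction.Trim().ToLowerInvariant();
    if (!knownDirections.Contains(normalised))
    {
        throw new ArgumentException($"Unknown message direction '{direction}'", nameof(direction));
    }
    return normalised;
}

Console.WriteLine(NormaliseDirection("FromDevice")); // fromdevice
```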

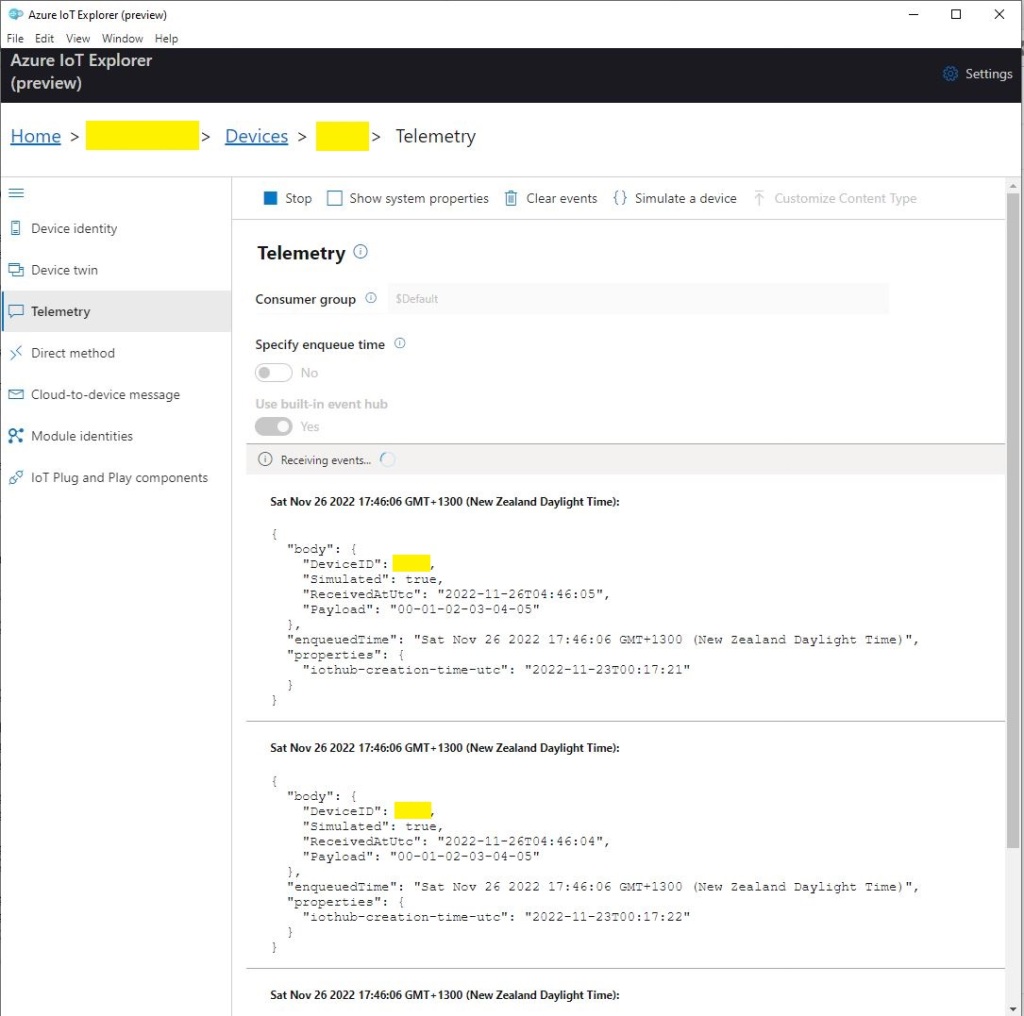

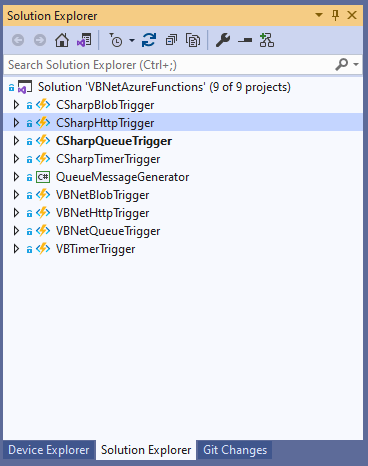

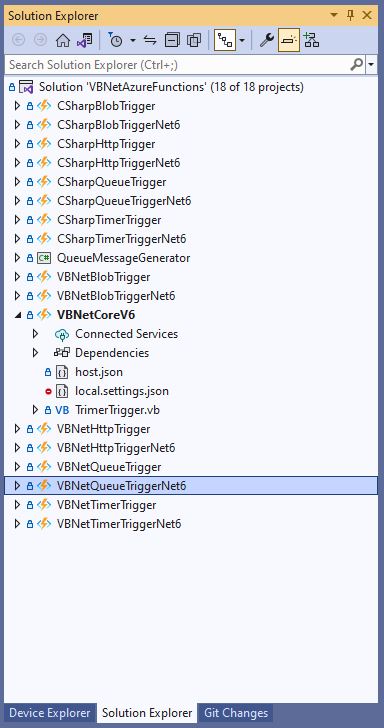

For testing I configured 5 devices (one real device and four simulated) in my Azure IoT Hub, with the Swarm Device ID used as the Azure IoT Hub device ID.

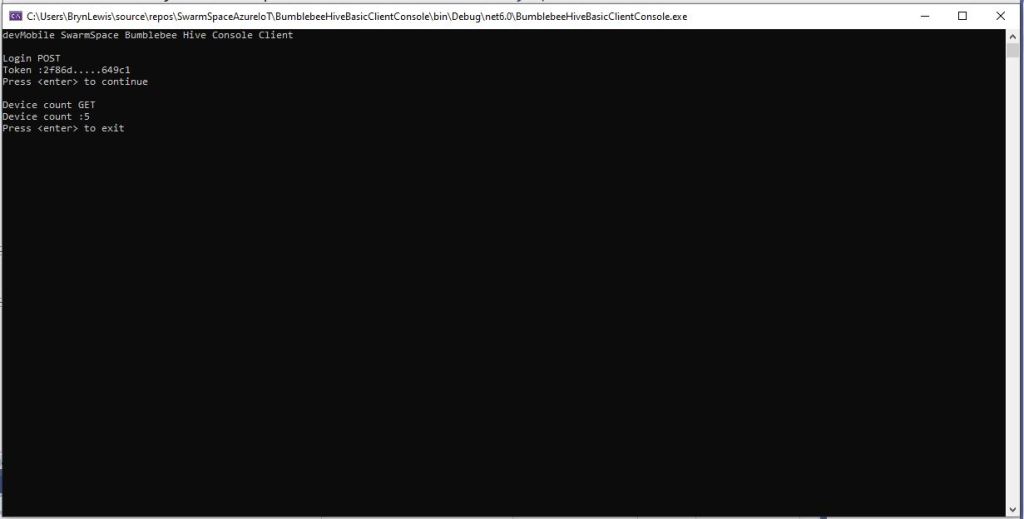

My console application calls the Swarm Bumblebee Hive API Login method, then the Devices Get and Messages Get methods, and uses the Azure IoT Hub DeviceClient SendEventAsync method to upload device telemetry.
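The console application adds the Login token with DefaultRequestHeaders.Add("Authorization", "bearer " + token). A sketch of the typed equivalent using AuthenticationHeaderValue, keeping the lower case “bearer” scheme the application uses, plus the first/last five characters masking it applies before logging the token. The token value is made up:

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Made-up token; in the console application it comes from the Hive API Login response
string token = "eyJhbGciOiJIUzI1NiJ9.example.signature";

using var httpClient = new HttpClient();

// Typed alternative to DefaultRequestHeaders.Add("Authorization", "bearer " + token)
httpClient.DefaultRequestHeaders.Authorization = new AuthenticationHeaderValue("bearer", token);

// Only log the first and last five characters of a token, fully mask short values
string Mask(string value) =>
    value.Length <= 10 ? new string('*', value.Length) : $"{value[..5]}.....{value[^5..]}";

Console.WriteLine(Mask(token)); // eyJhb.....ature
```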

The console application stores the Swarm Hive API username, password and the Azure IoT Hub connection string locally using the user secrets configuration provider (AddUserSecrets).

```csharp
using System;
using System.Diagnostics;
using System.Globalization;
using System.IO;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;

using LazyCache;
using Microsoft.Azure.Devices.Client;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Configuration;
using Newtonsoft.Json;
using Newtonsoft.Json.Linq;

internal class Program
{
    private static string AzureIoTHubConnectionString = "";

    // One Azure IoT Hub DeviceClient per Swarm device, keyed by Swarm device ID
    private readonly static IAppCache _DeviceClients = new CachingService();

    static async Task Main(string[] args)
    {
        Debug.WriteLine("devMobile.SwarmSpace.Hive.AzureIoTHubBasicClient starting");

        // Swarm Hive API credentials and the Azure IoT Hub connection string come from user secrets
        IConfiguration configuration = new ConfigurationBuilder()
            .SetBasePath(Directory.GetCurrentDirectory())
            .AddJsonFile("appsettings.json")
            .AddUserSecrets("b4073481-67e9-41bd-bf98-7d2029a0b391").Build();

        AzureIoTHubConnectionString = configuration.GetConnectionString("AzureIoTHub");

        using (HttpClient httpClient = new HttpClient())
        {
            BumblebeeHiveClient.Client client = new BumblebeeHiveClient.Client(httpClient);

            client.BaseUrl = configuration.GetRequiredSection("SwarmConnection").GetRequiredSection("BaseURL").Value;

            // Log in to the Swarm Hive API and use the returned token for subsequent requests
            BumblebeeHiveClient.LoginForm loginForm = new BumblebeeHiveClient.LoginForm();
            loginForm.Username = configuration.GetRequiredSection("SwarmConnection").GetRequiredSection("UserName").Value;
            loginForm.Password = configuration.GetRequiredSection("SwarmConnection").GetRequiredSection("Password").Value;

            BumblebeeHiveClient.Response response = await client.PostLoginAsync(loginForm);

            // Only log the first and last five characters of the token
            Debug.WriteLine($"Token :{response.Token[..5]}.....{response.Token[^5..]}");

            string apiKey = "bearer " + response.Token;
            httpClient.DefaultRequestHeaders.Add("Authorization", apiKey);

            var devices = await client.GetDevicesAsync(null, null, null, null, null, null, null, null, null);

            // Open (and cache) an Azure IoT Hub connection for each Swarm device
            foreach (BumblebeeHiveClient.Device device in devices)
            {
                Debug.WriteLine($" Id:{device.DeviceId} Name:{device.DeviceName} Type:{device.DeviceType} Organisation:{device.OrganizationId}");

                await _DeviceClients.GetOrAddAsync<DeviceClient>(device.DeviceId.ToString(), (ICacheEntry x) => IoTHubConnectAsync(device.DeviceId.ToString()), memoryCacheEntryOptions);
            }

            // Retrieve each device's messages and upload them to Azure IoT Hub as telemetry
            foreach (BumblebeeHiveClient.Device device in devices)
            {
                DeviceClient deviceClient = await _DeviceClients.GetAsync<DeviceClient>(device.DeviceId.ToString());

                // NOTE: Swarm technical support advised the status parameter needs to be set to -1 (see update at the end of this post)
                var messages = await client.GetMessagesAsync(null, null, null, device.DeviceId.ToString(), null, null, null, null, null, null, "all", null, null);

                foreach (var message in messages)
                {
                    Debug.WriteLine($" PacketId:{message.PacketId} Status:{message.Status} Direction:{message.Direction} Length:{message.Len} Data: {BitConverter.ToString(message.Data)}");

                    JObject telemetryEvent = new JObject
                    {
                        { "DeviceID", device.DeviceId },
                        { "ReceivedAtUtc", DateTime.UtcNow.ToString("s", CultureInfo.InvariantCulture) },
                        { "Payload", BitConverter.ToString(message.Data) },
                    };

                    using (Message telemetryMessage = new Message(Encoding.ASCII.GetBytes(JsonConvert.SerializeObject(telemetryEvent))))
                    {
                        // Use the message's HiveRxTime as the Azure IoT Hub message creation time
                        telemetryMessage.Properties.Add("iothub-creation-time-utc", message.HiveRxTime.ToString("s", CultureInfo.InvariantCulture));

                        await deviceClient.SendEventAsync(telemetryMessage);
                    }

                    // RxAck disabled while testing so the same messages can be retrieved repeatedly
                    //BumblebeeHiveClient.PacketPostReturn packetPostReturn = await client.AckRxMessageAsync(message.PacketId, null);
                }
            }

            // Close the Azure IoT Hub connections
            foreach (BumblebeeHiveClient.Device device in devices)
            {
                DeviceClient deviceClient = await _DeviceClients.GetAsync<DeviceClient>(device.DeviceId.ToString());

                await deviceClient.CloseAsync();
            }
        }
    }

    private static async Task<DeviceClient> IoTHubConnectAsync(string deviceId)
    {
        DeviceClient deviceClient = DeviceClient.CreateFromConnectionString(AzureIoTHubConnectionString, deviceId, TransportSettings);

        await deviceClient.OpenAsync();

        return deviceClient;
    }

    // DeviceClients are closed explicitly at the end of Main so they must never be evicted
    private static readonly MemoryCacheEntryOptions memoryCacheEntryOptions = new MemoryCacheEntryOptions()
    {
        Priority = CacheItemPriority.NeverRemove
    };

    // AMQP connection pooling so the devices share connections to Azure IoT Hub
    private static readonly ITransportSettings[] TransportSettings = new ITransportSettings[]
    {
        new AmqpTransportSettings(TransportType.Amqp_Tcp_Only)
        {
            AmqpConnectionPoolSettings = new AmqpConnectionPoolSettings()
            {
                Pooling = true,
            }
        }
    };
}
```

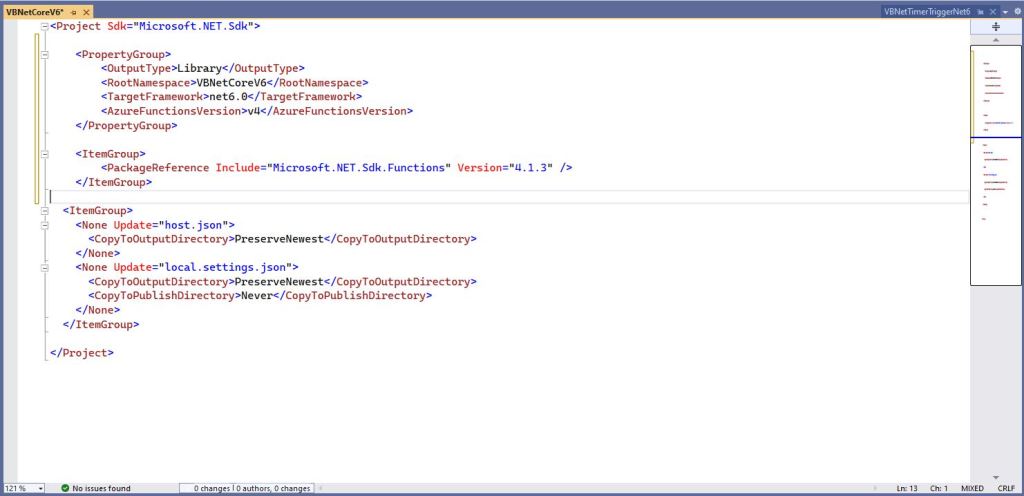
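The console application builds its telemetry JSON with Newtonsoft.Json’s JObject. If you would rather avoid the Newtonsoft dependency, a sketch of the same payload shape (DeviceID, ReceivedAtUtc and the hex encoded Payload) using System.Text.Json.Nodes, assuming .NET 6 or later; the device ID and payload bytes are made up:

```csharp
using System;
using System.Globalization;
using System.Text;
using System.Text.Json.Nodes;

// Made-up values standing in for a Hive API device and message
int deviceId = 12345;
byte[] data = { 0x48, 0x69, 0x0A };

// Same property names as the JObject version
var telemetryEvent = new JsonObject
{
    ["DeviceID"] = deviceId,
    ["ReceivedAtUtc"] = DateTime.UtcNow.ToString("s", CultureInfo.InvariantCulture),
    ["Payload"] = BitConverter.ToString(data),   // dash separated hex, "48-69-0A"
};

// Message body bytes that would be passed to the Azure IoT Hub Message constructor
byte[] body = Encoding.UTF8.GetBytes(telemetryEvent.ToJsonString());

Console.WriteLine(telemetryEvent.ToJsonString());
```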

While testing I disabled the message RxAck functionality (the AckRxMessageAsync call is commented out) so I could repeatedly call the Messages Get method without having to send new messages and burn through my 50 free messages.
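When RxAck is re-enabled, it is probably worth acknowledging a message only after it has been uploaded to Azure IoT Hub, so a failed upload leaves the message unacknowledged and (presumably) returned by the next poll. A sketch with a flag instead of a commented-out line; UploadAsync, AckAsync and the long packet IDs are hypothetical stand-ins for SendEventAsync and AckRxMessageAsync:

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical flag, false while testing so messages can be retrieved repeatedly
bool ackEnabled = false;
var uploaded = new List<long>();
var acked = new List<long>();

// Hypothetical stand-ins for DeviceClient.SendEventAsync and the Hive API RxAck call
Task UploadAsync(long packetId) { uploaded.Add(packetId); return Task.CompletedTask; }
Task AckAsync(long packetId) { acked.Add(packetId); return Task.CompletedTask; }

async Task ProcessAsync(IEnumerable<long> packetIds)
{
    foreach (long packetId in packetIds)
    {
        // Upload first; if this throws, the message is never acknowledged
        await UploadAsync(packetId);

        if (ackEnabled)
        {
            await AckAsync(packetId);
        }
    }
}

await ProcessAsync(new long[] { 1, 2, 3 });
Console.WriteLine($"Uploaded {uploaded.Count}, acknowledged {acked.Count}");
```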


Updated parameters based on feedback from Swarm technical support: the status parameter needs to be set to -1.