For the last month I have been working with preview releases of ML.NET, with a focus on the Open Neural Network Exchange (ONNX) support. As part of my “day job” we have been running Object Detection models on x64-based industrial computers, but we are looking at moving to ARM64 because devices which support -20° to +60° operation appear to be easier to source.

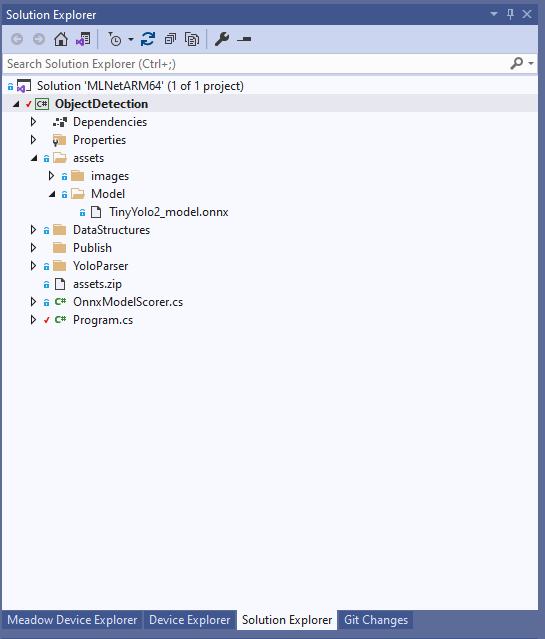

The first step of my Proof of Concept (PoC) was to get the ONNX Object Detection sample working on a Raspberry Pi 4 running the 64-bit version of Bullseye. I created a new solution which contained only the ONNX Object Detection console application, which would run on my desktop.

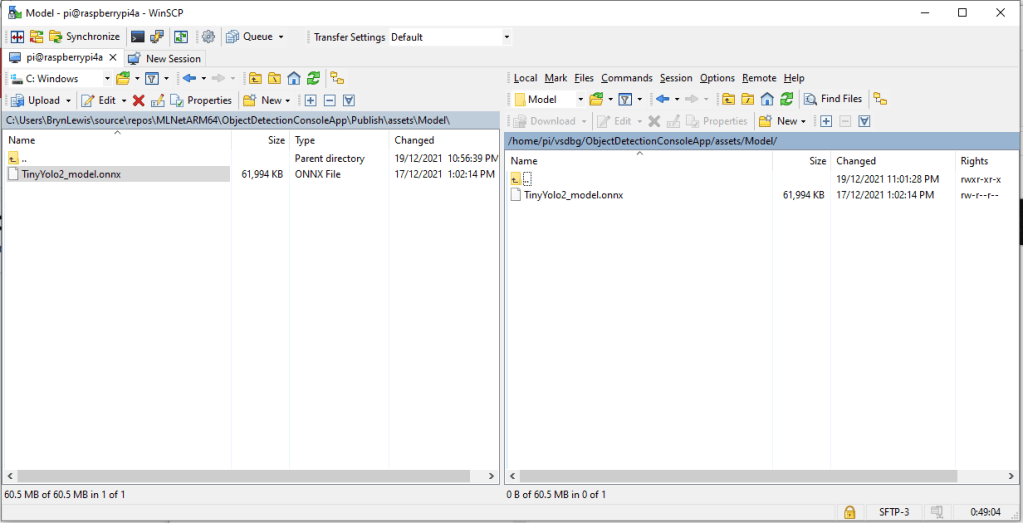

To deploy applications I sometimes copy the contents of the “publish” directory to the device with WinSCP.

I also use Visual Studio Code with some scripts, or a modified version of Raspberry Debugger which supports deploying and debugging applications on devices running a 64-bit OS.

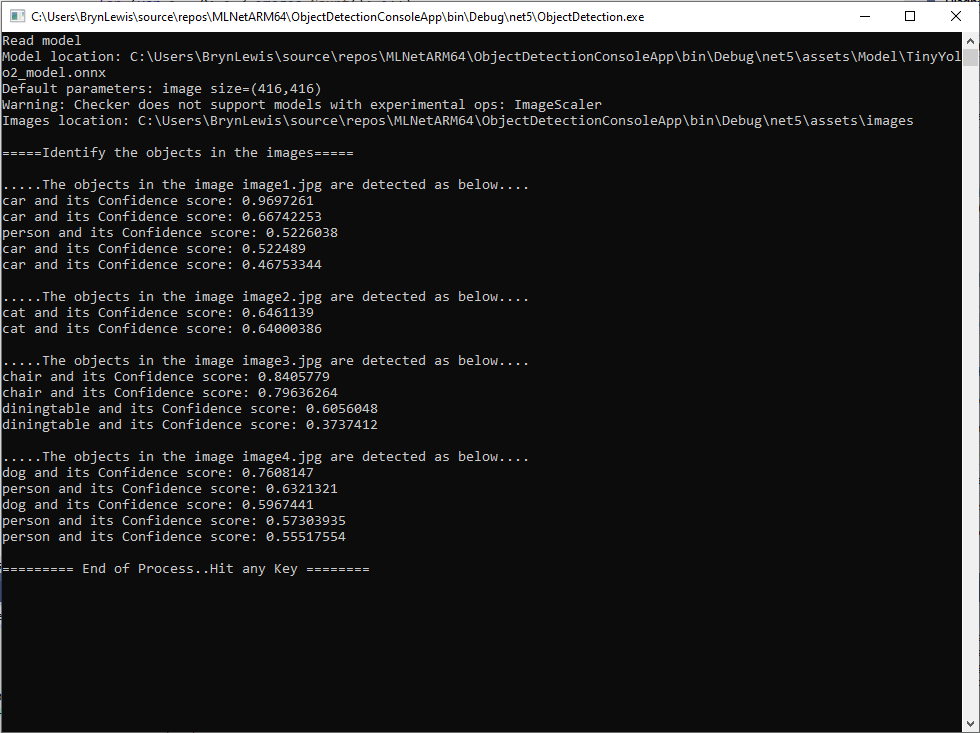

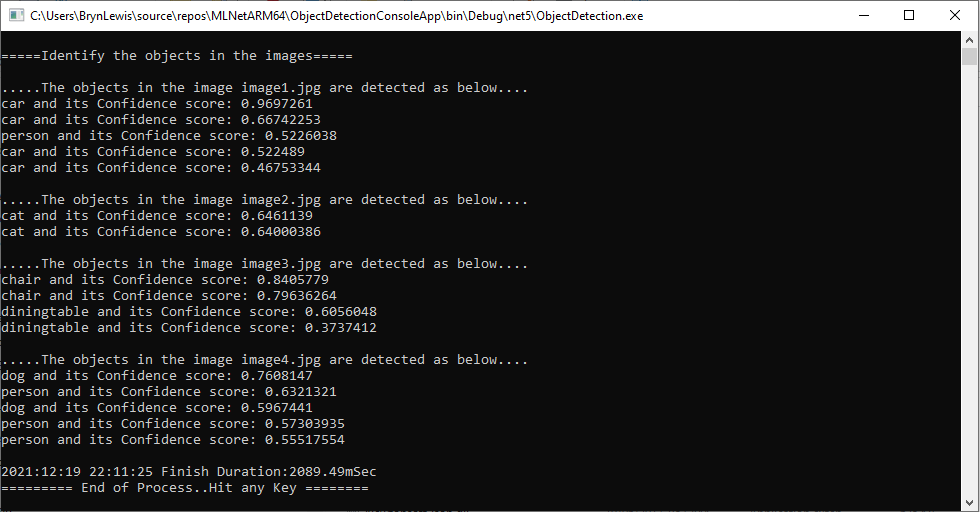

After I updated my NuGet packages to the “release” versions, the Object Detection console application ran on my desktop and processed the sample images.

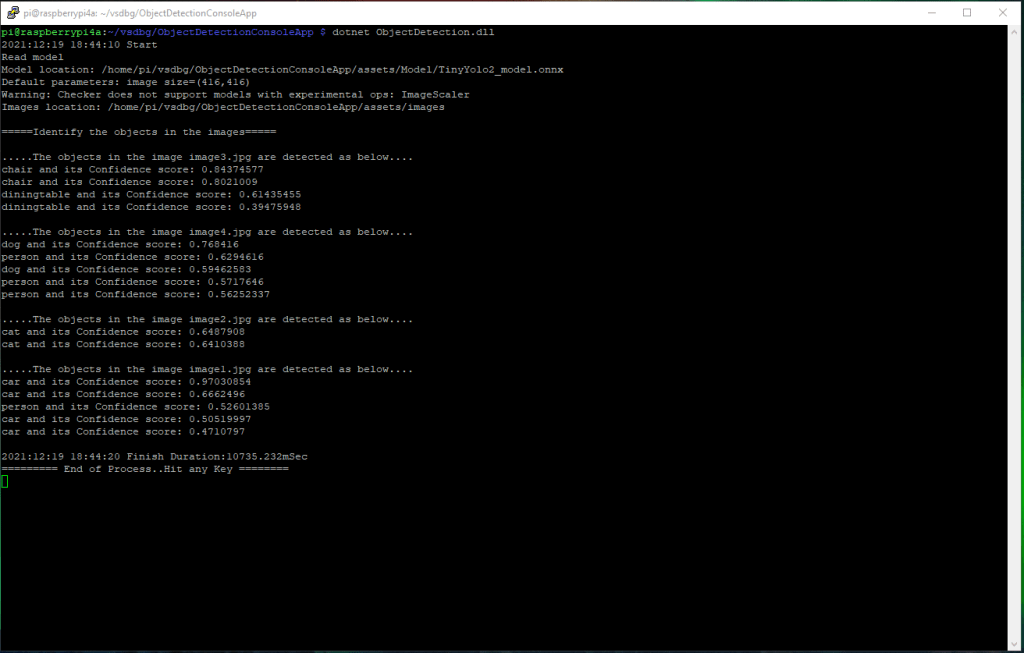

Getting the Object Detection console application running on my Raspberry Pi 4 took a couple of attempts…

The first issue was the location of the sample images, which I fixed by changing assetsRelativePath:

    using System;
    using System.IO;
    using System.Collections.Generic;
    using System.Drawing;
    using System.Drawing.Drawing2D;
    using System.Linq;
    using Microsoft.ML;
    using ObjectDetection.YoloParser;
    using ObjectDetection.DataStructures;

    namespace ObjectDetection
    {
        class Program
        {
            public static void Main()
            {
                var assetsRelativePath = @"assets";

                DateTime startedAtUtc = DateTime.UtcNow;

                Console.WriteLine($"{startedAtUtc:yyyy:MM:dd HH:mm:ss} Start");

                string assetsPath = GetAbsolutePath(assetsRelativePath);
                var modelFilePath = Path.Combine(assetsPath, "Model", "TinyYolo2_model.onnx");
                var imagesFolder = Path.Combine(assetsPath, "images");
                var outputFolder = Path.Combine(assetsPath, "images", "output");

                // Initialize MLContext
                MLContext mlContext = new MLContext();

                try
                {
                    // Load Data
                    IEnumerable<ImageNetData> images = ImageNetData.ReadFromFile(imagesFolder);
                    IDataView imageDataView = mlContext.Data.LoadFromEnumerable(images);

                    // Create instance of model scorer
                    var modelScorer = new OnnxModelScorer(imagesFolder, modelFilePath, mlContext);

                    // Use model to score data
                    IEnumerable<float[]> probabilities = modelScorer.Score(imageDataView);

                    // Post-process model output
                    YoloOutputParser parser = new YoloOutputParser();

                    var boundingBoxes =
                        probabilities
                        .Select(probability => parser.ParseOutputs(probability))
                        .Select(boxes => parser.FilterBoundingBoxes(boxes, 5, .5F));

                    // Draw bounding boxes for detected objects in each of the images
                    for (var i = 0; i < images.Count(); i++)
                    {
                        string imageFileName = images.ElementAt(i).Label;
                        IList<YoloBoundingBox> detectedObjects = boundingBoxes.ElementAt(i);

                        DrawBoundingBox(imagesFolder, outputFolder, imageFileName, detectedObjects);

                        LogDetectedObjects(imageFileName, detectedObjects);
                    }

                    DateTime finishedAtUtc = DateTime.UtcNow;
                    TimeSpan duration = finishedAtUtc - startedAtUtc;

                    Console.WriteLine($"{finishedAtUtc:yyyy:MM:dd HH:mm:ss} Finish Duration:{duration.TotalMilliseconds}mSec");
                }
                catch (Exception ex)
                {
                    Console.WriteLine(ex.ToString());
                }

                Console.WriteLine("========= End of Process..Hit any Key ========");
                Console.ReadLine();
            }

            public static string GetAbsolutePath(string relativePath)
            {
                FileInfo _dataRoot = new FileInfo(typeof(Program).Assembly.Location);
                string assemblyFolderPath = _dataRoot.Directory.FullName;

                string fullPath = Path.Combine(assemblyFolderPath, relativePath);

                return fullPath;
            }

            private static void DrawBoundingBox(string inputImageLocation, string outputImageLocation, string imageName, IList<YoloBoundingBox> filteredBoundingBoxes)
            {
                Image image = Image.FromFile(Path.Combine(inputImageLocation, imageName));

                var originalImageHeight = image.Height;
                var originalImageWidth = image.Width;

                foreach (var box in filteredBoundingBoxes)
                {
                    // Get Bounding Box Dimensions
                    var x = (uint)Math.Max(box.Dimensions.X, 0);
                    var y = (uint)Math.Max(box.Dimensions.Y, 0);
                    var width = (uint)Math.Min(originalImageWidth - x, box.Dimensions.Width);
                    var height = (uint)Math.Min(originalImageHeight - y, box.Dimensions.Height);

                    // Resize To Image
                    x = (uint)originalImageWidth * x / OnnxModelScorer.ImageNetSettings.imageWidth;
                    y = (uint)originalImageHeight * y / OnnxModelScorer.ImageNetSettings.imageHeight;
                    width = (uint)originalImageWidth * width / OnnxModelScorer.ImageNetSettings.imageWidth;
                    height = (uint)originalImageHeight * height / OnnxModelScorer.ImageNetSettings.imageHeight;

                    // Bounding Box Text
                    string text = $"{box.Label} ({(box.Confidence * 100).ToString("0")}%)";

                    using (Graphics thumbnailGraphic = Graphics.FromImage(image))
                    {
                        thumbnailGraphic.CompositingQuality = CompositingQuality.HighQuality;
                        thumbnailGraphic.SmoothingMode = SmoothingMode.HighQuality;
                        thumbnailGraphic.InterpolationMode = InterpolationMode.HighQualityBicubic;

                        // Define Text Options
                        Font drawFont = new Font("Arial", 12, FontStyle.Bold);
                        SizeF size = thumbnailGraphic.MeasureString(text, drawFont);
                        SolidBrush fontBrush = new SolidBrush(Color.Black);
                        Point atPoint = new Point((int)x, (int)y - (int)size.Height - 1);

                        // Define BoundingBox options
                        Pen pen = new Pen(box.BoxColor, 3.2f);
                        SolidBrush colorBrush = new SolidBrush(box.BoxColor);

                        // Draw text on image
                        thumbnailGraphic.FillRectangle(colorBrush, (int)x, (int)(y - size.Height - 1), (int)size.Width, (int)size.Height);
                        thumbnailGraphic.DrawString(text, drawFont, fontBrush, atPoint);

                        // Draw bounding box on image
                        thumbnailGraphic.DrawRectangle(pen, x, y, width, height);
                    }
                }

                if (!Directory.Exists(outputImageLocation))
                {
                    Directory.CreateDirectory(outputImageLocation);
                }

                image.Save(Path.Combine(outputImageLocation, imageName));
            }

            private static void LogDetectedObjects(string imageName, IList<YoloBoundingBox> boundingBoxes)
            {
                Console.WriteLine($".....The objects in the image {imageName} are detected as below....");

                foreach (var box in boundingBoxes)
                {
                    Console.WriteLine($"{box.Label} and its Confidence score: {box.Confidence}");
                }

                Console.WriteLine("");
            }
        }
    }
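
As an aside on resolving the assets folder: the listing locates it relative to `Assembly.Location`. The sketch below shows one possible alternative based on `AppContext.BaseDirectory`, which may be more robust if the application is ever published as a single file (where `Assembly.Location` returns an empty string). The `AssetPaths` class name is hypothetical and not part of the sample.

```csharp
using System;
using System.IO;

public static class AssetPaths
{
    // Resolve a path relative to the directory the application was started from.
    // Assumption: the "assets" folder is deployed alongside the executable.
    public static string GetAbsolutePath(string relativePath)
    {
        return Path.GetFullPath(Path.Combine(AppContext.BaseDirectory, relativePath));
    }
}
```

Calling `AssetPaths.GetAbsolutePath("assets")` should return a rooted path ending in `assets`, on both the desktop and the device.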

The next issue was the location of the ONNX model on the device. I modified the properties of the TinyYolo2_model.onnx file so that it is copied to the publish folder whenever it has been modified.
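
For reference, changing that file property in Visual Studio typically ends up as an entry like the one below in the project file. This is a sketch rather than a copy of my project: the item type (`None`) and the relative path are assumptions based on the folder names used in the code above.

```xml
<ItemGroup>
  <!-- Assumed path; "PreserveNewest" corresponds to "Copy if newer" in the Properties window -->
  <None Update="assets\Model\TinyYolo2_model.onnx">
    <CopyToOutputDirectory>PreserveNewest</CopyToOutputDirectory>
  </None>
</ItemGroup>
```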

I then checked this was working as expected with WinSCP.

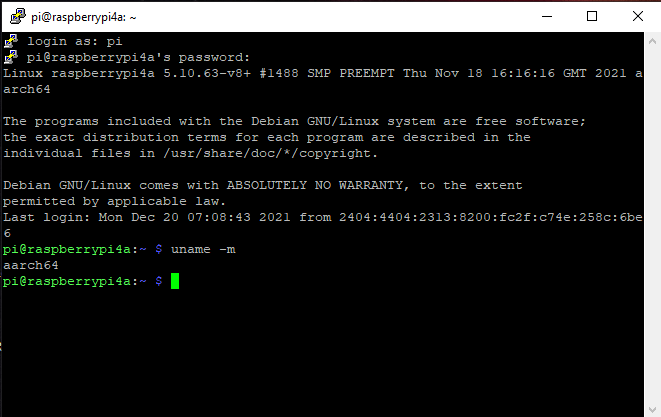

The platform-specific runtime was missing, so I confirmed the processor architecture with uname.

I noticed that the Object Detection console application took significantly longer to run on the Raspberry Pi 4, so I added some code to display the duration.
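
The same check can also be made from inside the application, which is handy when the console output is all you have. A minimal sketch using `System.Runtime.InteropServices.RuntimeInformation`; the `PlatformInfo` class name is hypothetical.

```csharp
using System;
using System.Runtime.InteropServices;

public static class PlatformInfo
{
    // Builds a one-line summary of the OS and processor architecture the process is running on.
    public static string Describe()
    {
        return $"OS: {RuntimeInformation.OSDescription}, " +
               $"OS architecture: {RuntimeInformation.OSArchitecture}, " +
               $"process architecture: {RuntimeInformation.ProcessArchitecture}";
    }
}
```

Writing `PlatformInfo.Describe()` to the console at startup shows whether the process is really running as ARM64 on the device, which is what determines the platform-specific runtime that has to be deployed.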
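
My timing code uses `DateTime.UtcNow` at the start and finish. If finer-grained numbers are needed, `System.Diagnostics.Stopwatch` is usually a better fit for measuring elapsed time. The helper below is a sketch, and `PhaseTimer` is a hypothetical name, not something in the sample.

```csharp
using System;
using System.Diagnostics;

public static class PhaseTimer
{
    // Runs the supplied action once and returns the elapsed wall-clock time.
    public static TimeSpan Measure(Action action)
    {
        Stopwatch stopwatch = Stopwatch.StartNew();
        action();
        stopwatch.Stop();
        return stopwatch.Elapsed;
    }
}
```

Wrapping the load, score and draw steps in separate `PhaseTimer.Measure(...)` calls would show which phase accounts for most of the time on the device.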

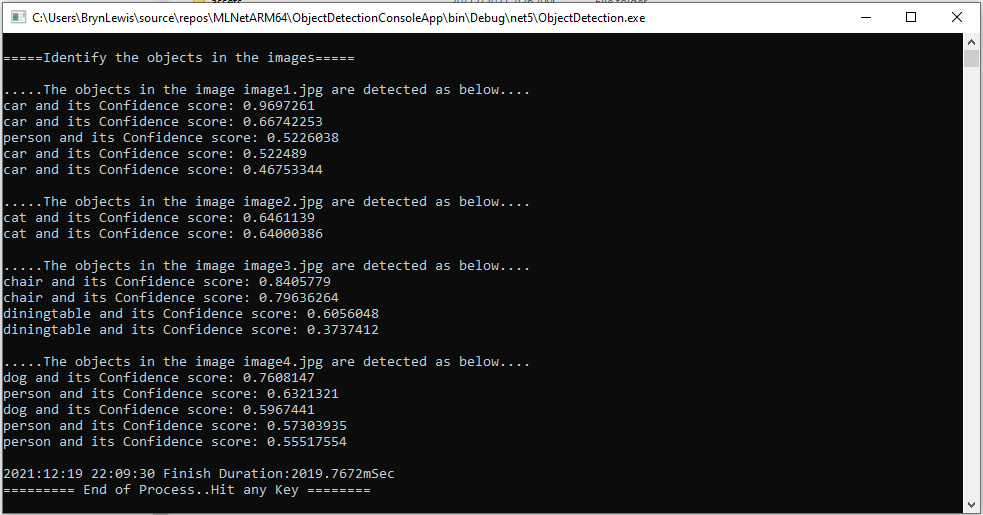

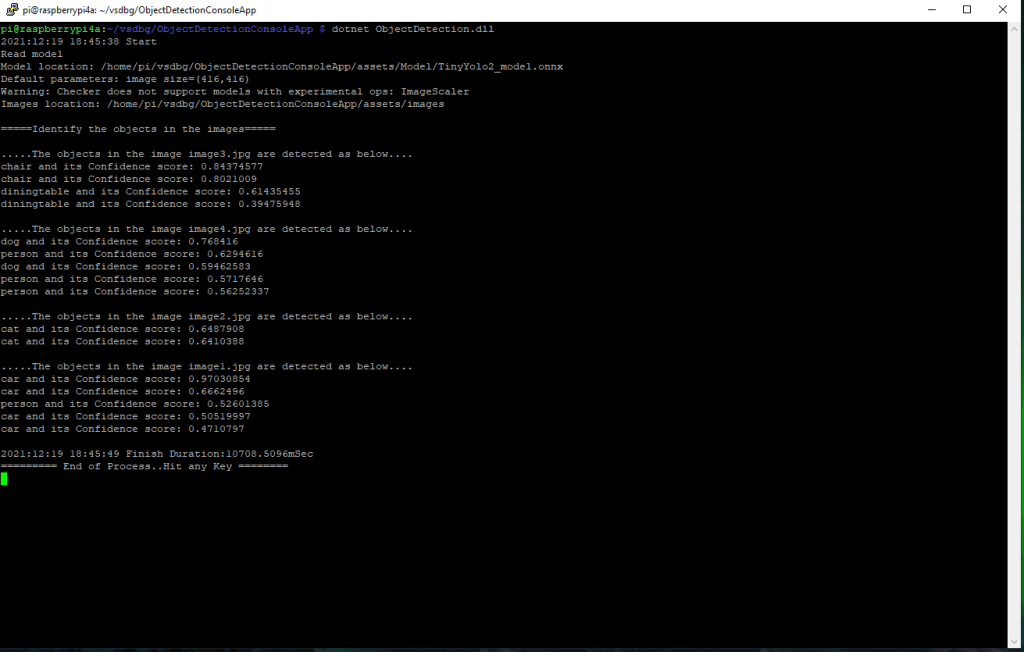

For my application I’m only interested in the Minimum Bounding Rectangles (MBRs), so I disabled the code for drawing MBRs on the images.

Removing the code for drawing the MBRs on the images improved performance less than I was expecting.
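
One thing that might be worth checking is how the results are read. In the listing above `boundingBoxes` is a deferred LINQ query, and `boundingBoxes.ElementAt(i)` re-enumerates it from the start on every loop iteration. Whether the model scoring is also repeated depends on how `Score` produces its sequence, which I have not verified. The sketch below uses a stand-in generator (all names are hypothetical) to count how much work indexed access causes with and without materializing the query first.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DeferredExecutionDemo
{
    // Stand-in for an expensive, lazily produced sequence (for example model outputs).
    // Every item yielded increments produced[0] so the amount of work can be counted.
    private static IEnumerable<int> ProduceOutputs(int count, int[] produced)
    {
        for (int i = 0; i < count; i++)
        {
            produced[0]++;
            yield return i;
        }
    }

    // Returns how many items the source had to produce to serve ElementAt(0..itemCount-1).
    public static int CountItemsProduced(int itemCount, bool materialize)
    {
        int[] produced = new int[1];

        // Deferred query, similar in shape to the Select chain in the listing.
        IEnumerable<int> parsed = ProduceOutputs(itemCount, produced).Select(output => output * 2);

        if (materialize)
        {
            // Run the query once and keep the results in memory.
            parsed = parsed.ToList();
        }

        for (int i = 0; i < itemCount; i++)
        {
            _ = parsed.ElementAt(i);
        }

        return produced[0];
    }
}
```

For four items the lazy version produces 1 + 2 + 3 + 4 = 10 items, while the materialized version produces only 4. If the same pattern applies to the scoring, calling `ToList()` on `boundingBoxes` before the loop would be a cheap experiment.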