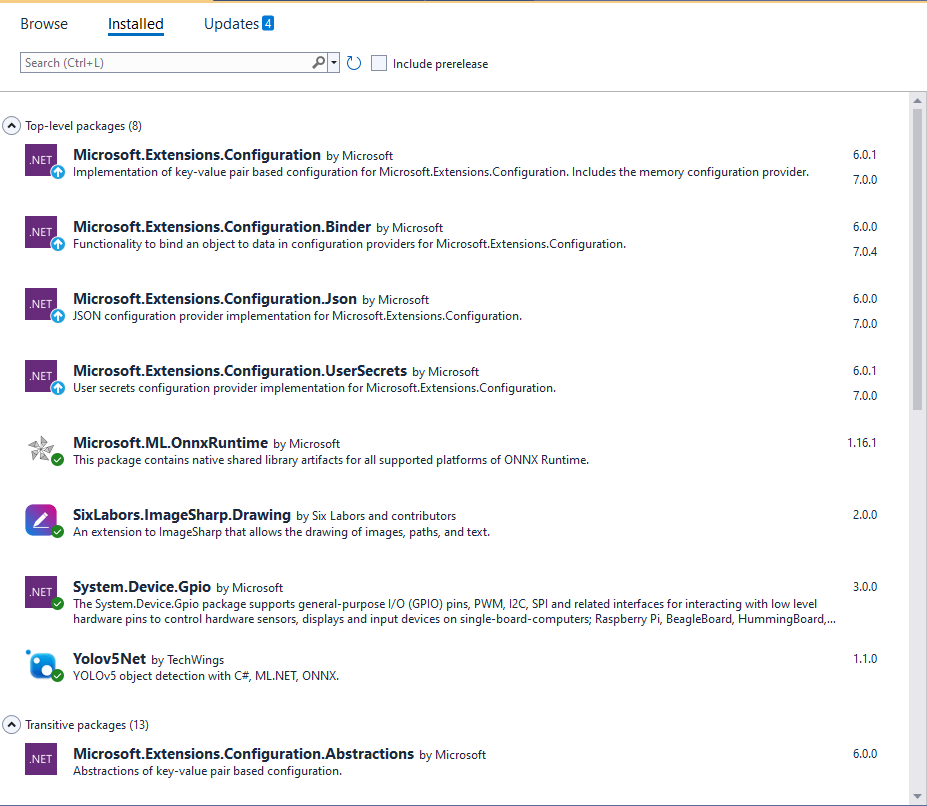

This post is about “revisiting” my ML.Net YoloV5 + Camera on ARM64 Raspberry Pi application, updating it to .NET 6, the latest version of the TechWings yolov5-net library (formerly from mentalstack) and the latest version of the ML.Net Open Neural Network Exchange (ONNX) libraries.

The updated TechWings yolov5-net library now uses Six Labors ImageSharp for markup rather than System.Drawing.Common, which from .NET 6 is only supported on Windows (I found System.Drawing.Common a massive Pain in the Arse (PiTA)).

private static async void ImageUpdateTimerCallback(object state)

{

DateTime requestAtUtc = DateTime.UtcNow;

// Just incase - stop code being called while photo already in progress

if (_cameraBusy)

{

return;

}

_cameraBusy = true;

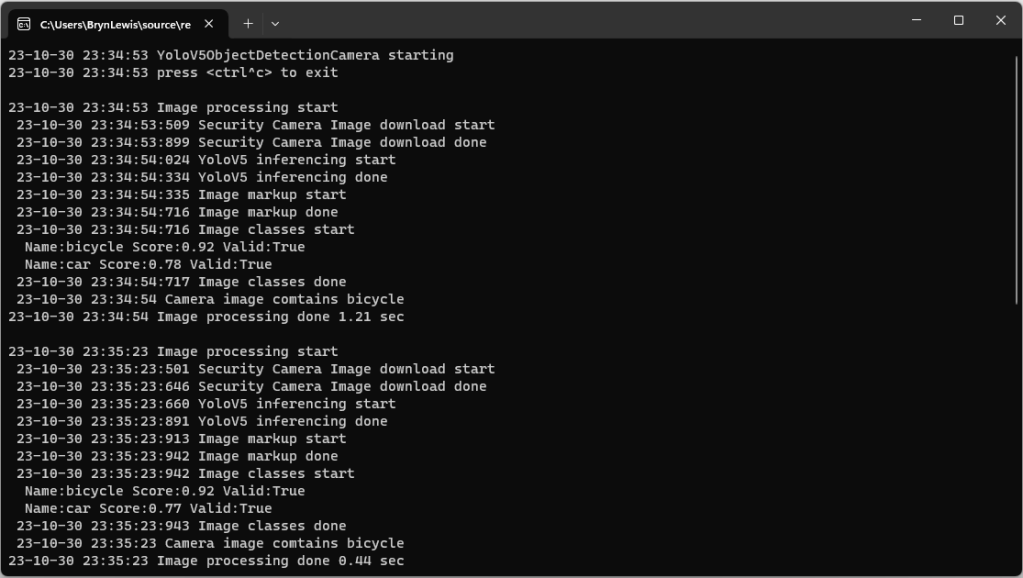

Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss} Image processing start");

try

{

#if SECURITY_CAMERA

Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} Security Camera Image download start");

using (Stream cameraStream = await _httpClient.GetStreamAsync(_applicationSettings.CameraUrl))

using (Stream fileStream = File.Create(_applicationSettings.ImageInputFilenameLocal))

{

await cameraStream.CopyToAsync(fileStream);

}

Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} Security Camera Image download done");

#endif

List<YoloPrediction> predictions;

// Process the image on local file system

using (Image<Rgba32> image = await Image.LoadAsync<Rgba32>(_applicationSettings.ImageInputFilenameLocal))

{

Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} YoloV5 inferencing start");

predictions = _scorer.Predict(image);

Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} YoloV5 inferencing done");

#if OUTPUT_IMAGE_MARKUP

Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} Image markup start");

var font = new Font(new FontCollection().Add(_applicationSettings.ImageOutputMarkupFontPath), _applicationSettings.ImageOutputMarkupFontSize);

foreach (var prediction in predictions) // iterate predictions to draw results

{

double score = Math.Round(prediction.Score, 2);

var (x, y) = (prediction.Rectangle.Left - 3, prediction.Rectangle.Top - 23);

image.Mutate(a => a.DrawPolygon(Pens.Solid(prediction.Label.Color, 1),

new PointF(prediction.Rectangle.Left, prediction.Rectangle.Top),

new PointF(prediction.Rectangle.Right, prediction.Rectangle.Top),

new PointF(prediction.Rectangle.Right, prediction.Rectangle.Bottom),

new PointF(prediction.Rectangle.Left, prediction.Rectangle.Bottom)

));

image.Mutate(a => a.DrawText($"{prediction.Label.Name} ({score})",

font, prediction.Label.Color, new PointF(x, y)));

}

await image.SaveAsJpegAsync(_applicationSettings.ImageOutputFilenameLocal);

Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} Image markup done");

#endif

}

#if PREDICTION_CLASSES

Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} Image classes start");

foreach (var prediction in predictions)

{

Console.WriteLine($" Name:{prediction.Label.Name} Score:{prediction.Score:f2} Valid:{prediction.Score > _applicationSettings.PredictionScoreThreshold}");

}

Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss:fff} Image classes done");

#endif

#if PREDICTION_CLASSES_OF_INTEREST

IEnumerable<string> predictionsOfInterest = predictions.Where(p => p.Score > _applicationSettings.PredictionScoreThreshold).Select(c => c.Label.Name).Intersect(_applicationSettings.PredictionLabelsOfInterest, StringComparer.OrdinalIgnoreCase);

if (predictionsOfInterest.Any())

{

Console.WriteLine($" {DateTime.UtcNow:yy-MM-dd HH:mm:ss} Camera image comtains {String.Join(",", predictionsOfInterest)}");

}

#endif

}

catch (Exception ex)

{

Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss} Camera image download, upload or post procesing failed {ex.Message}");

}

finally

{

_cameraBusy = false;

}

TimeSpan duration = DateTime.UtcNow - requestAtUtc;

Console.WriteLine($"{DateTime.UtcNow:yy-MM-dd HH:mm:ss} Image processing done {duration.TotalSeconds:f2} sec");

Console.WriteLine();

}
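The `_cameraBusy` check and the assignment that follows it are two separate steps, and `System.Threading.Timer` invokes its callback on thread pool threads. Because the callback is `async void`, the timer regards it as finished at the first `await`, so if a download or inference ever took longer than `ImageTimerPeriod`, two callbacks could in principle both read `false` before either one set it. With a 30 second period and sub-second inferencing this is unlikely, but a minimal sketch of an atomic guard using `Interlocked.CompareExchange` could look like this. The class and member names (`NonOverlappingProcessor`, `TryRunAsync`) are hypothetical, not part of the application or the yolov5-net library.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical guard which stops overlapping runs of an async operation
public sealed class NonOverlappingProcessor
{
    private int _busy;      // 0 = idle, 1 = a run is in progress
    private int _completed; // runs which finished
    private int _skipped;   // runs rejected because another was in progress

    public int Completed => Volatile.Read(ref _completed);
    public int Skipped => Volatile.Read(ref _skipped);

    // Returns false (without running work) if a previous run is still in progress
    public async Task<bool> TryRunAsync(Func<Task> work)
    {
        // Atomically change _busy from 0 to 1; any other previous value means another run owns it
        if (Interlocked.CompareExchange(ref _busy, 1, 0) != 0)
        {
            Interlocked.Increment(ref _skipped);
            return false;
        }

        try
        {
            await work();
            Interlocked.Increment(ref _completed);
            return true;
        }
        finally
        {
            // Release the guard even if work throws
            Volatile.Write(ref _busy, 0);
        }
    }
}
```

In the timer callback the existing flag test could then be replaced by something like `await _guard.TryRunAsync(ProcessImageAsync);`, where `_guard` and `ProcessImageAsync` (the download, inferencing and markup steps moved into an `async Task` method) are also hypothetical names.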

The names of the input image, output image and YoloV5 model file are configured in the appsettings.json (on device) or secrets.json (Visual Studio 2022 desktop) file. The location (ImageOutputMarkupFontPath) and size (ImageOutputMarkupFontSize) of the font are configurable to make it easier to run the application on different devices and operating systems.

```json
{
  "ApplicationSettings": {
    "ImageTimerDue": "0.00:00:15",
    "ImageTimerPeriod": "0.00:00:30",

    "CameraUrl": "HTTP://10.0.0.56:85/images/snapshot.jpg",
    "CameraUserName": "",
    "CameraUserPassword": "",

    "ImageInputFilenameLocal": "InputLatest.jpg",
    "ImageOutputFilenameLocal": "OutputLatest.jpg",

    "ImageOutputMarkupFontPath": "C:/Windows/Fonts/consola.ttf",
    "ImageOutputMarkupFontSize": 16,

    "YoloV5ModelPath": "YoloV5/yolov5s.onnx",

    "PredictionScoreThreshold": 0.5,
    "PredictionLabelsOfInterest": [
      "bicycle",
      "person",
      "bench"
    ]
  }
}
```
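The application loads these values from appsettings.json or secrets.json, but the loading code is not shown in this post. To stay free of package dependencies, the following sketch uses only System.Text.Json, and its `ApplicationSettings`, `SettingsFile` and `SettingsHelper` types are hypothetical stand-ins rather than the application's own classes. It illustrates two things: the `"0.00:00:15"` timer values are `d.hh:mm:ss` strings which `TimeSpan.Parse` reads as 15 and 30 seconds, and `PredictionScoreThreshold` plus `PredictionLabelsOfInterest` drive the same Where/Select/Intersect filter as the `PREDICTION_CLASSES_OF_INTEREST` block. Here that filter runs over simple `(Label, Score)` tuples instead of `YoloPrediction` objects.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

#nullable enable

// Hypothetical settings class; property names match the keys in appsettings.json.
// Keys without a matching property (camera, file and font settings) are ignored by default.
public class ApplicationSettings
{
    public string ImageTimerDue { get; set; } = "0.00:00:15";
    public string ImageTimerPeriod { get; set; } = "0.00:00:30";
    public string YoloV5ModelPath { get; set; } = "";
    public double PredictionScoreThreshold { get; set; }
    public List<string> PredictionLabelsOfInterest { get; set; } = new();

    // TimeSpan.Parse reads "d.hh:mm:ss", so "0.00:00:15" is 15 seconds
    public TimeSpan TimerDue => TimeSpan.Parse(ImageTimerDue);
    public TimeSpan TimerPeriod => TimeSpan.Parse(ImageTimerPeriod);
}

// Wrapper matching the top level "ApplicationSettings" object in the file
public class SettingsFile
{
    public ApplicationSettings? ApplicationSettings { get; set; }
}

public static class SettingsHelper
{
    public static ApplicationSettings Load(string json)
    {
        SettingsFile? file = JsonSerializer.Deserialize<SettingsFile>(json);
        return file?.ApplicationSettings
            ?? throw new InvalidOperationException("ApplicationSettings section missing");
    }

    // Score above threshold, label in the list of interest (case insensitive);
    // Intersect also removes duplicate labels
    public static IEnumerable<string> LabelsOfInterest(
        IEnumerable<(string Label, float Score)> predictions, ApplicationSettings settings)
    {
        return predictions
            .Where(p => p.Score > settings.PredictionScoreThreshold)
            .Select(p => p.Label)
            .Intersect(settings.PredictionLabelsOfInterest, StringComparer.OrdinalIgnoreCase);
    }
}
```

The parsed values could then schedule the callback with `System.Threading.Timer`, for example `new Timer(ImageUpdateTimerCallback, null, settings.TimerDue, settings.TimerPeriod)`.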

The test rig consisted of a Unv ADZK-10 security camera, a Power over Ethernet (PoE) module and my development desktop PC.

Once the YoloV5s model was loaded, inferencing was taking roughly 0.47 seconds.
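The start and done log lines in the callback are timed with `DateTime.UtcNow`. For a steadier figure than a single run, one option is to time several inferences with `System.Diagnostics.Stopwatch` and discard the first few, since they can include one-off initialisation costs. This is an assumption, not necessarily how the 0.47 second figure was measured. `InferenceTimer` and `AverageDuration` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Linq;

// Hypothetical helper: average duration of an action, excluding warm-up runs
public static class InferenceTimer
{
    public static TimeSpan AverageDuration(Action action, int warmUpRuns, int timedRuns)
    {
        if (timedRuns <= 0)
        {
            throw new ArgumentOutOfRangeException(nameof(timedRuns));
        }

        // Warm-up runs (e.g. the first inference after the model is loaded) are not timed
        for (int i = 0; i < warmUpRuns; i++)
        {
            action();
        }

        var durations = new List<TimeSpan>(timedRuns);
        var stopwatch = new Stopwatch();

        for (int i = 0; i < timedRuns; i++)
        {
            stopwatch.Restart();
            action();
            stopwatch.Stop();
            durations.Add(stopwatch.Elapsed);
        }

        // Mean of the timed runs, in ticks
        return TimeSpan.FromTicks((long)durations.Average(d => d.Ticks));
    }
}
```

For example, `InferenceTimer.AverageDuration(() => _scorer.Predict(image), 1, 10)` would run one untimed inference and then average ten timed ones.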

Summary

Again, I was “standing on the shoulders of giants”; the TechWings code just worked. With a pretrained YoloV5 model and the ML.Net Open Neural Network Exchange (ONNX) plumbing, it took a couple of hours to update the application, and most of that time was spent learning how to use the Six Labors ImageSharp library to mark up the images.